Tokenization in NLP: Breaking Down Language for Machines

Understanding how machines split text into tokens (words, subwords, or characters) to make sense of human language.

“Before machines can understand us, they need to know where one word ends and another begins.”

🧠 Introduction: Why Tokenization Matters

Natural Language Processing (NLP) has made astounding progress—from spam filters to chatbots to sophisticated language models like GPT-3. But at the heart of every NLP system lies a deceptively simple preprocessing step: tokenization.

Tokenization is how raw text is broken into tokens: the units an NLP model actually processes, each mapped to an integer ID in a fixed vocabulary. Without it, strings like “can’t”, “data-driven”, or even an emoji like 🧠 would have no consistent representation a machine could work with.

This blog dives into what tokenization is, the types of tokenizers, the techniques behind them, and how this foundational step powers everything from search engines to large-scale language models.

📚 What Is a Token?

In NLP, a token is a meaningful unit of text. This could be:

- A word: ["I", "love", "AI"]

- A subword: ["un", "believ", "able"]

- A character: ["H", "e", "l", "l", "o"]

- A symbol, hashtag, or emoji: [":", ")", "#AI", "😊"]

What’s “meaningful” depends on the tokenizer type—and that makes all the difference downstream.
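To see that the choice of unit really is a choice, the same short string can be cut three ways with nothing but built-in Python. The byte view is included because byte-level tokenizers, such as GPT-2's BPE, start from UTF-8 bytes rather than characters; the example string is arbitrary.

```python
text = "Hello 😊"

# Word-level: split on whitespace.
word_tokens = text.split()

# Character-level: one token per Unicode code point.
char_tokens = list(text)

# Byte-level: one token per UTF-8 byte (the emoji alone takes 4 bytes).
byte_tokens = list(text.encode("utf-8"))

print(word_tokens)       # ['Hello', '😊']
print(len(char_tokens))  # 7
print(len(byte_tokens))  # 10
```

The emoji is one character but four bytes, which is why byte-level vocabularies never hit an unknown symbol yet may spend several tokens on a single rare character.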

🧰 Types of Tokenization Techniques

1. Whitespace & Rule-Based Tokenization (Classical NLP)

- Simplest method—split on spaces or punctuation.

- Still used for traditional NLP pipelines.

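- Example:

"Let's learn AI!" → ["Let's", "learn", "AI!"] (whitespace only)

"Let's learn AI!" → ["Let", "'", "s", "learn", "AI", "!"] (with rules that split off punctuation)

✅ Pros: Fast, interpretable

❌ Cons: Handles contractions inconsistently; fails on languages written without spaces (e.g., Chinese)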
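Both behaviours in the example, plus the failure on unspaced text, can be reproduced with Python's standard library. The regular expression is one illustrative rule set, not the one any particular NLP toolkit uses.

```python
import re

sentence = "Let's learn AI!"

# Whitespace tokenization: split on runs of whitespace only.
whitespace_tokens = sentence.split()

# Rule-based tokenization (one illustrative rule set): a run of word
# characters, or any single character that is neither a word character
# nor whitespace.
rule_tokens = re.findall(r"\w+|[^\w\s]", sentence)

# Text written without spaces is not split at all.
chinese_tokens = "我喜欢学习".split()

print(whitespace_tokens)  # ["Let's", 'learn', 'AI!']
print(rule_tokens)        # ['Let', "'", 's', 'learn', 'AI', '!']
print(chinese_tokens)     # ['我喜欢学习']
```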

2. WordPiece (Used in BERT)

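- Learns a vocabulary of subword units from corpus statistics; at inference, each word is split greedily into the longest pieces found in that vocabulary.

- Handles unseen words better than a word-level vocabulary by composing them from known pieces; "##" marks a piece that continues a word.

"unbelievable" → ["un", "##believ", "##able"] (illustrative; the actual split depends on the vocabulary)

✅ Pros: Reduces out-of-vocab (OOV) issues

❌ Cons: Slower than plain splitting; pieces like “##believ” are harder to interpret directly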
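WordPiece inference is commonly described as greedy longest-match-first: take the longest prefix of the word that is in the vocabulary, then repeat on the remainder with the "##" prefix. Below is a minimal sketch with a hypothetical five-entry vocabulary; a real BERT vocabulary has about 30,000 entries, and BERT also lowercases (in uncased models) and splits off punctuation before this step.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first segmentation of a single word."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation of the same word
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matches: the whole word is unknown
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary.
vocab = {"un", "believ", "##believ", "##able", "##e"}

print(wordpiece_tokenize("unbelievable", vocab))  # ['un', '##believ', '##able']
print(wordpiece_tokenize("believable", vocab))    # ['believ', '##able']
print(wordpiece_tokenize("xyz", vocab))           # ['[UNK]']
```

Because the match is greedy, the result depends entirely on which pieces the vocabulary happens to contain, which is why the same word can split differently across models.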

3. Byte Pair Encoding (BPE) – GPT-2/3

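- Starts with individual characters (bytes, in GPT-2's byte-level variant) and iteratively merges the most frequent adjacent pair into a new symbol.

- Merges build up words step by step: "l" + "o" → "lo", then "lo" + "w" → "low"; frequent words such as "lower" can end up as single tokens.

- GPT-2 and GPT-3 use a fixed vocabulary learned this way (50,257 tokens).

✅ Pros: Compact vocabulary, efficient

❌ Cons: Segmentation mirrors the training corpus, so languages and domains underrepresented in it get split into many more tokens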
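The merge loop can be sketched in a few lines of Python. This is the classic character-level formulation on a tiny made-up corpus: it omits the end-of-word markers many implementations add and the byte-level alphabet GPT-2 actually starts from, and it breaks ties by first occurrence.

```python
from collections import Counter


def count_pairs(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def merge_symbols(symbols, pair):
    """Replace every occurrence of `pair` in a symbol sequence with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merges from a whitespace-separated corpus."""
    words = Counter(tuple(word) for word in corpus.split())  # words as character tuples
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        merges.append(best)
        new_words = Counter()
        for symbols, freq in words.items():
            new_words[merge_symbols(symbols, best)] += freq
        words = new_words
    return merges, words


def apply_bpe(word, merges):
    """Encode a (possibly unseen) word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return list(symbols)


merges, vocab_words = learn_bpe("low low low low lower lower lowest", num_merges=4)
print(merges)                       # [('l', 'o'), ('lo', 'w'), ('low', 'e'), ('lowe', 'r')]
print(apply_bpe("slower", merges))  # ['s', 'lower']
```

The word "slower" never appears in the corpus, yet it is encoded from pieces learned on other words; this is how BPE sidesteps out-of-vocabulary failures.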

4. SentencePiece (Used in T5, ALBERT)

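- Treats input as a raw stream of Unicode characters and encodes spaces as the symbol “▁”, so no whitespace pre-segmentation is needed and the original text can be restored exactly.

- Supports multilingual text and languages written without spaces; it can train either a BPE or a unigram language-model vocabulary.

✅ Pros: Works well for languages such as Chinese and Japanese; needs no language-specific preprocessing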

❌ Cons: Difficult to debug manually
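Two ideas from this section can be sketched in plain Python: the “▁” escaping that makes decoding lossless, and unigram-style segmentation, which picks the split with the highest total log-probability using dynamic programming (Viterbi). The piece scores below are made-up numbers; a real SentencePiece model learns them from data, and none of this is the sentencepiece library's own API.

```python
import math

META = "\u2581"  # "▁", the symbol SentencePiece uses in place of a space


def escape(text):
    """Replace spaces with ▁ and mark the start of the text with a leading ▁."""
    return META + text.replace(" ", META)


def unigram_segment(text, log_probs):
    """Return the segmentation of `text` that maximizes the sum of piece log-probabilities."""
    n = len(text)
    best_score = [-math.inf] * (n + 1)
    best_start = [0] * (n + 1)
    best_score[0] = 0.0
    for end in range(1, n + 1):
        for start in range(end):
            piece = text[start:end]
            if piece in log_probs and best_score[start] > -math.inf:
                score = best_score[start] + log_probs[piece]
                if score > best_score[end]:
                    best_score[end] = score
                    best_start[end] = start
    if best_score[n] == -math.inf:
        raise ValueError("no segmentation possible with this vocabulary")
    pieces, end = [], n
    while end > 0:  # follow the back-pointers from the end of the text
        start = best_start[end]
        pieces.append(text[start:end])
        end = start
    return pieces[::-1]


def decode(pieces):
    """Undo the escaping: ▁ becomes a space and the leading marker is dropped."""
    text = "".join(pieces).replace(META, " ")
    return text[1:] if text.startswith(" ") else text


# Hypothetical piece log-probabilities ("\u2019" is the curly apostrophe ’).
log_probs = {
    META + "I": -2.0, META + "can": -3.0, "\u2019": -4.0, "t": -3.5,
    META + "wait": -3.0, "!": -2.5,
    # Single characters as low-probability fallbacks.
    META: -8.0, "I": -8.0, "c": -8.0, "a": -8.0, "n": -8.0, "w": -8.0, "i": -8.0,
}

pieces = unigram_segment(escape("I can\u2019t wait!"), log_probs)
print(pieces)          # ['▁I', '▁can', '’', 't', '▁wait', '!']
print(decode(pieces))  # I can’t wait!
```

Because spaces survive as “▁”, decoding is a plain string join followed by a character replacement, with no language-specific rules.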

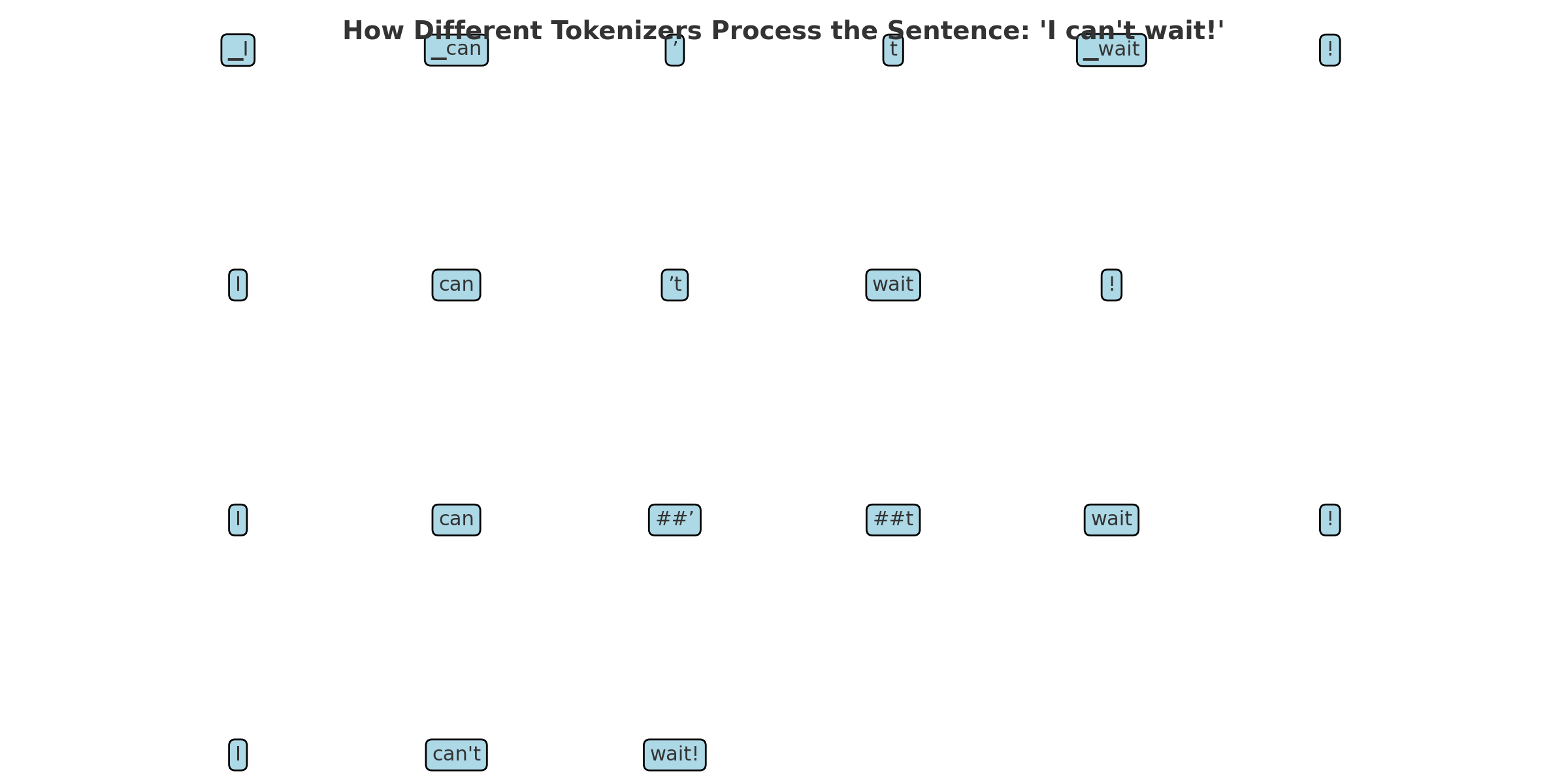

🧪 Visual Comparison

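| Input Sentence | Tokenizer | Output Tokens |
|---|---|---|
| I can’t wait! | Whitespace | [“I”, “can’t”, “wait!”] |
| I can’t wait! | WordPiece | [“I”, “can”, “’”, “t”, “wait”, “!”] |
| I can’t wait! | BPE | [“I”, “can”, “’t”, “wait”, “!”] |
| I can’t wait! | SentencePiece | [“▁I”, “▁can”, “’”, “t”, “▁wait”, “!”] |

Outputs are illustrative: exact splits depend on each model's vocabulary and pre-tokenization rules. BERT, for instance, splits punctuation such as ’ off before applying WordPiece, so those pieces carry no “##” prefix.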

🔄 The Tokenization Pipeline in Practice

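- Input Text → Raw user text, HTML, or other formatted content

- Preprocessing → Normalize Unicode and whitespace, strip markup, optionally lowercase (model-specific)

- Tokenizer → Subword segmentation (WordPiece, BPE, etc.)

- Mapping → Look up each token's integer ID in the vocabulary for model input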
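The four steps can be wired together in a toy pipeline. Everything here is a hypothetical stand-in: the normalization choices, the regex tokenizer (in place of a trained subword tokenizer), and the tiny vocabulary.

```python
import re
import unicodedata

# Hypothetical vocabulary; ID 0 is reserved for unknown tokens.
VOCAB = {"[UNK]": 0, "let": 1, "'": 2, "s": 3, "learn": 4, "ai": 5, "!": 6}


def normalize(text):
    # Preprocessing: Unicode NFKC normalization plus lowercasing
    # (whether to lowercase is a model-specific choice).
    return unicodedata.normalize("NFKC", text).lower()


def tokenize(text):
    # Stand-in tokenizer: runs of word characters and single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)


def to_ids(tokens, vocab=VOCAB):
    # Mapping: look up each token, falling back to the [UNK] ID.
    return [vocab.get(tok, vocab["[UNK]"]) for tok in tokens]


def encode(text):
    return to_ids(tokenize(normalize(text)))


print(encode("Let's learn AI!"))   # [1, 2, 3, 4, 5, 6]
print(encode("Let's learn NLP!"))  # [1, 2, 3, 4, 0, 6]
```

The second call shows the weakness subword tokenizers address: "nlp" is missing from the word-level vocabulary, so all of its information collapses into the single [UNK] ID.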

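In HuggingFace Transformers, a pretrained tokenizer loaded with AutoTokenizer.from_pretrained runs these steps in a single call and returns the token IDs the model expects; its decode method maps IDs back to text.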

💡 Why It Matters for Large Language Models

LLMs like GPT-3, BERT, and T5 do not understand words the way humans do. They process sequences of token IDs, not strings.

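- Fewer tokens = less computation (in a standard Transformer, self-attention cost grows with the square of the sequence length)

- Fewer tokens per text = more content fits in the model's fixed context window

- Mis-tokenized input (e.g., rare words shattered into many fragments) = poorer predictions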

The efficiency, accuracy, and scalability of your model hinge on the quality of tokenization.
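A back-of-the-envelope comparison shows why token count matters. Full self-attention scores every pair of positions, so the score matrix holds n × n entries per head per layer. The sentence is an arbitrary example, and word-level splitting is used only as a contrast; subword tokenizers typically land between the two counts.

```python
text = "tokenization matters for language models"

counts = {
    "character": len(list(text)),  # one token per character, spaces included
    "word": len(text.split()),     # one token per whitespace-separated word
}

for name, n in counts.items():
    # Full self-attention compares every position with every position.
    print(f"{name}-level: {n} tokens -> {n * n} attention scores per head per layer")
# character-level: 40 tokens -> 1600 attention scores per head per layer
# word-level: 5 tokens -> 25 attention scores per head per layer
```

An 8× reduction in tokens yields a 64× reduction in attention scores, which is why vocabulary design directly affects cost.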

🔍 Advanced Concepts

- Detokenization: Mapping token IDs back to readable text.

- Tokenizer Vocabulary: Fixed in size before training, typically 30,000 to 50,000 entries (bert-base-uncased uses 30,522; GPT-2 uses 50,257).

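- Token Overlap: When an input is longer than the model's maximum length, it is split into windows that share some tokens, so an answer or key phrase cut at a window boundary is more likely to appear whole in one window. This matters most in question answering and long-document classification.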
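Two of these ideas in miniature: gluing WordPiece pieces back into words, and cutting a long ID sequence into overlapping windows. Both functions are hypothetical helpers written for illustration; real tokenizer libraries ship their own decoding and windowing logic with their own parameter conventions.

```python
def detokenize_wordpiece(tokens):
    """Join WordPiece tokens, attaching '##' continuation pieces to the previous word."""
    words = []
    for tok in tokens:
        if tok.startswith("##") and words:
            words[-1] += tok[2:]
        else:
            words.append(tok)
    return " ".join(words)


def overlapping_windows(ids, max_len, overlap):
    """Split `ids` into windows of at most `max_len`, each sharing `overlap` IDs with the previous one."""
    if not 0 <= overlap < max_len:
        raise ValueError("need 0 <= overlap < max_len")
    step = max_len - overlap
    windows, start = [], 0
    while True:
        windows.append(ids[start:start + max_len])
        if start + max_len >= len(ids):  # this window reaches the end
            return windows
        start += step


print(detokenize_wordpiece(["i", "love", "un", "##believ", "##able", "models"]))
# i love unbelievable models
print(overlapping_windows(list(range(10)), max_len=4, overlap=2))
# [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

Larger overlaps make it more likely that any given span fits inside a single window, at the cost of processing more windows.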

🧭 Choosing the Right Tokenizer

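| Task | Recommended Tokenizer |
|---|---|
| Sentiment Analysis | WordPiece (BERT) |
| Text Generation | BPE (GPT-2/3) |
| Translation | SentencePiece (T5) |
| Custom Corpus | Train your own BPE |

In practice, the tokenizer comes with the pretrained model: if you fine-tune BERT, you use BERT's WordPiece vocabulary. The table therefore reflects common model choices for each task.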

📘 Final Thoughts

Tokenization might seem like a “setup step,” but it’s where language meets math. Understanding this phase helps debug model errors, improve performance, and even build your own transformers.

Next time you see token counts or model limits (e.g., 4096 tokens), remember: tokenization is the translator between us and the machines.