Spark Basics: A Complete Guide to Installing and Using Apache Spark on macOS Sierra

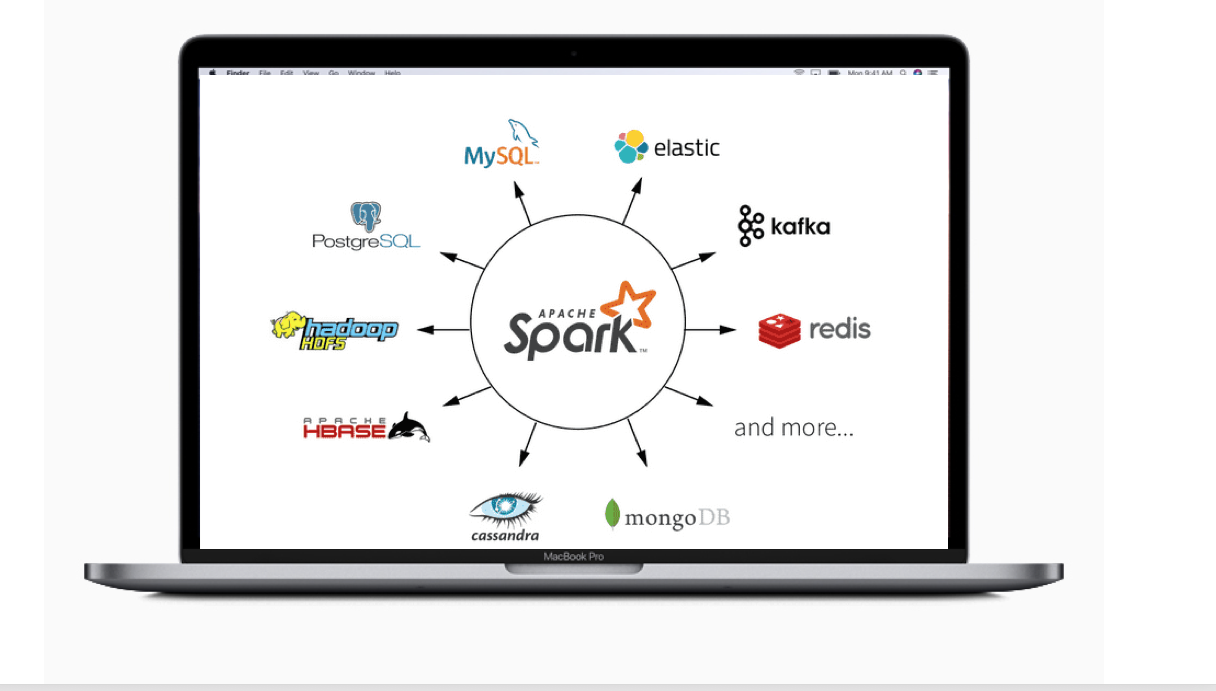

Apache Spark is a powerful open-source tool designed for large-scale data processing, analytics, and machine learning. This guide walks you through installing Apache Spark on macOS Sierra, explains its core components, and provides practical project examples and real-life scenarios to help you understand its capabilities.

Table of Contents

- Introduction to Apache Spark

- Setting Up Apache Spark on macOS Sierra

- Understanding Core Components of Apache Spark

- Getting Started with Spark Shell

- Sample Projects and Real-Life Scenarios Using Spark

- Real-Life Applications of Apache Spark

- Next Steps in Learning Apache Spark

1. Introduction to Apache Spark

Apache Spark is a unified analytics engine for processing large volumes of data quickly and effectively. Its in-memory computation, combined with rich libraries and APIs for batch and real-time processing, makes it a go-to tool for data engineers and data scientists.

- Key Features of Apache Spark:

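- Speed: Keeps intermediate results in memory instead of writing them to disk between steps, which makes iterative and interactive workloads much faster than on disk-based engines such as Hadoop MapReduce.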

- Ease of Use: Provides APIs in Scala, Java, Python, and R.

- Unified Engine: Supports batch, streaming, machine learning, and graph processing workloads.

2. Setting Up Apache Spark on macOS Sierra

Hardware and Software Prerequisites

- Hardware:

- Model: MacBook Pro (MacBookPro12,1)

- Processor: Intel Core i7, 3.1 GHz

- Memory: 16 GB

- Software:

- OS: macOS Sierra (10.12)

- Package Manager: Homebrew 1.18

- Apache Spark 2.0.0

Step-by-Step Installation Guide

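- Install Xcode 8.0

Download and install it from Apple’s Xcode page. If needed, point the command-line tools at the new Xcode with `sudo xcode-select -s /Applications/Xcode.app/Contents/Developer`.

- Install Homebrew

Open Terminal (Cmd + Space, then type Terminal) and run the installation command shown on the Homebrew home page (brew.sh).

- Install Apache Spark

Spark runs on the Java Virtual Machine, so check that a Java runtime is available (`java -version` should print a version). Then use Homebrew to install Spark with `brew install apache-spark`. Homebrew installs the formula’s current release, which may be newer than the 2.0.0 listed above.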

3. Understanding Core Components of Apache Spark

Apache Spark offers several components, each suited for different types of data processing tasks:

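- Spark Core: Provides the basic engine, including task scheduling and dispatching, memory management, fault recovery, and the RDD (Resilient Distributed Dataset) API that the other components build on.

- Spark SQL: Supports SQL queries and the DataFrame API on structured data, and can read from sources such as Hive tables, Parquet, JSON, and JDBC databases.

- Spark Streaming: Processes live data streams in small micro-batches, using a programming model similar to that of batch jobs.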

- MLlib: A library for machine learning, providing algorithms for classification, regression, and clustering.

- GraphX: Spark’s API for graph processing.

4. Getting Started with Spark Shell

Once Spark is installed, test it by launching one of its interactive shells. Spark ships one shell for Scala and one for Python.

- Scala Spark Shell: run `spark-shell` in Terminal.

- Python Spark Shell (PySpark): run `pyspark` in Terminal.

In the shell, you can interactively test Spark commands, load data, and perform transformations to get familiar with Spark’s capabilities.

5. Sample Projects and Real-Life Scenarios Using Spark

To illustrate how Spark can be used practically, here are some sample projects with code snippets and steps:

Project 1: Data Cleansing and Transformation

- Scenario: Cleansing customer data to remove duplicates, standardize phone numbers, and validate email addresses.

- Steps:

- Load data with Spark SQL.

- Use UDFs (User Defined Functions) for custom transformations.

- Deduplicate records using similarity scoring functions.

Code Sample:

Project 2: Real-Time Data Monitoring for IoT Devices

- Scenario: Monitor IoT sensor data to detect anomalies in real time.

- Steps:

- Use Spark Streaming to ingest sensor data.

- Apply MLlib models to classify normal vs. anomalous data.

- Send alerts when thresholds are breached.

Code Sample:

Project 3: Customer Churn Prediction

- Scenario: Predict churn based on customer behavior.

- Steps:

- Aggregate historical customer data.

- Train a churn prediction model using MLlib.

- Score new data to identify potential churners.

Code Sample:

6. Real-Life Applications of Apache Spark

Beyond specific projects, here are common use cases for Apache Spark in different industries:

- Retail: Real-time recommendation engines for personalized shopping experiences.

- Finance: Fraud detection through pattern recognition in near real-time.

- Healthcare: Risk prediction using historical and real-time patient data.

- Manufacturing: Predictive maintenance by monitoring machine data to detect faults early.

7. Next Steps in Learning Apache Spark

To deepen your understanding of Apache Spark, follow these steps:

- Mastering Spark Components

- Dive into Spark SQL, Streaming, and MLlib for advanced analytics.

- Practice building complex queries and transformations.

- Data Engineering with Spark

- Build ETL pipelines using Spark and practice connecting to different data sources like HDFS, Parquet, and NoSQL databases.

- Optimizing Spark for Performance

- Study techniques like caching, partitioning, and resource tuning to maximize efficiency.

- Understand Spark’s Catalyst optimizer and Tungsten execution engine.

- Advanced Real-Life Projects

- Implement real-time analytics, such as fraud detection and recommendation systems.

- Experiment with large datasets on platforms like AWS EMR or Databricks.

- Certification and Learning Resources

- Consider certifications like Databricks Certified Associate Developer for Apache Spark.

- Recommended resources: “Learning Spark: Lightning-Fast Big Data Analysis” and Databricks’ online tutorials.

Spark Basics: Essential Resources

As you explore Spark, here are some valuable resources to help you dive deeper into working with Spark DataFrames in Python:

- PySpark Cheat Sheet: Spark DataFrames in Python: This cheat sheet provides a quick overview of the most essential PySpark DataFrame commands and transformations.

- PySpark Cheat Sheet: Spark in Python: A handy guide to help you get started with Spark in Python, covering key commands for data manipulation and transformation.

These resources offer a quick reference to make working with Spark in Python more intuitive and productive.

Conclusion

With this guide, you’ve taken the first steps toward understanding and using Apache Spark. From installation to sample projects and real-life applications, you now have a foundation for exploring Spark further. Following the next steps outlined above will build your confidence in using Spark for data processing, machine learning, and real-time analytics, all valuable skills in the big data landscape.