Essential Metrics for Evaluating Large Language Models: From Perplexity to Human Preference: Key LLM Evaluation Metrics to Measure Language Model Success

Why LLM Evaluation Makes or Breaks Your AI Success

Picture this: you’ve spent months and a small fortune developing a new large language model. On launch day, however, it starts giving out nonsensical or even harmful advice to real users. This nightmare scenario is more common than you might think, and it’s almost always preventable. A solid evaluation strategy using the right LLM evaluation metrics is what separates a genuinely helpful AI from a costly failure.

Without proper evaluation, you’re essentially building a car and shipping it without a single test drive. It might look sleek in the workshop, but you have no idea if the brakes work or if it will veer off the road at high speeds. Similarly, an LLM can ace standard academic benchmarks yet fail miserably when faced with the messy, unpredictable queries of real people.

The Shortcomings of Traditional Testing

You can’t apply old-school software testing methods to generative AI. Traditional tests rely on predictable inputs and deterministic outputs—if you input 2+2, the output is always 4. But how do you write a simple pass/fail test for creativity, helpfulness, or logical consistency?

This is where the real challenges begin:

- No Single “Correct” Answer: If you ask an LLM to summarize a document, there are countless ways it can produce a great summary. There isn’t one right answer to check against.

- Data Contamination: It’s a known secret that some models have been accidentally trained on the very benchmark datasets used to test them. This leads to inflated scores that don’t reflect real-world ability.

- Prompt Sensitivity: A tiny tweak to a prompt can send a model’s output in a completely different direction, making it difficult to assess performance consistently.

- Evolving Capabilities: Newer models are so advanced that their answers can sometimes be better than the human-written “ground truth” examples used for comparison, making older metrics less reliable.

Because of these complexities, leading AI teams know that relying on simple accuracy scores is not enough. They build complete evaluation frameworks that mix automated metrics with crucial human feedback. There are many cautionary tales of models that confidently invented fake legal cases or gave out dangerous medical advice—costly mistakes that a thorough evaluation process would have caught early.

Building Confidence Before Deployment

At its core, the goal of using LLM evaluation metrics is to build confidence. You need to be sure your model is not just working, but is also reliable, safe, and aligned with what your users actually need. A rigorous, continuous evaluation process helps you:

- Validate User Satisfaction: Confirm the AI is providing useful and positive interactions.

- Ensure Output Coherence: Check that the generated text is logical, readable, and stays on topic.

- Mitigate Risks: Proactively find and fix issues related to bias, toxic language, and other harmful outputs.

- Guide Improvements: Use evaluation data to pinpoint specific weaknesses, which tells your team exactly what to work on next.

By treating evaluation as an ongoing cycle instead of a final step, you can transform a potential public relations disaster into a successful and trusted AI product.

Perplexity Demystified: Your Model’s Confidence Meter

When you start exploring LLM evaluation metrics, one of the first concepts you’ll encounter is perplexity. Think of it as your model’s confidence meter. It measures how “surprised” a model is by a sequence of words. A low perplexity score means the model is very confident in its predictions, much like a seasoned meteorologist who is certain it will be sunny. A high score suggests the model is confused, like a weather app forecasting snow in the desert—it’s completely baffled by what it sees.

At its core, a language model predicts the next word in a sentence based on the words that came before it. Perplexity quantifies the uncertainty of these predictions over an entire dataset. A model that consistently gives a high probability to the correct next word will have a low perplexity. This is why a lower score is always better; it shows the model has a solid grasp of the language’s patterns, grammar, and structure.

How to Interpret Perplexity Scores

Interpreting a perplexity score isn’t a one-size-fits-all situation; it’s all about context. There is no universal “good” perplexity number because it depends heavily on the complexity of the text. For instance, a model tested on simple children’s stories will naturally have a much lower perplexity than one evaluated on dense legal documents. The key is to use it for comparison: when testing two models on the same dataset, the one with the lower perplexity is better at predicting that specific kind of text.

Perplexity is especially useful during the model development cycle. Data science teams rely on it to track progress and guide their decisions. If you’re experimenting with different training methods, you can measure perplexity after each run. A drop in the score signals that your changes are improving the model’s fundamental understanding of language. This iterative feedback loop is a critical part of learning how to fine-tune LLMs for optimal performance.

The mathematical formula for perplexity reveals its direct link to probability. As the screenshot below from Wikipedia shows, it’s calculated from the likelihood the model assigns to a sequence of words.

Simply put, the formula shows that a model that is less “surprised”—meaning it assigns higher probabilities to the actual words in the text—will achieve a lower perplexity score.

To put these scores into perspective, here’s a look at how perplexity values typically correspond to different types of language models and their performance levels.

Perplexity Score Comparison Across Model Types

A comparison of typical perplexity ranges for different language model architectures and their performance implications

| Model Type | Typical Perplexity Range | Performance Level | Best Use Cases |

|---|---|---|---|

| Traditional N-gram Models | 200 – 1000+ | Very Low | Basic text prediction, speech recognition in limited domains. |

| Early Recurrent Neural Networks (RNNs) | 80 – 200 | Low | Simple language modeling, text generation with short-term dependencies. |

| LSTM/GRU-based Models | 40 – 80 | Medium | Machine translation, sentiment analysis, more complex text generation. |

| Early Transformer Models (e.g., GPT-2) | 20 – 40 | High | Advanced content creation, chatbots, code generation. |

| State-of-the-Art LLMs (e.g., GPT-3/4) | 10 – 20 | Very High | Sophisticated conversational AI, summarization, complex problem-solving. |

As the table illustrates, the evolution of model architecture has driven perplexity scores down significantly, marking a clear improvement in the ability of models to understand and predict language patterns.

The Limits of Perplexity

While perplexity is a foundational metric, it’s important to recognize its limitations. It only measures how well a model predicts the next word, not whether the text is factually accurate, creative, or actually helpful. A model can produce grammatically flawless sentences that are complete nonsense or factually incorrect and still get a good perplexity score.

For this reason, perplexity should be seen as just one piece of the evaluation puzzle. Today’s leading models can achieve perplexity scores as low as 10 to 15 on standard benchmarks, a huge leap from scores above 50 just a few years ago. But this number doesn’t tell you anything about human-aligned qualities. That’s why other metrics like BLEU and ROUGE are used alongside perplexity, especially for tasks like translation and summarization where you need to measure overlap with human-written text. If you’re interested in a broader view, you can explore more LLM evaluation metrics on wandb.ai. This underscores the need for a balanced evaluation strategy, combining multiple metrics to get a complete picture of a model’s true capabilities.

BLEU and ROUGE: The Art of Measuring Text Similarity

While perplexity gauges a model’s confidence, it doesn’t tell us if the generated text is actually useful or accurate for a specific task. To bridge this gap, we turn to metrics that measure similarity. Imagine you have two editors reviewing an article. One meticulously checks if every translated phrase is precise, while the other ensures the summary captures the main ideas. This is the core difference between BLEU (Bilingual Evaluation Understudy) and ROUGE (Recall-Oriented Understudy for Gisting Evaluation).

These metrics move beyond pure probability to answer a more practical question: how closely does the model’s output match a human-written reference? They work by comparing overlapping sequences of words, known as n-grams. An n-gram is simply a contiguous sequence of ‘n’ items from a given sample of text. For instance, in “the quick brown fox,” “the quick” is a 2-gram (or bigram), and “the quick brown” is a 3-gram (or trigram).

BLEU: The Precision-Focused Translator

Originally created for machine translation, BLEU acts like the strict, precision-focused editor. It measures how many n-grams from the model’s output also appear in a high-quality human reference translation. A higher BLEU score signals greater precision and similarity to the reference. For translation tasks, a score between 20 and 40 is often considered a sign of solid quality.

However, BLEU’s strictness can be a weakness. It penalizes valid synonyms and different sentence structures. If a model generates “the fast brown fox” when the reference is “the quick brown fox,” BLEU would score it lower, even though the meaning is identical. This is a common trade-off with n-gram-based metrics. The underlying architecture that enables such complex text generation is fascinating, and you can learn more about the transformer architecture that powers LLMs in our detailed guide.

ROUGE: The Recall-Minded Summarizer

ROUGE, on the other hand, is the editor focused on capturing the essence. It measures recall, assessing how many n-grams from the human reference text appear in the model’s output. This makes it ideal for evaluating summarization tasks. We want to know if the model’s summary includes the key points from the original document, and ROUGE helps us quantify that. A high ROUGE score suggests the summary is thorough.

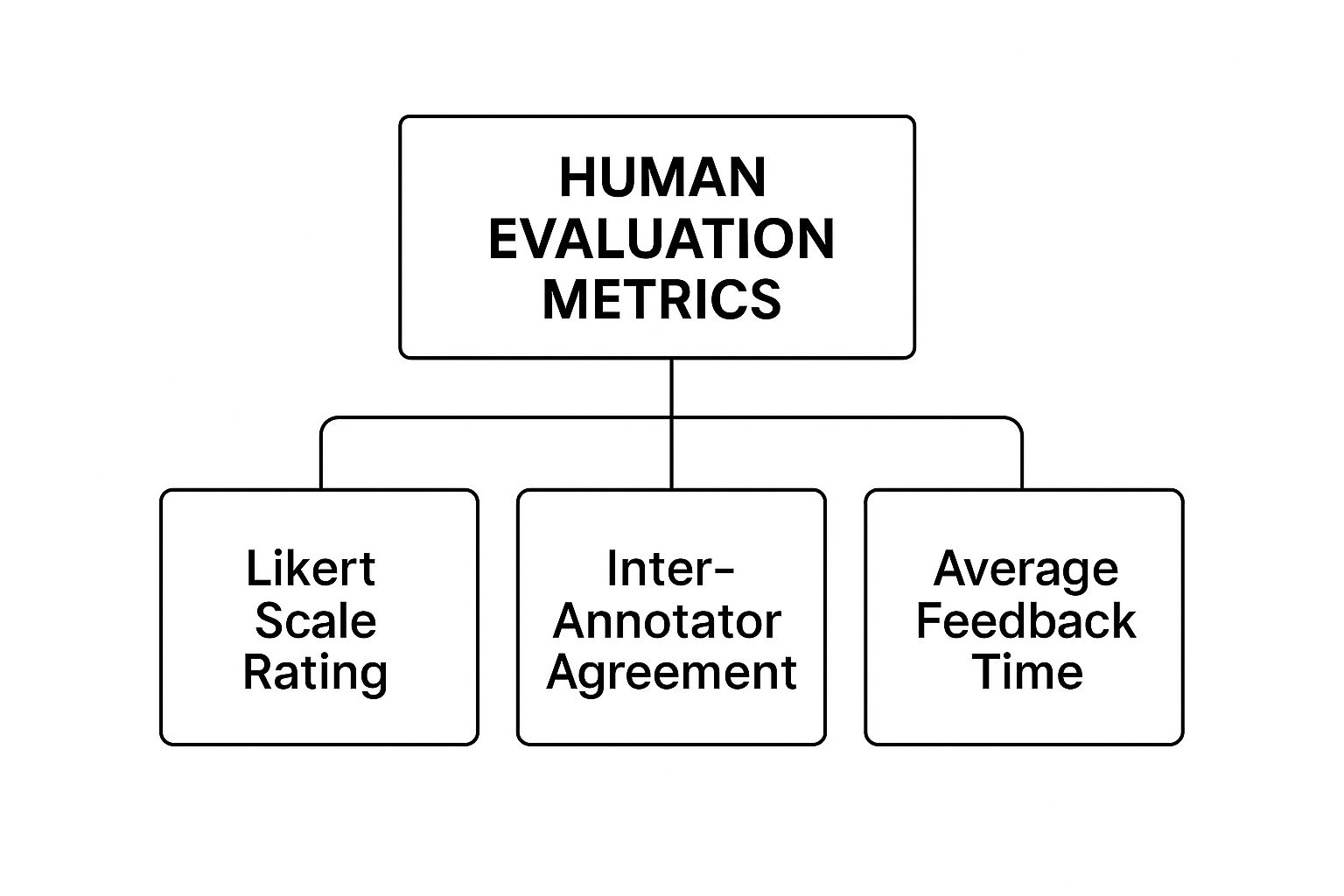

The following infographic illustrates the different human evaluation methods used to create the reference texts for metrics like BLEU and ROUGE.

This diagram shows that creating reliable “ground truth” references depends on structured human feedback, which ensures the benchmarks we test against are consistent and high-quality.

To help you choose the right tool for the job, here’s a breakdown of how BLEU and ROUGE compare.

BLEU vs ROUGE: When to Use Which Metric

A comprehensive comparison of BLEU and ROUGE metrics, their applications, scoring ranges, and optimal use cases

| Metric | Primary Focus | Typical Score Range | Best Applications | Limitations |

|---|---|---|---|---|

| BLEU | Precision (how many generated n-grams are in the reference) | 0-1 (often shown as 0-100) | Machine Translation, Code Generation | Struggles with synonyms and syntactic variety; penalizes valid rephrasing. |

| ROUGE | Recall (how many reference n-grams are in the generation) | 0-1 | Text Summarization, Question Answering | Can reward longer, less concise outputs that happen to contain reference words. |

Ultimately, both BLEU and ROUGE are powerful, efficient, and easy to interpret. They provide a quick, quantifiable snapshot of a model’s performance on specific text-generation tasks. While they have limitations and can’t fully grasp semantic meaning, they remain essential tools in any evaluation toolkit.

Beyond Word Matching: Semantic Similarity That Gets Meaning

Metrics like BLEU and ROUGE are great for measuring surface-level text overlap, but they have a major blind spot: they don’t understand what the words actually mean. They’re like a strict proofreader who flags a perfectly good sentence just because it uses different words than the answer key. A model could rephrase a famous quote with perfect clarity and still get a low score for not matching the original text word-for-word. This limitation shows why we need more advanced LLM evaluation metrics that can grasp meaning.

To truly measure a model’s abilities, we need to move beyond simple word counting. The next step in evaluation is semantic similarity—figuring out if two pieces of text convey the same idea, regardless of the specific words used. This approach is much closer to how humans communicate and is key to building AI that feels genuinely intelligent and helpful.

The Power of Embeddings in Evaluation

The core technology making this possible is embeddings. Think of an embedding as a rich, numerical fingerprint for a piece of text. Instead of seeing words as simple strings of characters, embedding models map them into a multi-dimensional space. In this space, words with similar meanings—like “king” and “queen”—are positioned closer together than unrelated words like “bicycle.”

By turning both the model’s output and the reference answer into these numerical fingerprints, we can measure the distance between them. A smaller distance suggests a higher degree of semantic similarity. This method is incredibly useful because it recognizes synonyms, different phrasing, and contextual details that n-gram-based metrics would completely miss. It’s the difference between checking if two grocery lists have the exact same items versus checking if they both contain the ingredients for the same recipe.

Human Evaluation: The Ultimate Ground Truth

While automated semantic metrics are a big leap forward, they still can’t capture everything. Qualities like creativity, tone, harmlessness, and trustworthiness are complex human concepts that require human judgment. This is where human evaluation frameworks are essential. These frameworks provide structured ways for human raters to score model outputs based on specific, qualitative criteria.

Instead of a simple pass or fail, human evaluators might use a Likert scale (e.g., rating helpfulness from 1 to 5) or answer direct questions about the output’s quality. This process yields rich, qualitative data that reveals insights automated systems can’t provide.

LLM-as-a-Judge: Scaling Human-Like Evaluation

Gathering human feedback is effective, but it is also time-consuming and expensive. To overcome this challenge, a new technique called LLM-as-a-judge has appeared. This approach uses a powerful, state-of-the-art LLM (the “judge”) to evaluate the outputs of another model (the “student”). The judge is given a clear rubric and asked to score the student’s answer based on criteria like correctness, helpfulness, and safety.

This method combines the speed of automation with the detailed understanding of a human rater. For example, Amazon Bedrock now offers LLM-as-a-judge features that provide not just a score but also a natural language explanation for its reasoning. According to their documentation, this allows teams to get human-like quality evaluation at a fraction of the cost and time. These advanced methods are becoming critical for measuring how well models align with human preferences and ensuring they are not just technically accurate but also genuinely useful and responsible.

Matching Metrics to Reality: Task-Specific Evaluation Done Right

Using a single set of LLM evaluation metrics for every AI task is like trying to measure distance with a thermometer—it might give you a number, but it’s completely missing the point. A model’s success isn’t an absolute value; it’s defined entirely by the job it’s designed to do. A customer service chatbot and a creative writing assistant require fundamentally different yardsticks for success, yet many teams fall into the trap of applying a one-size-fits-all evaluation strategy. This approach often leads to optimizing for metrics that don’t align with user satisfaction or business goals.

The key is to tailor your evaluation to the specific use case. The absence of a definitive “ground truth” for many generative tasks makes this even more critical. There isn’t just one “correct” summary or one “perfect” creative story, which is why matching your metrics to the desired outcome is so important for building reliable AI systems. A robust evaluation process must be flexible, acknowledging that what matters for one application might be irrelevant for another.

Frameworks for Different AI Tasks

To build an effective evaluation suite, you must first define what “good” looks like for your specific application. This means moving beyond generic academic benchmarks and focusing on the qualities that matter most to your users.

Let’s explore how evaluation criteria shift based on the task:

- Conversational AI (Chatbots): For a customer service bot, metrics like helpfulness and faithfulness (factual consistency with a knowledge source) are paramount. Users need correct answers and a pleasant interaction. You might measure session completion rates or use human raters to score conversation quality on a 1-5 scale. The goal is efficient problem resolution, not literary prose.

- Content Generation (Creative Writing): In contrast, a creative writing assistant needs to be evaluated on creativity, coherence, and adherence to tone. Metrics like BLEU would be counterproductive here, as they penalize unique phrasing. Instead, human evaluation or an LLM-as-a-judge approach, scoring outputs on originality and style, would be far more insightful.

- Summarization Tools: Here, a blend of automated and human-centric metrics works best. ROUGE is a great starting point to ensure the summary captures key information from the source text. However, it should be complemented by human reviews for conciseness and readability, as ROUGE can sometimes reward longer, less-focused outputs.

- Question-Answering (Q&A) Systems: For Q&A, especially in a Retrieval-Augmented Generation (RAG) system, accuracy is king. Metrics like retrieval precision (are the sourced documents relevant?) and faithfulness are crucial. You need to verify that the generated answer is factually grounded in the retrieved context to prevent hallucinations.

- Code Completion Models: Success for a code assistant is measured by functional correctness. Does the generated code compile and run without errors? The HumanEval benchmark is a perfect example, as it tests whether a model’s code can pass specific unit tests. It’s a clear, objective measure of utility for a developer.

This task-specific approach is central to modern AI development. The relationship between natural language processing and the capabilities of LLMs has evolved, demanding more nuanced evaluation. For a deeper look, our guide on understanding the correlation between NLP and LLMs provides more context.

By carefully selecting your LLM evaluation metrics to reflect real user needs, you can avoid optimizing for the wrong targets. Building a purpose-driven evaluation framework ensures you are not just building a technically impressive model, but one that is genuinely effective and valuable in the real world.

Building Your Evaluation System: From Metrics to Results

Knowing individual LLM evaluation metrics is like having a toolbox full of different hammers—each is useful for a specific job, but you need a complete strategy to build something sturdy. The real challenge isn’t just understanding perplexity or BLEU scores; it’s combining them into a coherent evaluation system that guides better model development. A truly effective system moves beyond isolated scores and creates a pipeline that balances automated efficiency with essential human insight.

The goal is to build a process that scales from early prototypes all the way to production models, providing clear signals at every stage. This means establishing meaningful baselines, managing evaluation data systematically, and communicating results in a way that drives action, not just more data points.

Designing Your Evaluation Pipeline

A strong evaluation pipeline is not a single action but a continuous workflow. Successful AI teams design these pipelines to be both thorough and adaptable. Here’s a practical approach to structuring your own:

- Establish a Strong Baseline: Before you can measure improvement, you need a starting point. Your baseline could be an existing open-source model, a previous version of your own model, or even human-generated answers. Benchmarking performance against a clear baseline is the only way to know if your changes are making a real difference.

- Combine Automated and Human-Centric Metrics: Relying solely on automated metrics like ROUGE can be misleading. They might tell you a summary is factually dense but miss that it’s unreadable. Your pipeline should integrate these fast, scalable metrics for initial checks and then layer in human or LLM-as-a-judge evaluations for nuanced qualities like helpfulness, tone, and safety.

- Manage Your Datasets Carefully: Your evaluation is only as good as the data you test against. It’s vital to maintain a high-quality, diverse, and representative “golden dataset” for testing. This dataset should mirror the real-world scenarios your model will face. Keep it separate from your training data to avoid contamination.

From Data to Decisions: A Scalable Workflow

Creating a scalable workflow means thinking about how evaluation fits into your broader development cycle. It’s not a one-off check before deployment; it’s an ongoing process.

A key challenge many teams face is integrating evaluation seamlessly into their AI workflows. The process needs to be repeatable and efficient. This involves setting up automated runs that trigger evaluations whenever a new model version is trained. The results should be logged, tracked over time, and easily visualized so that developers can get instant feedback. This is the core principle behind continuous evaluation, which helps catch performance regressions early.

Many modern platforms are designed to address this. For instance, some tools allow for the creation of synthetic data generation (SDG) pipelines that automatically create question-answer pairs from your documents to build robust test sets. This speeds up the creation of evaluation data, a process that is often a major bottleneck due to the cost and time of human annotation.

Common Pitfalls and How to Avoid Them

Building an effective evaluation system also means learning from common mistakes. One of the biggest pitfalls is overfitting to specific metrics. If your team focuses exclusively on improving a single score, you risk developing a model that is good at the test but bad at its actual job. For instance, optimizing only for BLEU in a translation task might lead to robotic, overly literal translations that miss cultural nuances.

Another trap is using rigid evaluation frameworks that cannot adapt. As models evolve, so must our methods for testing them. Your system should be flexible enough to incorporate new benchmarks, custom metrics, and changing business requirements. The most successful teams treat their evaluation system as a living product that evolves alongside their models. By adopting this mindset, you build a process that doesn’t just generate reports, but one that actively improves your AI.

Your Next Steps: Implementing Evaluation That Works

Moving from theory to a real-world evaluation system requires a practical, step-by-step approach. The goal is to build a process that gives you clear insights without burying your team in complexity. Whether you’re working with limited resources or building an enterprise-grade system, the key is to start small and scale intelligently. Your first move should be to create a simple, repeatable process that combines the speed of automation with the precision of human oversight, turning abstract LLM evaluation metrics into concrete results.

A Practical Starting Framework

You don’t need an elaborate system right out of the gate. A great starting point is to establish a “golden dataset”—a carefully chosen collection of prompts and ideal responses that reflect your most critical use cases. Think of this as your unchanging standard, the benchmark you’ll use to measure all future improvements.

With this dataset in hand, you can roll out a two-part evaluation strategy:

- Automated First Pass: For tasks like summarization, use efficient metrics like ROUGE. For code generation, you can use automated checks to verify functional correctness. This first layer acts as a quick filter, flagging major performance problems and giving you a fast, high-level overview.

- Human-in-the-Loop Review: Select a subset of your data for human reviewers. This is especially important for outputs flagged by automated checks or for those related to high-stakes tasks. This step is essential for judging qualities like helpfulness, tone, and safety—areas where automated metrics often struggle.

This blended approach delivers both wide coverage and deep, qualitative understanding. It ensures you catch obvious errors quickly while also grasping the subtle aspects of model performance that truly shape the user experience.

Scaling Your Evaluation Capabilities

Once your baseline process is running smoothly, it’s time to scale up and introduce more advanced methods. A key step here is to move from manual spot-checks to a more integrated workflow. The LLM-as-a-judge approach is a fantastic way to scale human-like feedback without the high cost and time of purely manual review. By using a powerful model armed with a clear rubric, you can automate nuanced evaluations for qualities like factual correctness and coherence.

This method is especially effective for Retrieval-Augmented Generation (RAG) systems, where you need to confirm both the accuracy of the retrieved information and the quality of the final generated answer.

As your evaluation process matures, consider developing a detailed checklist to ensure consistency and make your system audit-ready. This document should track everything from data sources to the specific metrics applied for each task. The ultimate objective is a continuous evaluation system built directly into your development cycle. This allows you to monitor model performance over time and make data-driven decisions that lead to real improvements.

At DATA-NIZANT, we are focused on helping you handle the complexities of AI development. Check out our in-depth articles to find the expert insights you need to build, evaluate, and deploy successful AI solutions.