Tracing the Evolution from Neural Networks to Transformers and the Rise of LLMs in Modern NLP

🧠 From Syntax to Semantics: How Neural Networks Empower NLP and Large Language Models

In 2019, we explored the foundations of neural networks: how layers of interconnected nodes, loosely inspired by neurons in the brain, learn to extract patterns from data. Since then, one area where neural networks have truly transformed the landscape is Natural Language Processing (NLP).

What was once rule-based and statistical has now evolved into something more fluid, contextual, and surprisingly human-like—thanks to Large Language Models (LLMs) built atop deep neural architectures.

The NLP Challenge: More Than Just Words

Traditional NLP systems relied heavily on handcrafted rules, syntactic parsing, and shallow learning models. While effective for basic tasks like tokenization and POS tagging, they struggled with semantics, ambiguity, and contextual understanding.

“The bank approved the loan despite the flood.”

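Is “bank” a financial institution or a riverbank? Older approaches often couldn’t tell. Static word embeddings such as word2vec, for example, assign “bank” a single vector no matter where it appears. Contextual embeddings produced by deep networks instead compute a different vector for each occurrence, so modern NLP systems can grasp subtle meanings and disambiguate words based on the surrounding text.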
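To make the static-versus-contextual distinction concrete, here is a toy sketch. The 2-D vectors are invented for illustration, and the "contextualization" is a deliberately crude stand-in (a 50/50 blend with the average of neighbouring words) for the learned attention layers real models use. It only shows the key idea: a static lookup returns the same vector for “bank” every time, while a context-dependent vector moves toward whichever sense the neighbours suggest.

```python
import math

# Hypothetical 2-D word vectors, invented for illustration only:
# dimension 0 ~ "finance", dimension 1 ~ "geography".
STATIC = {
    "bank": [0.5, 0.5],
    "loan": [1.0, 0.0],
    "money": [0.9, 0.1],
    "river": [0.0, 1.0],
    "water": [0.1, 0.9],
}


def static_vector(word):
    """A static embedding: one fixed vector per word, whatever the context."""
    return STATIC[word]


def contextual_vector(word, context):
    """Toy contextualization: blend the word's vector 50/50 with the mean of
    its context words' vectors. Real models learn this mixing with attention."""
    own = STATIC[word]
    ctx = [STATIC[w] for w in context if w in STATIC]
    if not ctx:
        return list(own)
    mean = [sum(v[i] for v in ctx) / len(ctx) for i in range(len(own))]
    return [(a + b) / 2 for a, b in zip(own, mean)]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


finance_bank = contextual_vector("bank", ["loan", "money"])
river_bank = contextual_vector("bank", ["river", "water"])
print(cosine(finance_bank, STATIC["loan"]) > cosine(finance_bank, STATIC["river"]))  # True
print(cosine(river_bank, STATIC["river"]) > cosine(river_bank, STATIC["loan"]))  # True
```

The static vector for “bank” sits exactly between the two senses; only the context-aware version leans one way or the other.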

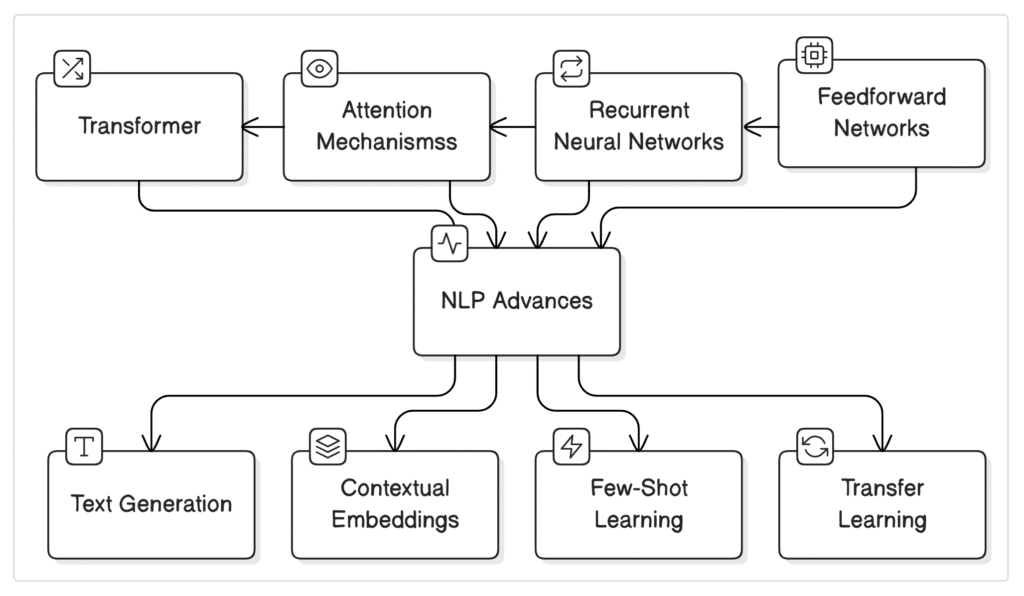

Enter Deep Neural Networks

Neural networks, first Recurrent Neural Networks (RNNs) and later Transformers, allowed models to “read” sentences (RNNs one token at a time, Transformers all tokens in parallel) and to learn language as a dynamic sequence of relationships. Key milestones included:

- Sequence-to-sequence models for translation

- Contextual embeddings with BERT (2018–2019)

- Transfer learning with large pretrained models
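The sequential reading that RNNs perform can be sketched in a few lines. This is a minimal Elman-style recurrence, h_t = tanh(W_x x_t + W_h h_{t-1} + b), written in plain Python. The weights and token vectors are hypothetical values chosen only for illustration; a real RNN learns its weights and works on much larger vectors.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """One Elman-style RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(W_x[i][j] * x[j] for j in range(len(x)))
            + sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
            + b[i]
        )
        for i in range(len(b))
    ]


def run_rnn(inputs, W_x, W_h, b):
    """Consume token vectors strictly left to right, carrying a hidden state."""
    h = [0.0] * len(b)
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states


# Hypothetical weights and 2-D token vectors, chosen only for illustration.
W_x = [[0.5, -0.3], [0.2, 0.8]]
W_h = [[0.1, 0.4], [-0.6, 0.3]]
b = [0.0, 0.1]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

forward = run_rnn(tokens, W_x, W_h, b)
backward = run_rnn(list(reversed(tokens)), W_x, W_h, b)
print(forward[-1], backward[-1])  # different: word order changes the final state
```

Because each step depends on the previous hidden state, the loop cannot be parallelized across positions, which is exactly the bottleneck Transformers removed.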

The Transformer Revolution

By 2021, widely used models such as GPT-3, T5, and DistilBERT (a smaller, distilled version of BERT) all relied on the Transformer architecture introduced by Vaswani et al. in 2017.

Transformers brought major shifts:

- Self-attention mechanisms for understanding full context

- Parallel processing for scalability

- Massive pretraining on web-scale corpora
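The self-attention at the heart of the Transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, from Vaswani et al. The sketch below implements that formula in plain Python under one simplifying assumption: Q, K, and V are all set to the raw token vectors X, whereas a real Transformer first multiplies X by learned projection matrices and runs several attention heads.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed row by row.

    Each query row is independent of the others, which is what lets real
    implementations process all positions in parallel as one matrix product.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return outputs, weights


# Simplification: Q = K = V = X. A real Transformer first multiplies X by
# learned projection matrices W_Q, W_K, W_V and uses multiple heads.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(X, X, X)
for row in attn:
    print([round(p, 3) for p in row])
```

Each printed row is one token's attention distribution over the whole sentence, so every output vector is built from the full context rather than only from the tokens that came before it.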

Neural Networks → Language Understanding

| Neural Network Element | NLP Impact |
|---|---|
| Hidden layers | Capture complex syntactic and semantic features |
| Backpropagation | Computes the gradients that train every weight, including when fine-tuning for tasks like summarization |
| Attention weights | Score how much each word matters to every other word in context |
| Embedding layers | Map tokens to dense vectors that later layers make context-aware |
| Training with massive data | Enables pretraining on Common Crawl, Wikipedia, etc. |
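Backpropagation is the chain rule applied layer by layer. The smallest case that still shows the forward pass, backward pass, and weight update is a single logistic neuron trained with cross-entropy loss, sketched below. The input, label, and learning rate are hypothetical; a deep network repeats the same backward step through every layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w, b, x, y):
    """Binary cross-entropy of one logistic neuron on a single example."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def train_step(w, b, x, y, lr=0.5):
    """Forward pass, backward pass via the chain rule, then a gradient-descent update."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # forward: pre-activation
    p = sigmoid(z)  # forward: predicted probability
    dz = p - y  # backward: dLoss/dz for sigmoid + cross-entropy
    grad_w = [dz * xi for xi in x]  # dLoss/dw_i = dz * x_i
    grad_b = dz  # dLoss/db = dz
    new_w = [wi - lr * g for wi, g in zip(w, grad_w)]
    return new_w, b - lr * grad_b


w, b = [0.0, 0.0], 0.0
x, y = [1.0, 2.0], 1  # hypothetical single training example
before = log_loss(w, b, x, y)
w, b = train_step(w, b, x, y)
after = log_loss(w, b, x, y)
print(before > after)  # True: one step reduces the loss on this example
```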

Large Language Models: Scaling Understanding

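LLMs like OpenAI’s GPT-3 (175B parameters) and Google’s T5 leverage pretrained knowledge to perform a range of NLP tasks with minimal supervision. GPT-3 can take on new tasks through few-shot or zero-shot prompting, while T5 frames every task as text-to-text and selects the task with a short text prefix.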

Common LLM tasks include:

- Question answering

- Text summarization

- Translation

- Structured information extraction

What’s powerful is that the same model, with the same weights, can switch between these tasks when only the prompt changes.
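Switching tasks “by altering the prompt” is, mechanically, just string construction. The sketch below uses a hypothetical `build_prompt` helper and an `Input:`/`Output:` layout that is one common convention, not a format any particular model requires. Passing worked examples gives a few-shot prompt; passing none gives a zero-shot prompt. Sending the string to an actual LLM is out of scope here.

```python
def build_prompt(instruction, examples, query):
    """Assemble a text prompt; an empty `examples` list yields a zero-shot prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Same hypothetical helper, three different tasks: only the text changes.
translate = build_prompt("Translate English to French.", [("cheese", "fromage")], "bread")
summarize = build_prompt(
    "Summarize in one sentence.",
    [],
    "Transformers process all tokens in parallel, which made large-scale pretraining practical.",
)
extract = build_prompt(
    "Extract the company name.", [("Alice joined Acme in 2020.", "Acme")], "Bob left Initech."
)
print(translate)
```

The model would be expected to continue the text after the final `Output:` line; nothing about the model itself changes between the three calls.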

Why This Matters for Practitioners

For engineers and AI practitioners:

- Fine-tuning BERT or GPT yields custom NLP applications without starting from scratch

- Transfer learning dramatically reduces time-to-deploy

- Compression techniques such as pruning, quantization, and knowledge distillation (the approach behind DistilBERT) make on-device use feasible
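Model pruning comes in many variants; the simplest is unstructured magnitude pruning, which zeroes out the weights with the smallest absolute values on the assumption that they contribute least. The sketch below applies it to one hypothetical flat list of layer weights.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the `sparsity` fraction of weights
    with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # how many weights to drop
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(ranked[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


layer = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2]  # hypothetical weights of one layer
print(magnitude_prune(layer, 0.5))  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]
```

In practice, pruning is usually followed by a short round of fine-tuning to recover accuracy, and the zeros only save memory or time when stored in a sparse format or removed in structured blocks. PyTorch ships utilities for this in `torch.nn.utils.prune`.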

What’s Next?

As of late 2021, the NLP landscape is rapidly expanding:

- Emergence of multimodal models (e.g., CLIP, DALL·E)

- Increased focus on explainability and bias mitigation

- Open-source tools like Hugging Face Transformers are lowering the entry barrier

But at its core, the success of modern NLP still comes down to this: Neural networks are the foundation of machine language understanding.

📊 Visual: Evolution of Neural Architectures in NLP