Data Science Project Management That Actually Works: Frameworks, Workflows, and Real-World Best Practices

Why Most Data Science Projects Fail (And How To Beat The Odds Using Data Science Project Management Techniques)

Data science projects offer organizations enormous potential to extract insights from their data and make better-informed decisions. Managing them successfully, however, is notoriously difficult. Traditional project management approaches expect a predictable path from requirements to delivery. Data science work is experimental and iterative, and its outcomes are hard to predict in advance.

Understanding the Challenges

A key reason projects fail is scope creep during exploratory analysis. While searching for hidden patterns, data scientists can lose focus, which causes delays and drift away from the original project goals. Another common issue is managing stakeholder expectations, particularly when model performance falls short of initial projections. Data science isn't magic. Models are built iteratively, and their performance typically improves over successive rounds of refinement rather than arriving fully formed. This calls for ongoing communication and realistic expectation setting with stakeholders. For more information on data governance, see How to master data governance policies.

Furthermore, the inherent uncertainty in data science makes budgets and timelines difficult to estimate. Traditional software development defines scope and deliverables upfront, but data science projects can evolve significantly as new insights emerge. This demands a more adaptable approach to planning and resource allocation. The consequences show up in the failure rate. Statistics show that over 70% of projects don't achieve their intended results, a shortfall attributed to factors such as poor planning, inadequate budget management, and underuse of project management tools. More detailed statistics are available here.

Beating the Odds Through Effective Data Science Project Management

Successfully addressing these challenges requires a specialized approach to data science project management. This involves:

- Clearly Defining Objectives: Begin with a well-defined business problem and translate it into measurable data science objectives. This keeps the project focused and ensures it delivers tangible value.

- Embracing Agile Methodologies: Agile frameworks such as Scrum provide the flexibility to adapt to evolving project needs. Regular sprints and feedback loops maintain momentum and keep the project aligned with stakeholder expectations.

- Building Cross-Functional Teams: Successful data science projects require collaboration between data scientists, business analysts, engineers, and other stakeholders. Clear communication and a shared understanding of project goals are crucial.

- Selecting the Right Tools: Specialized tools can substantially improve project efficiency and reproducibility. Examples include version control (such as Git), experiment tracking (such as MLflow), and model deployment (such as TensorFlow Serving).

- Communicating Effectively: Regularly communicate progress, challenges, and insights to stakeholders. This manages expectations and builds trust throughout the project lifecycle.

By adopting these strategies, organizations can greatly improve the success rate of their data science projects and unlock the full potential of their data. This positions data science project management as a vital bridge between technical complexities and business goals, ensuring that projects deliver real-world impact.

Building Teams That Actually Work Together

Successful data science projects depend on more than just individual talent. They require teams where technical experts and business stakeholders can effectively collaborate. This means carefully considering team dynamics, especially the potential friction between data scientists focused on technical details and business leaders focused on meeting deadlines. However, if managed well, this tension can actually become a source of strength.

For example, data scientists can learn from the business knowledge of stakeholders, making sure their work has practical applications. In turn, business leaders gain a deeper understanding of what the data can and cannot do through collaboration with data scientists.

Defining Roles for Success in Data Science Project Management

One key to successful collaboration is having clearly defined roles. When everyone understands their responsibilities and how they contribute to the project, the entire team benefits. Think of a data science team like an orchestra: each musician has a specific instrument to play, and their combined effort creates a harmonious symphony.

Similarly, in a data science project, distinct roles such as Data Scientist, Project Manager, Business Analyst, and Data Engineer each make a critical contribution. This division of labor allows each team member to focus on their area of expertise, making the team as a whole more efficient.

Communication: The Key to Keeping Everyone Aligned

Equally important is establishing strong communication channels and a regular cadence of updates. Regular meetings, clear reporting structures, and shared platforms for documentation and progress tracking keep everyone informed and aligned. But communication isn't just about frequency. It's also about how effectively information is shared.

Translating technical jargon into language that business stakeholders understand is crucial, because it gives everyone an accurate picture of the project's progress and challenges. Demand for skilled project management is growing. According to PMI's Talent Gap report, there will be a need for 2.3 million project management professionals each year. The report covers project management broadly rather than data science specifically. Even so, it underscores how valuable project management expertise is in guiding data-driven initiatives.

Fostering a Culture of Collaboration in Data Science Project Management

Finally, building an environment where both technical excellence and business impact are valued is crucial. This means fostering mutual respect, open communication, and a shared understanding of the project’s overall objectives. Data scientists and business leaders both need to understand and appreciate each other’s perspectives and priorities.

By creating this collaborative atmosphere, organizations can fully leverage the potential of their data science teams and achieve meaningful results.

Methodologies That Embrace Uncertainty Instead Of Fighting It

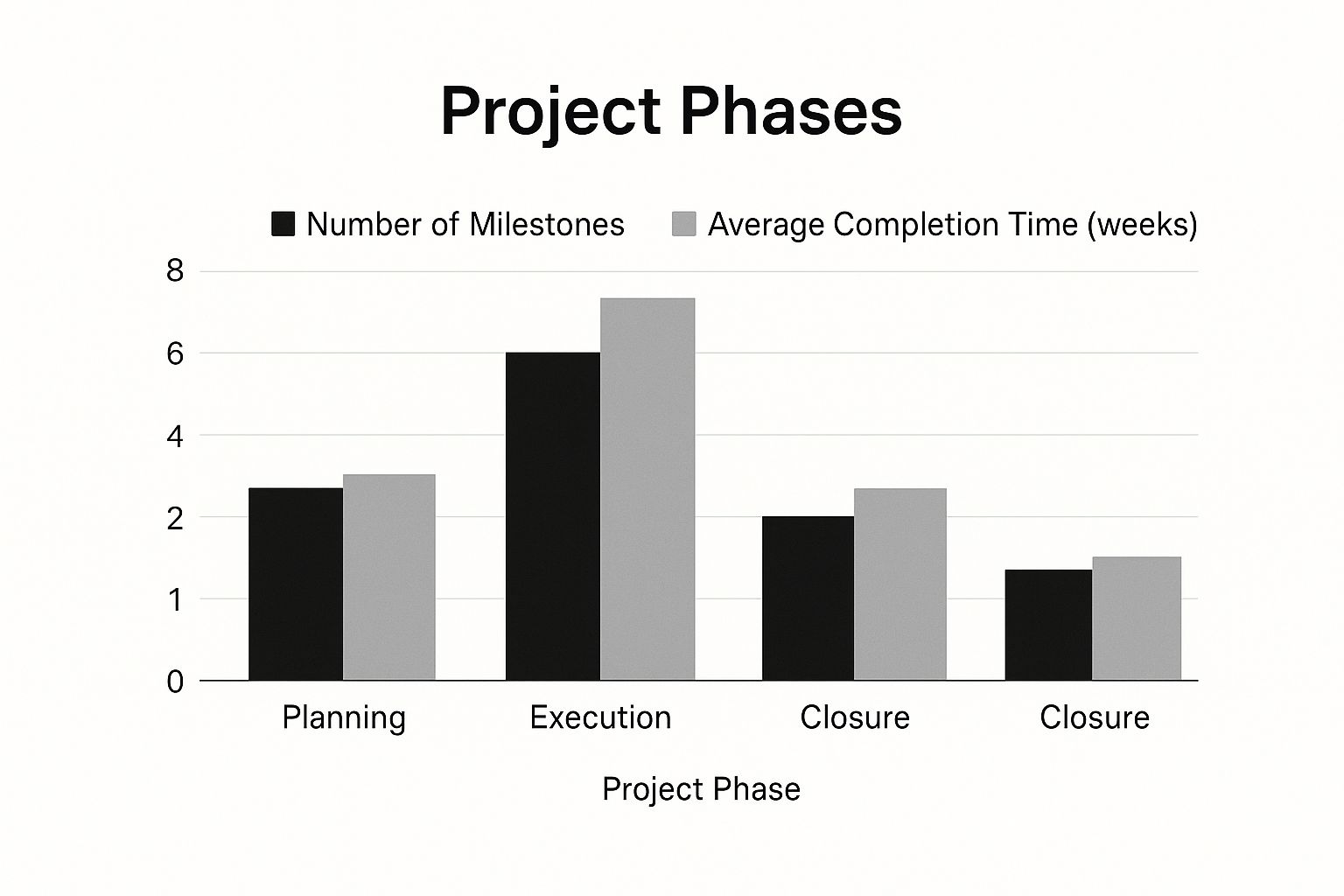

The infographic above illustrates the number of milestones and average completion time across the three project phases: planning, execution, and closure. Notice how the execution phase takes the longest and has the most milestones. This emphasizes the iterative nature of data science. Unexpected discoveries and necessary adjustments frequently occur during this phase.

Traditional, rigid methodologies like waterfall simply aren’t suited for the unpredictable nature of data science. Successful data science teams adapt frameworks like CRISP-DM and Agile to create hybrid approaches. This allows them to maintain scientific rigor while delivering valuable business results. These adaptable methodologies allow for flexibility and embrace the uncertainty inherent in data science, enabling teams to pivot when necessary.

Balancing Structure with Flexibility in Data Science Projects

The key is finding the right balance between structure and flexibility. Too much structure, and the project becomes rigid, unable to adapt to new information. Too little, and the project risks losing focus and direction. Think of it like navigating a ship. You need a charted course (structure), but you also need the ability to adjust your sails to account for shifting winds and currents (flexibility).

For example, a team might use the CRISP-DM framework to give the project an initial structure, outlining the phases from business understanding to deployment. Within each phase, however, they might incorporate Agile principles. This means working in short sprints with regular check-ins and adapting their approach based on the most recent data insights. This blended approach allows for iterative progress while maintaining flexibility.
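The blended approach can be sketched in Python. The sketch assumes a team that splits each CRISP-DM phase into one or more short sprints. It also assumes a failed Evaluation checkpoint sends the work back for another pass through the cycle instead of on to Deployment. The six phase names are the standard CRISP-DM phases. The `plan_sprints` and `replan_after_evaluation` helpers, the per-phase sprint counts, and the loop-back rule are hypothetical illustrations, not part of CRISP-DM itself.

```python
from __future__ import annotations

from dataclasses import dataclass

# The six phases of CRISP-DM, in their standard order.
CRISP_DM_PHASES = [
    "Business Understanding",
    "Data Understanding",
    "Data Preparation",
    "Modeling",
    "Evaluation",
    "Deployment",
]


@dataclass
class Sprint:
    number: int
    phase: str
    iteration: int  # which pass through the CRISP-DM cycle this sprint belongs to


def plan_sprints(sprints_per_phase: dict[str, int], iteration: int = 1, start: int = 1) -> list[Sprint]:
    """Lay out short sprints inside each CRISP-DM phase (hypothetical helper).

    Phases missing from `sprints_per_phase` get a single sprint.
    """
    sprints = []
    number = start
    for phase in CRISP_DM_PHASES:
        for _ in range(sprints_per_phase.get(phase, 1)):
            sprints.append(Sprint(number, phase, iteration))
            number += 1
    return sprints


def replan_after_evaluation(plan: list[Sprint], goals_met: bool, sprints_per_phase: dict[str, int]) -> list[Sprint]:
    """Assumed loop-back rule: if the Evaluation checkpoint misses its goals,
    drop the pending Deployment sprints and schedule another pass through the cycle."""
    if goals_met:
        return plan
    kept = [s for s in plan if s.phase != "Deployment"]
    return kept + plan_sprints(
        sprints_per_phase, iteration=kept[-1].iteration + 1, start=kept[-1].number + 1
    )


if __name__ == "__main__":
    # Hypothetical plan: two sprints each for Data Preparation and Modeling.
    counts = {"Data Preparation": 2, "Modeling": 2}
    plan = plan_sprints(counts)
    print(len(plan))  # 8 sprints in the first pass
    plan = replan_after_evaluation(plan, goals_met=False, sprints_per_phase=counts)
    print(len(plan))  # 15: the 7 kept sprints plus a fresh 8-sprint pass
```

The phase order stays fixed, which supplies the structure. Sprint counts and loop-backs vary from project to project, which supplies the flexibility.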

Managing Timelines in Uncertain Environments

Managing timelines in data science projects can be challenging, as the data often dictates the project’s direction. It’s virtually impossible to know exactly what the data will reveal upfront. One strategy for effective timeline management is breaking down the project into smaller, more manageable parts. This granular approach allows for greater predictability and control.

For each sprint, the team sets realistic goals based on current knowledge and focuses on achieving those specific objectives. The overall plan is adjusted as new information comes to light. This iterative process promotes transparency and allows for mid-course corrections, which is crucial in data science. It provides the necessary flexibility within a structured framework, a key ingredient for successful data science projects.
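One simple way to adjust the overall plan as new information comes to light is to re-forecast after every sprint. The forecast is based on the work the team has actually completed so far. The Python sketch below assumes the backlog is sized in abstract units, such as story points, and that recent throughput is a reasonable guide to the near future. Both the unit and the `forecast_remaining_sprints` helper are illustrative assumptions rather than a prescribed method.

```python
from __future__ import annotations

import math
from statistics import mean


def forecast_remaining_sprints(completed_per_sprint: list[float], remaining_work: float, window: int = 3) -> int:
    """Estimate how many more sprints the remaining backlog will take.

    Only the last `window` sprints are averaged, so the forecast tracks the
    team's current pace as the project evolves.
    """
    if remaining_work <= 0:
        return 0
    recent = completed_per_sprint[-window:]
    if not recent:
        raise ValueError("need at least one completed sprint to forecast")
    throughput = mean(recent)
    if throughput <= 0:
        raise ValueError("recent throughput is not positive, so no forecast is possible")
    return math.ceil(remaining_work / throughput)


if __name__ == "__main__":
    # Hypothetical history: work completed in each of the first four sprints.
    history = [8, 5, 6, 7]
    print(forecast_remaining_sprints(history, remaining_work=40))  # 7
    # After a discovery adds scope, re-run the forecast with the larger backlog.
    print(forecast_remaining_sprints(history, remaining_work=52))  # 9
```

Averaging only the most recent sprints is a design choice. It lets the estimate respond to what the team has learned, at the cost of some sprint-to-sprint noise.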

The table below compares how different methodologies apply to data science projects, summarizing the key strengths and weaknesses of each approach.

| Methodology | Best For | Key Strengths | Potential Challenges | Timeline Flexibility |
|---|---|---|---|---|
| Waterfall | Well-defined, predictable projects | Clear structure, easy to manage | Inflexible, doesn’t handle changes well | Low |
| Agile | Projects with evolving requirements | Adaptable, iterative development | Requires strong communication, can be complex | High |
| CRISP-DM | Data science projects | Focuses on the data science process, iterative | Can be time-consuming, requires domain expertise | Medium |
| Hybrid (Agile + CRISP-DM) | Complex data science projects | Combines structure and flexibility | Requires careful planning and coordination | High |

This table provides a concise overview of how these methodologies handle uncertainty and project timelines. The hybrid approach often offers the best solution for the dynamic nature of data science.

Checkpoints That Make Sense for Experimental Work

Traditional project checkpoints usually focus on deliverables. In data science, however, a critical output might be a new understanding of the problem, even if it means abandoning the original hypothesis. Checkpoints should therefore be viewed as opportunities to evaluate progress, validate assumptions, and make informed decisions about the project’s direction.

These checkpoints might include:

- Regular meetings to discuss findings

- Presentations to stakeholders

- Internal documentation of insights and decisions

The goal is to foster frequent communication and iterative evaluation. These practices enable the team to adapt to evolving insights and make course corrections, ultimately contributing to project success.

Tools And Technology That Make The Difference

The sheer number of available tools for data science project management can be daunting. Many platforms claim to be the ideal solution, but how can you discern which ones truly deliver? Effective data science project management relies heavily on choosing technology that simplifies workflows and promotes teamwork. This involves carefully assessing tools, gaining team acceptance, and confirming that your tech investments lead to better project results.

Essential Components of a Data Science Technology Stack

High-performing data science teams use a combination of tools to handle the intricacies of their projects. Key components include:

- Version Control: Git is fundamental for tracking changes to code, which makes work reproducible and collaboration easier among team members. Data and model files are often too large for plain Git, so they are commonly versioned with companions such as Git LFS or DVC.

- Experiment Tracking: Platforms like MLflow help manage the many experiments conducted during model development. Tracking parameters, metrics, and artifacts makes it simpler to compare runs and choose the best-performing model.

- Collaboration Platforms: Tools like Slack or Microsoft Teams facilitate communication and knowledge sharing within the team, keeping everyone coordinated and up to date throughout the project.

- Project Management Software: Traditional project management tools can be adapted for data science projects. Choosing a platform flexible enough to handle the iterative nature of data science is crucial.
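The sketch below illustrates the core idea behind experiment tracking in plain Python, with no MLflow dependency. Each run records its parameters, its metrics, and a fingerprint of the exact data it used. That makes runs easy to compare and the best one recoverable later. The `ExperimentLog` class and its methods are hypothetical stand-ins for illustration; they are not MLflow's API. Hashing the dataset with SHA-256 is one simple way, among others, to tie a result to a specific data version.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


def fingerprint(data: bytes) -> str:
    """Short SHA-256 content hash identifying the exact dataset a run used."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass
class Run:
    run_id: int
    params: dict
    metrics: dict
    data_hash: str


@dataclass
class ExperimentLog:
    """Hypothetical in-memory experiment tracker (illustration only)."""
    runs: list[Run] = field(default_factory=list)

    def log_run(self, params: dict, metrics: dict, data: bytes) -> Run:
        # Copy the dicts so later edits by the caller don't alter the record.
        run = Run(len(self.runs) + 1, dict(params), dict(metrics), fingerprint(data))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        candidates = [r for r in self.runs if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run recorded metric {metric!r}")
        chooser = max if higher_is_better else min
        return chooser(candidates, key=lambda r: r.metrics[metric])


if __name__ == "__main__":
    log = ExperimentLog()
    data_v1 = b"customer_id,churned\n1,0\n2,1\n"  # hypothetical toy dataset
    # Hypothetical parameters and scores for two runs on the same data.
    log.log_run({"max_depth": 3}, {"f1": 0.71}, data_v1)
    log.log_run({"max_depth": 6}, {"f1": 0.78}, data_v1)
    best = log.best_run("f1")
    print(best.run_id, best.params, best.data_hash)
```

Suppose two runs share a `data_hash` but score differently. The difference then comes from the parameters or code rather than from a silent change in the data.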

Evaluating Tools and Getting Team Buy-In

Choosing the right tools is only the first step. Successfully integrating them into the team’s workflow requires thoughtful planning and open communication. Some practical approaches include:

- Piloting New Tools: Before fully committing to a new platform, begin with a small pilot project. This lets the team assess the tool's usefulness and spot potential difficulties in a low-stakes setting.

- Gathering Team Feedback: Include the team in the selection process. Their input is vital to ensure that the chosen tools meet their needs and will be readily adopted.

- Providing Training and Support: Offer thorough training on any new tools so that everyone knows how to use them effectively. Ongoing support and troubleshooting resources can also ease the transition.

Measuring the Impact of Technology Investments

The main objective of adopting new technology is to improve project outcomes. Measuring the effect of these investments involves tracking key metrics, such as:

- Project Completion Rate: Are projects finishing on time and within budget?

- Model Performance: Are the models achieving the targeted accuracy and efficiency?

- Team Productivity: Are team members working more efficiently and collaboratively?
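The first metric in the list above is straightforward to compute once each finished project records whether it met its deadline and its budget. The following is a minimal Python sketch. It assumes hypothetical `Project` records with planned and actual end dates and costs; the field names are illustrative, not a standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Project:
    name: str
    planned_end: date
    actual_end: date
    budget: float
    actual_cost: float

    @property
    def on_time(self) -> bool:
        return self.actual_end <= self.planned_end

    @property
    def within_budget(self) -> bool:
        return self.actual_cost <= self.budget


def completion_rate(projects: list[Project]) -> float:
    """Share of projects that finished both on time and within budget."""
    if not projects:
        return 0.0
    successes = sum(1 for p in projects if p.on_time and p.within_budget)
    return successes / len(projects)


if __name__ == "__main__":
    # Hypothetical portfolio, for illustration only.
    portfolio = [
        Project("churn model", date(2024, 3, 1), date(2024, 2, 28), 50_000, 48_000),
        Project("demand forecast", date(2024, 6, 1), date(2024, 7, 15), 80_000, 79_000),
        Project("pricing analysis", date(2024, 9, 1), date(2024, 9, 1), 30_000, 35_000),
        Project("fraud detection", date(2024, 12, 1), date(2024, 11, 20), 120_000, 110_000),
    ]
    print(completion_rate(portfolio))  # 0.5
```

Tracking the two conditions separately, as the `on_time` and `within_budget` properties do, also shows whether misses come mainly from schedule or from cost.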

By monitoring these metrics, you can determine whether your technology investments are genuinely making a difference and maximize the return on your tech spending. Investment in this area is substantial. The project management software market is projected to reach $7.24 billion in 2025 and to grow at a 10.67% CAGR from 2025 to 2030. Currently, 82% of companies use project management software to enhance organizational efficiency. Learn more about project management statistics here.

Choosing the Right Technology for Your Team

Selecting the right tech stack is essential for successful data science project management, and the right choice depends on your team's and project's specific requirements. A team working on a large-scale deep learning project will have different needs than a team focused on data analysis. The team's existing skills and familiarity with particular tools also matter. Weighing these factors carefully lets you choose tools that help your team address challenges and improve its chances of achieving its goals.

Keeping Stakeholders Happy When Nothing Goes As Planned

In data science project management, maintaining stakeholder confidence when facing unexpected turns is paramount. Sometimes, initial hypotheses prove incorrect, or promising models underperform in real-world applications. Building trust and demonstrating value, even amidst these setbacks, is crucial for securing continued project support.

Communicating Technical Discoveries in Business Language

Data science often involves intricate processes and specialized terminology that can be challenging for non-technical stakeholders to understand. Clearly translating technical findings into concise, business-focused language is essential for maintaining engagement and fostering understanding. This means emphasizing the impact of your discoveries on business objectives, answering the “why” and “so what” questions, rather than delving into technical minutiae.

For instance, instead of explaining the inner workings of a specific algorithm, highlight how its application can improve customer retention or drive sales growth. This approach helps stakeholders grasp the value of the work, regardless of the technical complexity. It effectively bridges the communication gap between technical execution and tangible business results.

Managing Expectations Around Timelines and Outcomes

The inherent exploratory nature of data science makes precise timeline and outcome predictions difficult. Setting realistic expectations from the project’s inception is essential for managing potential disappointment and preserving stakeholder confidence. Openly communicating the iterative process of data science, emphasizing the frequent need for adjustments and discoveries, can prevent misunderstandings and cultivate a collaborative environment.

Furthermore, providing regular updates and communicating transparently about challenges and pivots demonstrates proactive project management. This fosters trust and ensures stakeholders understand the project's dynamic nature, enabling informed decision-making throughout the project lifecycle.

Creating Reporting Frameworks That Demonstrate Progress

Even when encountering obstacles, showcasing progress remains vital. Develop reporting frameworks that extend beyond traditional metrics and highlight valuable learnings, even from unsuccessful experiments. This demonstrates continuous improvement and progress. This might involve showcasing advancements in understanding the business problem, refinements in data collection methods, or identification of key factors influencing model performance.

By focusing on these learnings, rather than solely on achieved milestones, you can demonstrate the project’s inherent value, even when facing challenges. This emphasis on knowledge gained reinforces confidence in the team’s adaptability and ability to deliver valuable insights. It also promotes a growth mindset within the team and among stakeholders.

Building Trust and Maintaining Support

Successfully navigating uncertainty and maintaining stakeholder confidence requires proactive communication, transparent reporting, and a consistent focus on value creation, even when outcomes are unexpected. This approach builds trust and strengthens the perceived importance of data science within the organization. By effectively communicating the project’s value and demonstrating ongoing progress, data science teams can ensure continued stakeholder buy-in and ultimately achieve impactful results.

Measuring Success When Traditional Metrics Fall Short

How do you measure the success of a data science project when the original business question might be off the mark, or the most valuable discoveries come from unexpected places? Standard metrics like on-time and within-budget delivery often don’t capture the true impact. We need a smarter way to define and measure success.

Beyond Time and Budget: Rethinking KPIs For Data Science Project Management

Forward-thinking companies are moving past traditional metrics and developing more insightful frameworks. These frameworks look at not just project execution, but also how the project affects business goals. This recognizes that a data science project’s value can be the knowledge gained, even if the initial aim wasn’t fully achieved.

For example, a project could reveal new customer segments or uncover hidden relationships in the data, providing business value no one anticipated. Capturing this kind of value requires Key Performance Indicators (KPIs) that reflect both technical achievements and business impact. One example is measuring model accuracy alongside its effect on customer satisfaction or revenue growth.

By connecting technical metrics with business results, companies can show the real-world impact of their data science investments.

Demonstrating ROI When the Value Lies in Learning

Often, the best Return on Investment (ROI) in data science isn’t a finished product, but the discoveries made during the project. Even projects without a deployable model can deliver important value. They can influence business strategy or uncover new avenues to explore.

Showing ROI means demonstrating the value of these insights, even if they didn't lead to the original goal. This could mean quantifying the impact of better decisions or highlighting new business opportunities revealed by the analysis. For a deeper dive into this topic, see our guide on How to master machine learning.

Building Feedback Loops For Continuous Improvement

Effective data science teams build continuous feedback loops to keep projects aligned with evolving business needs. In practice, this means communicating regularly with stakeholders, integrating their input, and refining models and analyses as new data arrives.

This ongoing discussion between the data science team and business stakeholders lets projects adapt to changing situations and maximize their influence.

Establishing Meaningful KPIs

The table below lists example KPIs across different categories for tracking and measuring project success, with how to measure each one and what target to aim for.

| Category | Metric | Measurement Method | Target Range | Business Impact |
|---|---|---|---|---|
| Technical | Model Accuracy | F1-score, AUC | > 0.8 | Improved prediction accuracy |
| Business | Customer Churn Reduction | Percentage change in churn rate | < 5% | Increased customer retention |
| Operational | Time to Model Deployment | Days from model completion to deployment | < 14 days | Faster time to market |
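For the technical and operational rows of the table above, checking a project against its targets is mechanical. The Python sketch below computes the F1-score from confusion-matrix counts and compares results with the table's thresholds. The F1-score is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). The `KPI` structure and the example counts are hypothetical, and the churn row is left out here.

```python
from __future__ import annotations

import operator
from dataclasses import dataclass
from typing import Callable


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0  # no true positives: precision and recall are 0 or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


@dataclass
class KPI:
    name: str
    value: float
    target: float
    compare: Callable[[float, float], bool]  # e.g. operator.gt means "> target"

    def met(self) -> bool:
        return self.compare(self.value, self.target)


# Hypothetical results for one project, checked against the table's targets.
kpis = [
    KPI("Model F1-score", f1_score(tp=80, fp=10, fn=20), 0.8, operator.gt),
    KPI("Days to deployment", 10, 14, operator.lt),
]

if __name__ == "__main__":
    for kpi in kpis:
        print(f"{kpi.name}: {kpi.value:.3f} -> {'met' if kpi.met() else 'missed'}")
```

Storing the comparison alongside each target keeps "higher is better" and "lower is better" KPIs in one list. A business KPI can be added the same way once its target and direction are agreed with stakeholders.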

By using these strategies, organizations can go beyond basic project management metrics and build a more comprehensive way to measure data science project success. This approach captures the true value of data science investments and helps build a data-driven culture. In such a culture, projects not only deliver results but also contribute to continued learning and innovation.

Creating Systems That Scale Beyond Individual Projects

Successfully managing a single data science project is a great accomplishment. However, for an organization to truly thrive with data science, it needs repeatable and scalable processes that consistently lead to project success. This means establishing robust systems, refining workflows, and cultivating a data-driven culture that supports ongoing project delivery. This section explores how leading organizations build these sustainable capabilities for long-term success in data science.

Developing Internal Expertise

A cornerstone of scaling data science project management is developing in-house expertise. This isn’t just about hiring talented data scientists; it’s about creating clear career paths and providing opportunities for professional development. Organizations can achieve this through structured mentorship programs, specialized training in areas like project management methodologies specific to data science, and encouraging participation in industry conferences and workshops.

Some companies even establish internal communities of practice where data scientists can connect, share knowledge, and learn from each other’s experiences. This fosters a collaborative learning environment that significantly accelerates the development of internal expertise.

Establishing Centers of Excellence

Many organizations create Centers of Excellence (CoEs) to centralize knowledge and resources. But simply establishing a CoE isn’t enough. For a CoE to be truly effective, it needs to actively engage with different teams, offer practical guidance, and promote best practices throughout the organization.

A successful CoE serves as a central hub, sharing valuable insights and ensuring consistent standards for data science project management across all teams. This ensures the entire organization benefits from the collective knowledge and experience within the CoE.

Creating Knowledge Repositories

Another key component of scalable data science is building accessible knowledge repositories. This goes beyond just storing project documentation. It involves actively curating and organizing information so it’s easy to find and use for future projects. It’s like building a well-organized library where past project learnings are readily available to inform new initiatives, preventing teams from having to reinvent the wheel.

This repository could house code libraries, model templates, project documentation, and even recordings of presentations or training sessions. This institutional memory is incredibly valuable for onboarding new team members and maintaining continuity, even with staff changes.

Cultivating a culture of knowledge sharing is crucial. This encourages teams to contribute to the repository and recognize its value as a resource. Regular reviews and updates are also essential to keep the information current and prevent the repository from becoming outdated.

By implementing these strategies, organizations can transition from managing individual data science projects to building a truly robust and comprehensive data science capability. This fosters an environment where data-driven insights become a core organizational strength, fueling innovation and delivering long-term business value. Ready to elevate your data science projects? Explore DATA-NIZANT at https://www.datanizant.com for more insights and expert guidance on AI, machine learning, data science, and more.