Understanding the Building Blocks of Machine Intelligence: 🧠 What Are Neural Networks?

Introduction: From Brains to Bytes

In our previous post on AI, Machine Learning, and Deep Learning, we explored how machines can be trained to learn from data. One of the key driving forces behind this capability is a computational structure inspired by the human brain—Neural Networks.

But what exactly are neural networks, and why have they become so central to modern AI? Let’s break it down in simple terms.

What Is a Neural Network?

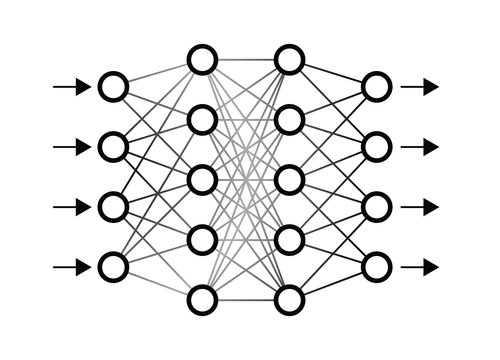

A neural network is a computational model that learns to recognize patterns in data, loosely inspired by how our brains process information. It’s called a “network” because it consists of layers of interconnected nodes, also known as neurons, that pass data to one another.

Each neuron receives input, processes it, and passes it forward to the next layer, gradually transforming raw input into meaningful output.

Basic Structure of a Neural Network

At its simplest, a neural network has:

- Input Layer – Receives the data (e.g., pixel values of an image).

- Hidden Layer(s) – Performs calculations and feature transformations.

- Output Layer – Produces the final prediction or classification.

Each connection between neurons has a weight, which determines how strongly one neuron’s output influences the next. Each neuron also has a bias term: it adds up its weighted inputs, adds the bias, and passes the result through an activation function, a mathematical function that introduces non-linearity (such as sigmoid or ReLU).
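As a rough sketch, here is what a single neuron computes, written in plain Python. The inputs, weights, and bias are made-up values for illustration, and `neuron`, `sigmoid`, and `relu` are our own helper functions rather than any library’s API.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z: float) -> float:
    """Pass positive values through unchanged; clip negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of the inputs, plus the bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
bias = 0.1

# z = 0.4 - 0.2 - 1.0 + 0.1 = -0.7
print(neuron(inputs, weights, bias, relu))     # 0.0, because ReLU clips the negative sum
print(neuron(inputs, weights, bias, sigmoid))  # about 0.332
```

With these numbers the weighted sum plus bias is negative, so ReLU outputs 0 while sigmoid returns a value a little below 0.5.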

Why Use Neural Networks?

Neural networks have emerged as a transformative technology in artificial intelligence, especially for solving problems that are difficult—if not impossible—to address with traditional rule-based programming.

Unlike conventional software, where explicit rules are hard-coded by developers, neural networks learn patterns directly from data. This makes them incredibly powerful in domains where:

- The data is unstructured or high-dimensional

- The relationships between inputs and outputs are non-linear

- There is no clear set of deterministic rules

Here are some real-world scenarios where neural networks shine:

🖼️ Image Recognition

Neural networks—particularly Convolutional Neural Networks (CNNs)—have revolutionized computer vision. They can detect objects, faces, emotions, and even medical anomalies in images with impressive accuracy. For instance:

- Self-driving cars use CNNs to detect pedestrians, lanes, and traffic signs.

- Photo apps use neural nets to categorize and tag your pictures automatically.

Traditional code would require exhaustive conditions for every edge case. Neural networks generalize across them.

🎙️ Speech Processing

Speech is naturally variable and complex. With neural networks:

- Voice assistants like Siri, Google Assistant, and Alexa can convert spoken language into text (speech recognition).

- Speech synthesis (text-to-speech) has improved in naturalness using deep learning models.

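Before deep learning, speech systems relied on hand-engineered acoustic features and statistical models (such as Gaussian mixture models combined with hidden Markov models) that were fragile under noise and variation. Deep learning replaced many of those components with more robust learned representations.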

🧠 Natural Language Understanding

Neural networks are behind major breakthroughs in natural language processing (NLP), including:

- Text classification (e.g., spam detection, sentiment analysis)

- Machine translation (e.g., English to French)

- Question answering and chatbots

By encoding language in numerical vectors (word embeddings) and learning relationships between them, networks can grasp context, tone, and nuance—something traditional grammar-based parsing struggled with.
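To give a feel for how embeddings let a model compare meanings, here is a toy sketch. The three-dimensional vectors are invented by hand purely for illustration (real embeddings are learned from text and typically have hundreds of dimensions), and cosine similarity is just one common way to compare them.

```python
import math

# Hypothetical 3-dimensional "embeddings", chosen by hand for illustration only.
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(embeddings["king"], embeddings["queen"]))  # about 0.99: similar words
print(cosine_similarity(embeddings["king"], embeddings["apple"]))  # about 0.30: unrelated words
```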

💳 Fraud Detection

Financial institutions use neural networks to flag suspicious behavior in real time:

- Detecting unusual transactions

- Identifying compromised accounts

- Spotting patterns across user histories that correlate with fraud

Because fraudulent behavior often shows up as subtle anomalies, neural networks can outperform rigid, rule-based systems, and they can be retrained on fresh data as fraud tactics evolve.

The Common Thread: Pattern Recognition

What ties all these applications together is the neural network’s ability to recognize patterns in vast amounts of data, even when:

- The data is noisy or incomplete

- The relationship between input and output isn’t obvious

- Human-written rules are infeasible

This is why neural networks are often described as function approximators—they can model complex relationships and generalize to unseen data.
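A classic illustration of a non-linear relationship is XOR: the output is 1 when exactly one input is 1. No single straight line separates XOR’s outputs, so a single neuron with a threshold cannot compute it, but a network with one small hidden layer can. The weights below were picked by hand for this sketch; in practice, training would search for suitable weights on its own.

```python
def relu(z):
    return max(0.0, z)

def xor_network(x1, x2):
    """A tiny two-layer network with hand-picked (not learned) weights that computes XOR."""
    # Hidden layer: two ReLU neurons that both weight each input by 1.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)  # only "fires" when both inputs are 1
    # Output layer: a linear combination of the hidden activations.
    return 1.0 * h1 - 2.0 * h2

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_network(x1, x2))
# 0 0 -> 0.0
# 0 1 -> 1.0
# 1 0 -> 1.0
# 1 1 -> 0.0
```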

Learning Through Training

At the heart of a neural network’s intelligence lies its ability to learn from data. This learning process is called training, and it allows the network to discover patterns, adjust internal parameters, and improve its predictions over time.

Rather than being explicitly programmed with rules, neural networks adapt their behavior by analyzing examples—just like a student learning from practice problems.

🧪 The Training Workflow: Step-by-Step

Here’s a simplified breakdown of how a neural network learns:

🔄 1. Forward Propagation

- The input data (e.g., pixel values from an image or numerical features from a dataset) enters the input layer.

- It flows forward through the hidden layers, where mathematical operations (such as weighted sums and activation functions) transform the data.

- The output layer generates a prediction—such as a class label or a numeric value.

This step involves no learning: it simply shows what the network currently “thinks” based on its current weights and biases.
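The forward pass can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the 2-3-1 layer sizes and all weights are made up, every layer uses a sigmoid activation, and `dense_layer` and `forward` are hypothetical helpers, not a framework API.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one output neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, params):
    """Pass the input through every layer in order and return the final output."""
    activations = x
    for weights, biases in params:
        activations = dense_layer(activations, weights, biases, sigmoid)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
params = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.5, 0.7]], [0.2]),                                   # output layer
]

prediction = forward([1.0, 0.5], params)
print(prediction)  # a list holding one value between 0 and 1
```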

📉 2. Loss Calculation

- The prediction is compared to the actual answer (ground truth).

- A loss function (such as Mean Squared Error for regression or Cross-Entropy for classification) quantifies the difference between the predicted output and the actual label.

- This error, or loss, tells the network how far off it was.

Think of this as the neural network grading its own performance.
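Both loss functions mentioned above are short formulas. The sketch below computes them for single examples; in practice the loss is usually averaged over a batch, and classification probabilities typically come from a softmax output layer. The function names are our own.

```python
import math

def mean_squared_error(predictions, targets):
    """Average squared difference between predictions and targets (common for regression)."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(probabilities, true_class):
    """Negative log of the probability assigned to the correct class (common for classification)."""
    return -math.log(probabilities[true_class])

print(mean_squared_error([2.5, 0.0], [3.0, -0.5]))   # ((-0.5)**2 + 0.5**2) / 2 = 0.25
print(cross_entropy([0.7, 0.2, 0.1], true_class=0))  # confident and correct: about 0.357
print(cross_entropy([0.1, 0.2, 0.7], true_class=0))  # confident and wrong: about 2.303
```

Notice how sharply cross-entropy grows when the model puts low probability on the correct class.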

🔁 3. Backpropagation

- The network then “works backwards” from the output layer to figure out how much each weight and bias contributed to the error.

- Using the chain rule from calculus, it computes the partial derivative (gradient) of the loss with respect to every weight and bias, layer by layer.

- These gradients give the direction and magnitude of the change that would reduce the error. This process is known as backpropagation of error.

It’s like a student realizing which steps in their math solution were wrong and adjusting their method.
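For a single sigmoid neuron with one input and a squared-error loss, backpropagation reduces to applying the chain rule by hand. This sketch assumes that tiny setup (a real network repeats the same idea across many layers and weights) and checks the result against a numerical finite-difference estimate, a common way to sanity-check gradient code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a one-input sigmoid neuron."""
    return (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    """Backpropagation by hand: chain rule from the loss back to w and b."""
    y_hat = sigmoid(w * x + b)
    dloss_dyhat = 2.0 * (y_hat - y)    # derivative of (y_hat - y)**2
    dyhat_dz = y_hat * (1.0 - y_hat)   # derivative of the sigmoid
    dz_dw, dz_db = x, 1.0              # derivatives of z = w*x + b
    delta = dloss_dyhat * dyhat_dz
    return delta * dz_dw, delta * dz_db

w, b, x, y = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = gradients(w, b, x, y)

# Sanity check: central finite-difference estimates of the same derivatives.
eps = 1e-6
num_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
num_b = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
print(grad_w, num_w)  # the two values should agree closely
print(grad_b, num_b)
```

Both gradients come out negative here because the prediction is below the target, which tells gradient descent to increase `w` and `b`.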

🔄 4. Weight Update

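- Using gradient descent, each weight (and bias) is moved a small step in the direction opposite to its gradient; the step size is the gradient multiplied by a hyperparameter called the learning rate.

- Keeping the updates small helps the network converge gradually toward lower loss; a learning rate that is too large can overshoot the minimum and make training unstable.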
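The update rule itself is simple: new weight = old weight - learning rate * gradient. The sketch below applies it to a toy one-parameter loss, (w - 3)², standing in for a network’s loss so the effect of the learning rate is easy to see. The function name and values are illustrative assumptions.

```python
def gradient_descent(learning_rate, steps=20, w=0.0):
    """Minimize f(w) = (w - 3)**2, whose gradient is 2 * (w - 3)."""
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w = w - learning_rate * grad  # step against the gradient
    return w

print(gradient_descent(0.1))  # about 2.965: small steps creep toward the minimum at 3
print(gradient_descent(1.1))  # about -112: each step overshoots further, so w diverges
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by 20%; with 1.1, each step overshoots and lands 20% farther away than before.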

🔁 5. Repeat Over Many Epochs

- An epoch is one complete pass through the entire training dataset.

- The network undergoes this process across multiple epochs, constantly refining its weights to minimize the loss.

- As training progresses, the network gets better at making accurate predictions.

It’s an iterative cycle of improvement—predict → compare → correct → repeat.
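Putting the steps together gives the full training loop. This is a minimal sketch, not a production recipe: a single sigmoid neuron learns the logical OR function, using binary cross-entropy as the loss and updating after every example. For this particular pairing of sigmoid and cross-entropy, the gradient of the loss with respect to the neuron’s weighted sum simplifies to `y_hat - y`, which keeps the backpropagation step to one line. The dataset, learning rate, and epoch count are arbitrary choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: the logical OR of two binary inputs, as (inputs, target) pairs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5

def predict(x):
    """Forward propagation for a single sigmoid neuron, using the current weights."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

for epoch in range(1000):  # one epoch = one full pass over the training data
    total_loss = 0.0
    for x, y in data:
        y_hat = predict(x)                                                      # 1. forward
        total_loss += -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))    # 2. loss
        delta = y_hat - y                                                       # 3. backprop
        weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)] # 4. update
        bias -= learning_rate * delta
    if epoch % 200 == 0:
        print(f"epoch {epoch}: average loss {total_loss / len(data):.4f}")

for x, y in data:
    print(x, "->", round(predict(x), 3), "(target", y, ")")
```

The printed average loss shrinks from epoch to epoch, and the final predictions land close to their targets.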

🧠 Why This Works

The power of this training loop lies in adjusting internal representations (weights and biases) to capture increasingly abstract features of the input data:

- In early layers, a network might learn to detect simple shapes (e.g., edges or corners).

- In deeper layers, it begins recognizing patterns, objects, or even meaning, depending on the data type.

Through repetition and feedback, neural networks can master complex tasks—without being explicitly told how to solve them.
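Early-layer feature detection can be illustrated with a convolution, the core operation in a CNN. In this sketch the 3x3 kernel is hand-picked to respond to vertical edges; a trained CNN learns its own kernels from data, although first-layer kernels often end up resembling edge detectors like this one. The image is a made-up 4x6 grid of brightness values, and `convolve2d` is our own helper.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like the convolution layers in common deep learning libraries, this does not
    flip the kernel (strictly speaking, it computes cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A tiny grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hand-picked vertical-edge kernel: responds where brightness rises from left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # prints [0, 27, 27, 0] for each of the two output rows
```

The response is zero over flat regions and large where dark pixels meet bright ones, which is the kind of simple feature deeper layers can build on.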

Types of Neural Networks (As of 2019)

While the basic idea remains the same, different tasks require different architectures:

- Feedforward Neural Networks (FNN) – Data flows in one direction; simplest type.

- Convolutional Neural Networks (CNN) – Great for image data.

- Recurrent Neural Networks (RNN) – Useful for sequential data like text and time series.

- Multilayer Perceptrons (MLP) – A feedforward network made of stacked, fully connected layers.

By 2019 all of these were in active use, with CNNs and RNNs especially prominent in mainstream deep learning applications.
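What makes an RNN “recurrent” is a hidden state that is carried from one step of a sequence to the next. The sketch below uses a single recurrent unit with arbitrary, hand-chosen weights (real RNNs use vectors of hidden units, learned weight matrices, and often gated variants such as LSTMs). Both input sequences contain the same values, so an order-insensitive summary like their sum is identical, yet the final hidden states differ because the recurrent cell is sensitive to order.

```python
import math

def rnn_final_state(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Run a one-unit recurrent cell over a sequence and return the last hidden state.

    At each step the new state mixes the current input with the previous state:
        h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = 0.0  # initial hidden state
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
    return h

print(rnn_final_state([1.0, 0.0, 0.0]))  # early input has faded: about 0.159
print(rnn_final_state([0.0, 0.0, 1.0]))  # recent input dominates: about 0.664
```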

Challenges and Limitations

In 2019, despite their promise, neural networks faced a few notable challenges:

- Data Hungry – Require large amounts of labeled data.

- Compute Intensive – Need powerful hardware (often GPUs).

- Black Box Nature – Hard to interpret decisions (which led to growing interest in Explainable AI).

Real-World Examples

By 2019, neural networks were already powering:

- Google Translate (behind the scenes)

- Facial Recognition in Social Media

- Product Recommendations

- Voice Assistants like Siri and Alexa

These systems used neural networks under the hood to make intelligent decisions from massive amounts of data.

Conclusion: A Foundational AI Tool

Neural networks form the backbone of deep learning. While the idea has been around since the 1940s, only in recent years (roughly since 2012) have GPU-powered computing and large labeled datasets caught up to the theory, allowing them to shine.

As we continue this AI journey, understanding neural networks gives you the foundation to explore more advanced concepts like deep learning, generative models, and more.