A Deep Dive Into the Inner Workings of Large Language Models: 🧠 Transformer Architecture Explained: The Brain Behind LLMs

🔍 Introduction: Beyond Thought Simulation

In our previous blog on Thought Generation in AI and NLP, we explored how modern AI systems can simulate reasoning, explanation, and creativity. At the heart of this capability lies a game-changing innovation in deep learning: the Transformer architecture.

Introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., transformers have become the standard building block for nearly every large language model (LLM), including GPT, BERT, PaLM, and Claude.

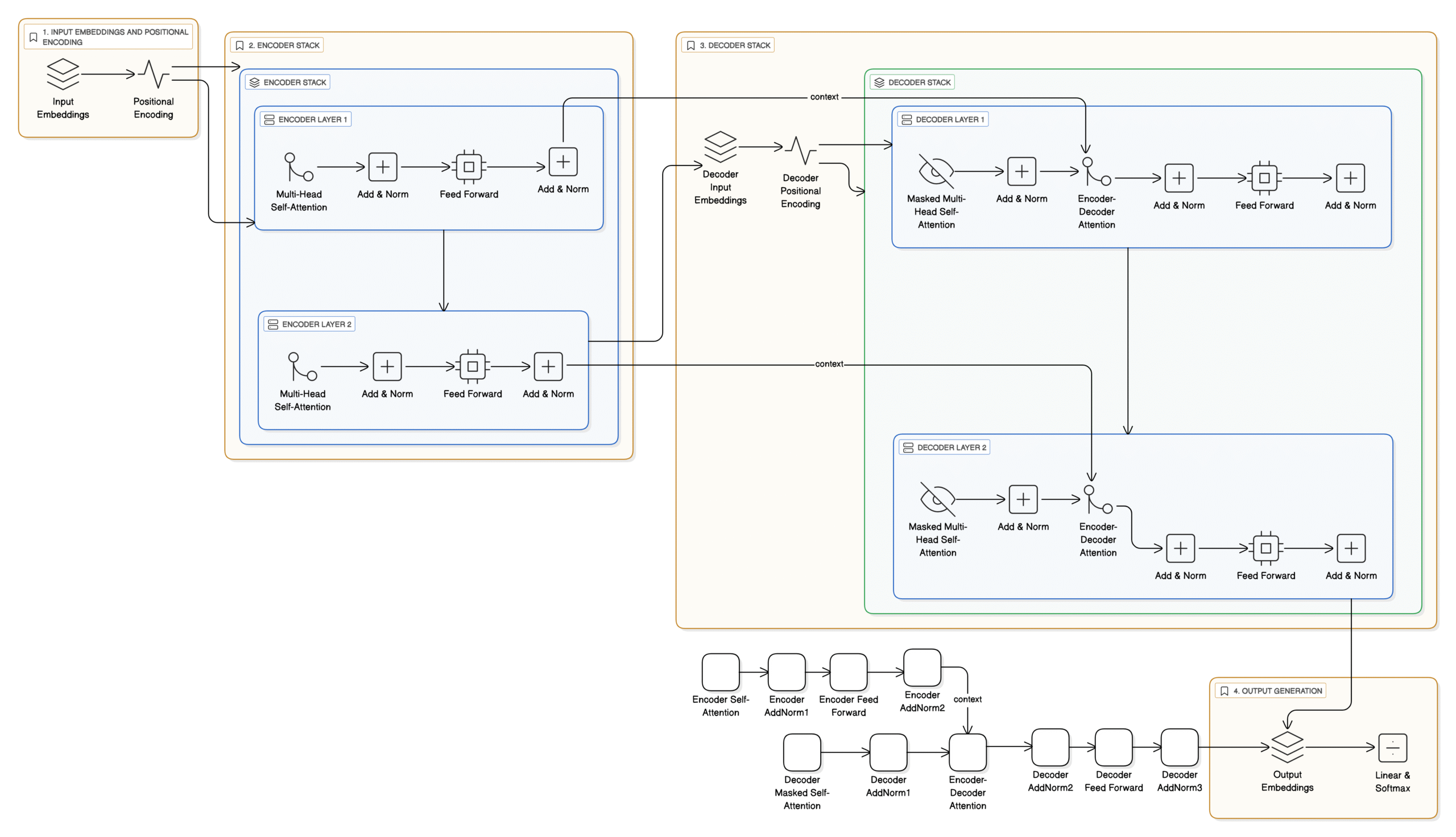

This blog takes a hardcore technical deep dive into the full transformer architecture diagram you see above. Whether you’re a machine learning practitioner, an NLP researcher, or a curious engineer, this post is designed to break down each and every component of the transformer pipeline in clear, sequential detail.

🧭 Overview: What Are Transformers, and Why Do They Matter?

Before we dissect the diagram, let’s ground ourselves with context.

A Transformer is a neural network architecture built around one key idea: instead of processing tokens one at a time (like RNNs or LSTMs), it uses self-attention to process all tokens in a sequence in parallel. Parallel processing makes training faster and easier to scale on modern hardware, and because every token can attend directly to every other token, long-range dependencies are easier to capture. All of these are critical to the success of modern LLMs.

🔄 Encoder-Decoder Structure

Transformers have two primary components:

- Encoder Stack: Takes input text and turns it into a contextual representation.

- Decoder Stack: Takes that representation and generates the output text token-by-token.

LLMs like GPT use only the decoder, while BERT uses only the encoder. The full encoder-decoder structure is mostly used in sequence-to-sequence tasks like translation.

🧱 Part 1: Input Embeddings and Positional Encoding

🔡 Input Embeddings

Text cannot be fed directly into a neural network—it must be converted into numerical vectors. This is where token embeddings come in. Each word or subword token is mapped to a fixed-length vector, learned during training.

Example: The word “transformer” might be mapped to a 768-dimensional vector. At this stage the vector is the same wherever the token appears; the attention layers that follow are what make the representation depend on context.
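To make the lookup concrete, here is a minimal NumPy sketch. The four-word vocabulary, the width of 8, and the random table are all illustrative assumptions; in a real model the table is learned and the vocabulary holds tens of thousands of subword tokens.

```python
import numpy as np

# Hypothetical toy vocabulary (real models use subword tokenizers).
vocab = {"the": 0, "transformer": 1, "reads": 2, "text": 3}
d_model = 8  # embedding width; the article's example uses 768

rng = np.random.default_rng(0)
# The embedding table is a trainable matrix; random values stand in for learned weights.
embedding_table = rng.normal(size=(len(vocab), d_model))

def embed(tokens):
    """Map a list of token strings to a (seq_len, d_model) array by row lookup."""
    ids = [vocab[t] for t in tokens]
    return embedding_table[ids]

x = embed(["the", "transformer", "reads", "text"])
print(x.shape)  # (4, 8)
```

An embedding layer is nothing more than indexing rows of a matrix, which is why the same token always starts from the same vector.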

📍 Positional Encoding

Unlike RNNs, transformers don’t process tokens in order—they look at them all at once. So how do they know the order of words?

Positional encodings are special vectors added to the embeddings to give the model information about the position of each word in the sequence.

Without positional encoding: “Dog bites man” and “Man bites dog” could look the same to the model.

There are many ways to design positional encodings—sinusoidal, learned, rotary, etc.—but all serve the same purpose: adding positional awareness.
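As one concrete option, the sketch below implements the sinusoidal scheme from the original paper in NumPy and adds it to three made-up token vectors. The token vectors are random placeholders, not real embeddings, and `d_model` is assumed to be even.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) array of sinusoidal position vectors (d_model even)."""
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return pe

pe = sinusoidal_positional_encoding(seq_len=3, d_model=8)

# Placeholder vectors standing in for the embeddings of "dog", "bites", "man".
rng = np.random.default_rng(0)
dog, bites, man = rng.normal(size=(3, 8))
sentence_a = np.stack([dog, bites, man]) + pe  # "dog bites man"
sentence_b = np.stack([man, bites, dog]) + pe  # "man bites dog"

# "dog" at position 0 no longer matches "dog" at position 2.
print(np.allclose(sentence_a[0], sentence_b[2]))  # False
```

Because the position vector is simply added to the token vector, the same word ends up with a different input representation at each position.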

🧠 Part 2: Encoder Stack

This section breaks down the Encoder Stack layer by layer, starting with Multi-Head Self-Attention, one of the most important innovations in deep learning. We’ll explain what it does, how it works, and how multiple attention heads run in parallel to enrich the model’s understanding of input sequences.

🔁 Multi-Head Self-Attention

This is the heart of the transformer. Self-attention allows each token to “look at” every other token in the sequence and weigh their relevance. Multi-head means the model performs this attention operation multiple times in parallel, capturing different types of relationships.

Example: In the sentence “The cat the dog chased ran away,” attention helps the model learn that “cat” is the subject of “ran,” not “dog.”

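Each attention head projects the input into query, key, and value vectors, scores every query against every key (a scaled dot product followed by a softmax), and uses those scores to compute a weighted sum of the value vectors. The outputs of all heads are then concatenated and linearly transformed.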
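The following NumPy sketch shows one way to write multi-head self-attention for a single sequence. The weight matrices are random stand-ins for learned parameters, the sizes are arbitrary, and biases, dropout, and batching are left out to keep it short.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); every weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)                   # each row sums to 1
    heads = weights @ v                                  # (num_heads, seq_len, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)  # (5, 8) (2, 5, 5)
```

Each of the two heads produces its own 5×5 attention map, so different heads are free to track different relationships between the same tokens.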

➕ Add & Norm

After attention, the output is added back to the original input (a residual connection) and passed through layer normalization, which stabilizes training.
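A rough NumPy sketch of this step is below. The `gamma` and `beta` parameters are the learned scale and shift of layer normalization, set here to ones and zeros as an assumption, and the "sub-layer output" is random data standing in for the attention result.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer_out, gamma, beta):
    """Residual connection followed by layer normalization."""
    return layer_norm(x + sublayer_out, gamma, beta)

rng = np.random.default_rng(0)
d_model = 8
x = rng.normal(size=(4, d_model))             # input to the sub-layer
sublayer_out = rng.normal(size=(4, d_model))  # placeholder for the attention output
gamma, beta = np.ones(d_model), np.zeros(d_model)

y = add_and_norm(x, sublayer_out, gamma, beta)
print(y.shape)  # (4, 8)
```

The normalization runs over the feature dimension of each token separately, so it does not depend on the sequence length or on the other tokens.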

🛠️ Feed Forward Network

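This is a small two-layer fully connected network (a linear layer, a non-linearity such as ReLU, and a second linear layer) applied to each position independently with the same weights. It lets the model transform each token’s representation non-linearly.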
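Here is a small NumPy sketch of a position-wise feed-forward network, assuming a ReLU activation and a hidden width four times the model width (the ratio used in the original paper). The weights are random placeholders.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    """Two linear layers with a ReLU in between, applied to every row of x."""
    hidden = np.maximum(0.0, x @ w1 + b1)  # expand to d_ff and apply ReLU
    return hidden @ w2 + b2                # project back to d_model

rng = np.random.default_rng(0)
d_model, d_ff = 8, 32
w1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

x = rng.normal(size=(4, d_model))
full = feed_forward(x, w1, b1, w2, b2)
single = feed_forward(x[2:3], w1, b1, w2, b2)  # only the token at position 2

# "Position-wise": processing one token alone gives the same result as in the full sequence.
print(np.allclose(full[2], single[0]))  # True
```

The final check illustrates what "applied to each position independently" means: no information moves between tokens in this sub-layer, only within each token's vector.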

The process (Attention → Add & Norm → FFN → Add & Norm) is repeated across multiple encoder layers.
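Putting the pieces together, the sketch below stacks three simplified encoder layers in NumPy. It is deliberately reduced: attention is single-head, biases and the learned layer-norm parameters are omitted, and the helper `init_layer` and all sizes are hypothetical choices for illustration.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Learned scale and shift are omitted for brevity.
    return (x - x.mean(axis=-1, keepdims=True)) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)

def encoder_layer(x, p):
    # Sub-layer 1: (single-head) self-attention, then Add & Norm.
    q, k, v = x @ p["w_q"], x @ p["w_k"], x @ p["w_v"]
    attn = softmax(q @ k.T / np.sqrt(x.shape[-1])) @ v
    x = layer_norm(x + attn)
    # Sub-layer 2: position-wise feed-forward network, then Add & Norm.
    ffn = np.maximum(0.0, x @ p["w1"]) @ p["w2"]
    return layer_norm(x + ffn)

def init_layer(rng, d_model, d_ff):
    """Hypothetical helper: random weights standing in for trained parameters."""
    shapes = {
        "w_q": (d_model, d_model), "w_k": (d_model, d_model), "w_v": (d_model, d_model),
        "w1": (d_model, d_ff), "w2": (d_ff, d_model),
    }
    return {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}

rng = np.random.default_rng(0)
d_model, d_ff, num_layers = 8, 32, 3
layers = [init_layer(rng, d_model, d_ff) for _ in range(num_layers)]

x = rng.normal(size=(4, d_model))  # 4 tokens, already embedded and position-encoded
for p in layers:
    x = encoder_layer(x, p)        # each layer keeps the (seq_len, d_model) shape
print(x.shape)  # (4, 8)
```

Since every layer maps a `(seq_len, d_model)` array to another array of the same shape, layers can be stacked as deep as the compute budget allows.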

🗣️ Part 3: Decoder Stack

The decoder works similarly to the encoder but has two types of attention layers:

🙈 Masked Multi-Head Self-Attention

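The mask blocks each position from attending to later positions, so the model cannot “cheat” during training by looking at the tokens it is supposed to predict. Concretely, the attention scores for future tokens are set to negative infinity before the softmax, which drives their weights to zero.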
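A minimal single-head sketch of the masking step in NumPy, assuming the common approach of setting future scores to negative infinity before the softmax. The query, key, and value arrays are random placeholders.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def causal_self_attention(q, k, v):
    """Single-head attention in which position i can only attend to positions <= i."""
    seq_len, d_k = q.shape
    scores = q @ k.T / np.sqrt(d_k)
    # True strictly above the diagonal, i.e. wherever the key lies in the future.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)  # exp(-inf) = 0 after the softmax
    weights = softmax(scores)
    return weights @ v, weights

rng = np.random.default_rng(0)
q, k, v = rng.normal(size=(3, 4, 8))  # three (seq_len=4, d_k=8) arrays
out, weights = causal_self_attention(q, k, v)

print(np.allclose(np.triu(weights, k=1), 0.0))  # True: no weight on future tokens
```

The first token can only attend to itself, so the first row of `weights` is `[1, 0, 0, 0]`; each later row spreads its weight over one more position.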

🔁 Encoder-Decoder Attention

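This layer lets the decoder focus on the encoder’s output: the queries come from the decoder, while the keys and values come from the encoder’s final representation. This is how the decoder pulls relevant context from the input sentence.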
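The sketch below shows single-head encoder-decoder (cross) attention in NumPy. The shapes are arbitrary assumptions (six source tokens, three target tokens generated so far), and the weights are random placeholders.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def cross_attention(decoder_x, encoder_out, w_q, w_k, w_v):
    """Queries come from the decoder; keys and values come from the encoder output."""
    q = decoder_x @ w_q    # (tgt_len, d_model)
    k = encoder_out @ w_k  # (src_len, d_model)
    v = encoder_out @ w_v  # (src_len, d_model)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (tgt_len, src_len)
    return weights @ v, weights

rng = np.random.default_rng(0)
d_model, src_len, tgt_len = 8, 6, 3
encoder_out = rng.normal(size=(src_len, d_model))  # placeholder encoder output
decoder_x = rng.normal(size=(tgt_len, d_model))    # placeholder decoder states
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

context, weights = cross_attention(decoder_x, encoder_out, w_q, w_k, w_v)
print(context.shape, weights.shape)  # (3, 8) (3, 6)
```

The attention map is rectangular here: each of the three target positions gets its own distribution over the six source tokens, and no causal mask is needed because the whole input sentence is already known.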

➕ Add & Norm + Feed Forward

Same as in the encoder: residual connections and layer normalization wrap each sub-layer.

Multiple decoder layers are stacked, and the output of the final layer feeds the output-generation step.

🧮 Part 4: Output Generation

The final decoder layer’s output goes through:

- Linear Transformation: Maps the high-dimensional vector to a vocabulary-sized vector.

- Softmax Layer: Converts these scores into probabilities for each token in the vocabulary.

The model then picks the next token, either by taking the one with the highest probability (greedy decoding) or by sampling from the most likely candidates (for example, top-k sampling).
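As a rough illustration of this last step, the NumPy sketch below projects one decoder output vector onto a toy vocabulary, applies softmax, and then picks a token both greedily and with top-k sampling. The five-word vocabulary, the random weights, and the helper names are assumptions made for the example.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def next_token_probs(hidden, w_out, b_out):
    """Linear projection to vocabulary size, then softmax."""
    logits = hidden @ w_out + b_out
    return softmax(logits)

def top_k_sample(probs, k, rng):
    """Sample a token id from the k most probable tokens, renormalized to sum to 1."""
    top_ids = np.argsort(probs)[-k:]
    top_probs = probs[top_ids] / probs[top_ids].sum()
    return int(rng.choice(top_ids, p=top_probs))

vocab = ["the", "cat", "dog", "ran", "away"]  # hypothetical toy vocabulary
rng = np.random.default_rng(0)
d_model = 8
hidden = rng.normal(size=d_model)             # placeholder final decoder output
w_out = rng.normal(size=(d_model, len(vocab)))
b_out = np.zeros(len(vocab))

probs = next_token_probs(hidden, w_out, b_out)
greedy_id = int(np.argmax(probs))             # greedy decoding
sampled_id = top_k_sample(probs, k=3, rng=rng)
print(vocab[greedy_id], vocab[sampled_id])
```

Greedy decoding is deterministic, while top-k sampling trades some predictability for variety; the chosen token is appended to the sequence and the decoder runs again to produce the next one.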

🧩 Part 5: Summary Table of Components

| Component | Purpose |
|---|---|
| Input Embeddings | Convert words into vectors |
| Positional Encoding | Encode order of tokens |
| Multi-Head Self-Attention | Learn relationships between all tokens |
| Add & Norm | Stabilize learning and preserve context |
| Feed Forward | Non-linear transformation of token representations |
| Masked Self-Attention (Dec) | Prevent peeking at future tokens during training |
| Encoder-Decoder Attention | Focus on input sequence while generating output |
| Linear + Softmax | Predict next token from model output |

🧠 Final Thoughts

Transformers revolutionized NLP and remain the foundation for nearly every major LLM today. They replaced older sequential models with a scalable, parallelizable structure in which every token can draw on context from the entire sequence.

As you continue exploring LLMs, understanding the transformer isn’t just helpful—it’s essential.

In the next post, we’ll contrast encoder-only models like BERT, decoder-only models like GPT, and encoder-decoder hybrids like T5—and how each design influences performance and application.