How LSTM Became the Workhorse of AI-Driven Forecasting Across Industries

🔍 Find out how LSTM time series forecasting changed the approach to data prediction, and why it often outperforms traditional models on complex, nonlinear data

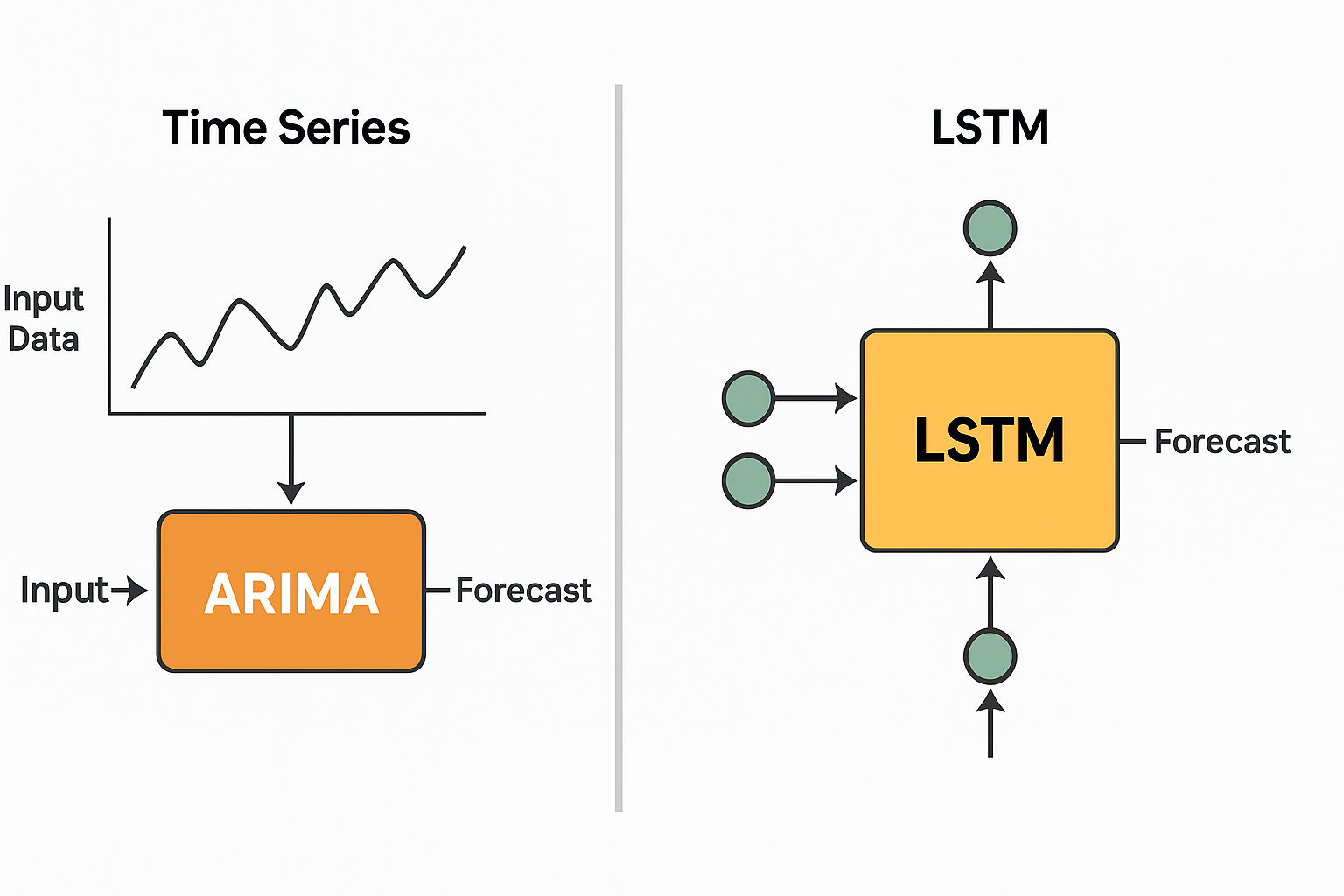

For decades, time series forecasting was synonymous with models like AR, MA, and ARIMA: mathematical frameworks built on assumptions of linearity and stationarity, with seasonality handled through explicit extensions such as SARIMA. While powerful in their domain, these models often struggle with the nonlinear patterns, sudden regime shifts, and multivariate complexity found in modern datasets.

Enter LSTM (Long Short-Term Memory), a specialized recurrent neural network architecture designed to capture long-range dependencies in sequential data. Unlike traditional models, LSTMs need little manual feature engineering and make few rigid assumptions about the data. They learn directly from historical sequences, picking up trends, cycles, lag effects, and irregular patterns that linear models tend to miss.

What makes LSTM truly transformative is its:

- 🔁 Gated memory mechanism that learns which past events are relevant and which to forget

- 🧠 Flexibility across industries—from predicting stock movements to forecasting patient vitals

- 🧰 Ability to handle multivariate inputs and external regressors, and to cope with missing data through masking or imputation
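To make the multivariate and missing-data points concrete, here is a minimal pure-Python sketch of how such inputs are typically arranged for an LSTM: one sample per sliding window, each window a list of time steps, each step a list of features. It assumes a single target series with gaps marked as `None`, one external regressor, and one common (but not the only) way of handling gaps: zero-filling plus a 0/1 missing-value indicator. The `make_windows` helper and the `sales`/`promo` data are hypothetical. Keras LSTM layers expect this (samples, timesteps, features) layout by default, and PyTorch's `nn.LSTM` does when `batch_first=True`, usually as arrays or tensors rather than nested lists.

```python
from typing import List, Optional, Tuple


def make_windows(
    target: List[Optional[float]],
    regressor: List[float],
    window: int,
) -> Tuple[List[List[List[float]]], List[float]]:
    """Build (samples, timesteps, features) inputs and next-step targets.

    Features per time step: target value (0.0 if missing), a 0/1 missing flag,
    and the external regressor. Windows whose label is missing are skipped.
    """
    if len(target) != len(regressor):
        raise ValueError("target and regressor must have the same length")
    inputs: List[List[List[float]]] = []
    labels: List[float] = []
    for end in range(window, len(target)):
        label = target[end]
        if label is None:
            continue  # no ground truth to train on for this window
        steps = []
        for t in range(end - window, end):
            value = target[t]
            missing = value is None
            steps.append([0.0 if missing else value, 1.0 if missing else 0.0, regressor[t]])
        inputs.append(steps)
        labels.append(label)
    return inputs, labels


# Hypothetical daily sales with one gap, plus a promotion flag as the external regressor.
sales = [10.0, 12.0, None, 13.0, 15.0]
promo = [0.0, 1.0, 1.0, 0.0, 1.0]
X, y = make_windows(sales, promo, window=2)
print(len(X), len(X[0]), len(X[0][0]))  # 2 2 3
print(y)  # [13.0, 15.0]
```

Dropping windows whose label is missing keeps the training targets honest, while the indicator feature lets the network learn to discount zero-filled steps instead of treating them as real zeros.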

While classical methods remain valuable for their transparency and simplicity, LSTM represents a paradigm shift—offering deep pattern recognition where human-crafted rules fall short.

It’s not just a new model—it’s a new mindset for predictive modeling.

From Academic Curiosity to Kaggle Hero: The Rise of LSTM in Time Series Prediction

“Sometimes the best ideas take a decade to be understood.” — Sepp Hochreiter

In the world of neural networks, few stories are as redemptive and industry-shaping as that of Long Short-Term Memory (LSTM) networks. Invented in 1997 by Sepp Hochreiter and Jürgen Schmidhuber, LSTMs were designed to solve a critical flaw in Recurrent Neural Networks (RNNs): vanishing gradients, which keep plain RNNs from learning dependencies that span many time steps. Although the design was sound, LSTMs stayed largely within research labs for years and only broke into mainstream time series forecasting around 2015–2016.

🧨 What Triggered the LSTM Boom?

From 2015 onward, a confluence of trends made LSTM a leading choice for time series forecasting:

- Kaggle competitions (especially in stock price and energy forecasting) saw LSTM entries compete with, and sometimes outperform, traditional models like ARIMA, even without hand-tuned features.

- Google, Amazon, and Uber published research applying LSTMs to demand forecasting, anomaly detection, and multivariate prediction tasks.

- The release of TensorFlow (November 2015) and the rise of Keras reduced building an LSTM to a few lines of code.

- Big data met affordable compute: larger datasets and GPU training made it practical to fit LSTMs that capture long-range temporal dependencies traditional models struggle to represent.

🧬 How LSTM Works (Briefly)

At the heart of LSTM is a clever gating mechanism:

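| Gate | Function |
|---|---|
| Forget Gate | Decides what to discard from the cell state |
| Input Gate | Decides what new information to write to the cell state |
| Output Gate | Decides which parts of the cell state to expose as the hidden state, which is the step's output and is passed to the next time step |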

Instead of treating each time step equally, LSTMs learn what’s relevant, allowing them to remember seasonality, trends, and lag-based effects—critical for forecasting problems.
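The gate table above can be turned into a single time step of computation. The sketch below is a minimal pure-Python LSTM cell, assuming the standard formulation in which each gate applies its own weights to the concatenated previous hidden state and current input. The parameter layout, helper names, and hand-set weights are hypothetical and chosen for readability; they are not taken from any library.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, Tuple[Matrix, Vector]]  # gate name -> (W, b); W rows act on [h, x]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W @ v + b with plain Python lists."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x: Vector, h: Vector, c: Vector, p: Params) -> Tuple[Vector, Vector]:
    """One LSTM time step; every gate sees the concatenation [h, x]."""
    hx = h + x  # list concatenation, not element-wise addition

    def gate(name: str, act: Callable[[float], float]) -> Vector:
        W, b = p[name]
        return [act(z) for z in affine(W, b, hx)]

    f = gate("forget", sigmoid)   # forget gate: how much of the old cell state to keep
    i = gate("input", sigmoid)    # input gate: how much new information to write
    g = gate("cell", math.tanh)   # candidate values that could be written
    o = gate("output", sigmoid)   # output gate: how much of the cell to expose as h
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def lstm_forward(xs: List[Vector], p: Params, hidden_size: int) -> Tuple[Vector, Vector]:
    """Apply the same cell to a sequence of any length, starting from zero states."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in xs:
        h, c = lstm_step(x, h, c, p)
    return h, c


# Hypothetical hand-set weights (hidden_size=1, input_size=1), for illustration only.
params: Params = {
    "forget": ([[0.0, 0.0]], [10.0]),   # forget gate near 1: keep the cell state
    "input": ([[0.0, 20.0]], [-10.0]),  # opens only when x is about 1 (a "spike")
    "cell": ([[0.0, 0.0]], [1.0]),      # constant candidate value tanh(1)
    "output": ([[0.0, 0.0]], [10.0]),   # output gate near 1: expose the cell
}
h_spike, _ = lstm_forward([[1.0]] + [[0.0]] * 20, params, hidden_size=1)
h_quiet, _ = lstm_forward([[0.0]] * 21, params, hidden_size=1)
print(h_spike, h_quiet)
```

With these weights, a single spike at the first step is written into the cell and is still visible in the hidden state 20 steps later (`h_spike` ends near 0.64), while an all-zero sequence leaves the hidden state near zero. Because the same `lstm_step` is reused at every step, the sequence length is not fixed. A trained network learns such weights from data rather than having them set by hand.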

This side-by-side diagram illustrates the difference between ARIMA and LSTM approaches to time series forecasting. On the left, ARIMA is shown as a traditional statistical model that receives input data and produces a forecast through a linear process. On the right, LSTM is represented as a deep learning model with multiple inputs flowing into a neural cell that handles both short- and long-term dependencies, producing a more adaptive forecast. The visualization highlights the architectural and conceptual contrast between classical and AI-driven forecasting methods.

🏢 LSTM in the Real World

| Industry | LSTM Use Case |
|---|---|
| Retail | Demand forecasting, inventory management |
| Finance | Stock and crypto prediction, anomaly detection |
| Healthcare | Patient vitals monitoring, sepsis forecasting |
| Energy | Load forecasting, equipment failure prediction |
| Supply Chain | Lead time forecasting, delay mitigation |

These are scenarios where sequence matters, and context over time is king.

🧭 What Made LSTM a Workhorse?

- Handles sequences of arbitrary length

- Captures both short- and long-term dependencies

- Trains far more reliably than vanilla RNNs on long sequences, and often beats ARIMA on nonlinear data

- Widely supported in PyTorch, TensorFlow, Keras, and other frameworks
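The advantage over vanilla RNNs traces back to the vanishing-gradient problem LSTMs were built to solve. The following scalar sketch, with hypothetical values (recurrent weight 0.5, forget gates of 0.99, 50 steps), multiplies the per-step derivatives along one gradient path. In a plain RNN, h_t = tanh(w * h_{t-1} + u * x_t), each factor is w * (1 - h_t^2), so the product shrinks geometrically; along the LSTM cell-state path, c_t = f_t * c_{t-1} + i_t * g_t, each factor is just the forget gate f_t, which the network can learn to hold near 1. The sketch deliberately ignores the other routes gradients take through the gates and hidden state, so read it as intuition rather than a full backpropagation analysis.

```python
import math
from typing import List


def rnn_gradient_path(h0: float, xs: List[float], w: float, u: float) -> float:
    """d h_T / d h_0 for a scalar vanilla RNN h_t = tanh(w * h_{t-1} + u * x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)  # chain rule: d tanh(a)/da = 1 - tanh(a)^2
    return grad


def lstm_cell_gradient_path(forget_gates: List[float]) -> float:
    """d c_T / d c_0 along the direct cell-state path only (c_t = f_t * c_{t-1} + i_t * g_t)."""
    grad = 1.0
    for f in forget_gates:
        grad *= f  # each step contributes only its forget-gate value
    return grad


steps = 50
rnn_grad = rnn_gradient_path(h0=0.5, xs=[0.0] * steps, w=0.5, u=1.0)
lstm_grad = lstm_cell_gradient_path([0.99] * steps)
print(f"RNN: {rnn_grad:.2e}  LSTM cell path: {lstm_grad:.3f}")
```

With these numbers the RNN path collapses below 1e-15, while the LSTM cell path keeps about 60% of its signal after 50 steps, which is what lets an LSTM connect an event to an effect many steps later.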

📊 ARIMA vs LSTM: Time Series Forecasting Comparison

| Feature / Aspect | ARIMA | LSTM (Long Short-Term Memory) |
|---|---|---|
| Model Type | Statistical (linear) model | Deep learning (nonlinear) neural network |
| Data Requirements | Univariate series that is stationary after differencing (exogenous inputs need ARIMAX) | Handles non-stationary, multivariate data with complex patterns (input scaling still recommended) |
| Assumptions | Assumes linearity, homoscedasticity, and normally distributed residuals | Few structural assumptions; learns patterns from the data |
| Handles Seasonality | Requires manual decomposition or the SARIMA variant | Learns seasonality implicitly, given input windows long enough to span a cycle |
| Input Representation | Lagged variables | Sequence of observations over time |
| Memory Capability | Limited memory (depends on lag order) | Retains long- and short-term dependencies via gating mechanisms |
| Interpretability | High (coefficients have statistical meaning) | Low (black box; requires explainable-AI (XAI) techniques for interpretation) |
| Computational Cost | Low (efficient to train and run) | High (GPUs recommended for large models and datasets) |
| Scalability | Good for small to medium datasets | Excellent for large datasets and high-dimensional inputs |
| Forecasting Horizon | Typically short- to medium-term | Often applied to medium- to long-term horizons |
| Industry Fit | Finance, economics, government (where transparency is critical) | E-commerce, energy, healthcare, IoT (complex, high-frequency, nonlinear data) |
| Tooling & Libraries | statsmodels, R’s forecast package | TensorFlow, PyTorch, Keras |
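The "Input Representation" and "Interpretability" rows can be made concrete with the simplest ARIMA special case, an AR(1) model. This pure-Python sketch assumes a zero-mean series and fits by ordinary least squares on lagged values (full ARIMA fitting in libraries such as statsmodels is more involved). It builds the lagged-variable design and recovers a single coefficient that reads directly as the share of last period's value carried forward. The toy series and the helper names `lagged_design` and `fit_ar1` are hypothetical.

```python
from typing import List, Tuple


def lagged_design(series: List[float], p: int) -> Tuple[List[List[float]], List[float]]:
    """ARIMA-style representation: each row holds the p previous values [y_{t-1}, ..., y_{t-p}]."""
    X = [[series[t - k] for k in range(1, p + 1)] for t in range(p, len(series))]
    y = series[p:]
    return X, y


def fit_ar1(series: List[float]) -> float:
    """Least-squares estimate of phi in y_t = phi * y_{t-1} (no intercept, no MA terms)."""
    X, y = lagged_design(series, p=1)
    num = sum(row[0] * target for row, target in zip(X, y))
    den = sum(row[0] ** 2 for row in X)
    return num / den


series = [100.0 * 0.8 ** t for t in range(20)]  # toy series following y_t = 0.8 * y_{t-1}
phi = fit_ar1(series)
print(f"phi = {phi:.3f}")  # phi = 0.800
```

The fitted `phi` has a direct statistical reading (80% carry-over per step). An LSTM trained on the same series would instead consume ordered windows of past values, and its learned weights carry no comparably direct meaning.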