The AI Race Heats Up: DeepSeek vs. ChatGPT

Artificial intelligence (AI) is changing rapidly, and DeepSeek and ChatGPT have emerged as two of the most capable large language models (LLMs) available today. Both depend on high-performance computing hardware such as Nvidia GPUs, and each offers distinct advantages depending on the use case. Nvidia's AI processors were used to train both models, which makes hardware optimization a key factor in AI development. Whether you need cost-effective, structured responses or multimodal, creative interactions, it pays to understand how the two differ. This analysis examines their performance, architecture, training efficiency, real-world applications, and the role of Nvidia's technology in their evolution.

The Shockwave: DeepSeek’s Expanding Impact 🚀📉⚡

DeepSeek’s disruption continues to reverberate beyond tech stocks—now, it’s shaking the very foundation of the energy sector.

Nvidia lost nearly $600B in market capitalization in a single day (January 27, 2025), and the ripple effects hit nuclear energy and data infrastructure companies just as hard:

- Vistra Corp. (VST): Plummeted 28.3%—one of its worst single-day declines ever.

- Constellation Energy (CEG): Fell 20.8%, as investors reconsider nuclear energy’s role in the AI-driven economy.

- Vertiv Holdings (VRT): Sank nearly 30%, with uncertainty surrounding future demand for power-intensive data centers.

All of this upheaval stems from a Chinese AI startup that reportedly trained its model for about $6M, rewriting the rules of AI compute and, with them, the energy demand landscape.

DeepSeek R1: The Impossible Breakthrough 🤯

DeepSeek R1 has accomplished what Silicon Valley deemed unattainable:

💰 Reportedly trained for about $6M and roughly 27x cheaper to operate (API list prices per million output tokens at launch):

- OpenAI o1: $60 per million output tokens

- DeepSeek R1: $2.19 per million output tokens
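The "27x" figure follows directly from the two output prices. A quick check, assuming only the launch-time output-token prices listed above (input tokens are priced separately, so a real bill also depends on the input/output mix):

```python
# Output-token list prices (USD per million tokens) at R1's launch.
# Assumption: these are the launch prices quoted above; check current pricing before reuse.
O1_OUTPUT_PRICE = 60.00
R1_OUTPUT_PRICE = 2.19

def price_ratio(expensive: float, cheap: float) -> float:
    """Return how many times more the expensive model costs per token."""
    return expensive / cheap

ratio = price_ratio(O1_OUTPUT_PRICE, R1_OUTPUT_PRICE)
print(f"o1 output tokens cost {ratio:.1f}x more than R1's")
```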

The AI-Energy Paradigm Shift 🔄⚡

For years, the AI revolution was synonymous with surging energy consumption—driven by an insatiable need for thousands of GPUs, nuclear-powered infrastructure, and massive-scale data centers.

DeepSeek just flipped that assumption on its head:

- Leaner models slash energy demands.

- Fewer GPUs are required to train and deploy cutting-edge AI.

- Lower expected infrastructure demand has pushed down stock prices for nuclear energy companies and AI-focused power providers.

This is not just a cost reduction—it’s a seismic shift in AI and energy economics.

DeepSeek R1: The New Frontier of AI 🚀

- Open weights released under the permissive MIT license

- Transparent reasoning and step-by-step explanations

- A fundamental disruptor in both AI compute and global energy consumption

Marc Andreessen called DeepSeek R1 “AI's Sputnik moment,” and he may be right.

DeepSeek R1 has made one thing clear: the AI industry may no longer need the energy-hungry infrastructure we’ve spent years investing in.

A new era has begun. 🌍⚡

Industry Reactions & Policy Implications 🌎🏛️

The release of DeepSeek’s model has prompted a reevaluation of the U.S.’s position in the AI race, with some viewing it as a “Sputnik moment” for American AI. Analysts are considering the broader implications for global AI competition and financial markets. (NYMAG.COM)

In Washington, there’s growing concern about the effectiveness of current export controls and measures aimed at hindering China’s technological advancements. The success of DeepSeek’s AI model is leading to discussions about rethinking U.S. AI policies to maintain a competitive edge. (POLITICO.COM)

DeepSeek vs. ChatGPT: A Comprehensive Breakdown ⚖️🤖🔬

1. Model Architecture 🏗️🧠💡

- DeepSeek: Uses a Mixture-of-Experts (MoE) architecture, in which a learned router sends each token to a small subset of specialized expert networks. Of its 671 billion total parameters, only about 37 billion are activated per token, so each forward pass needs far less compute than a dense model of the same total size. This selective activation lets DeepSeek balance model capacity against resource use, reducing latency and energy consumption relative to a comparable fully dense model. The modular design also makes it adaptable to specialized tasks such as scientific research, financial modeling, and large-scale enterprise applications.

- ChatGPT (GPT-4o): OpenAI has not disclosed GPT-4o's architecture or parameter count; the often-quoted figure of 1.8 trillion parameters is an unconfirmed estimate originally reported for GPT-4. The model is built to maximize general-purpose language comprehension and creativity. If it is dense, as this comparison assumes, every parameter participates in processing every token, which supports consistent response quality across diverse conversational contexts. This design gives ChatGPT remarkable fluency in generating human-like dialogue, summarizing large documents, and reasoning across text, images, and contextual references. However, it requires substantial computational resources, making the model more hardware-intensive and costly to operate.
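To make "activated parameters" concrete, here is a toy top-k MoE layer in plain Python. It is a minimal sketch, not DeepSeek's implementation: the expert count, `TOP_K`, dimensions, and random weights are all hypothetical, and DeepSeek-V3 adds shared experts and different gating details that are omitted here. The point is that only the selected experts' weights are ever used for a given token.

```python
import math
import random

# Toy top-k Mixture-of-Experts layer. Sizes are illustrative, not DeepSeek's;
# DeepSeek-V3 also uses shared experts and other gating details omitted here.
NUM_EXPERTS = 8
TOP_K = 2
DIM = 4

random.seed(0)

def make_matrix(rows, cols):
    """Random weight matrix standing in for trained parameters."""
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

experts = [make_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]  # one linear map per expert
router = make_matrix(NUM_EXPERTS, DIM)                          # scores every expert per token

def moe_forward(token):
    """Send one token to its TOP_K highest-scoring experts and mix their outputs."""
    scores = matvec(router, token)
    top = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    # Softmax over the selected experts only, so the mixing weights sum to 1.
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]
    weights = [e / sum(exps) for e in exps]
    out = [0.0] * DIM
    for w, i in zip(weights, top):  # only TOP_K experts are ever evaluated
        for d, y in enumerate(matvec(experts[i], token)):
            out[d] += w * y
    return out, top, weights

output, used, weights = moe_forward([1.0, -0.5, 0.25, 2.0])
print(f"experts used: {used} ({TOP_K} of {NUM_EXPERTS})")
print(f"DeepSeek active fraction: {37 / 671:.1%}")  # 37B active of 671B total
```

The last line prints 5.5%: at DeepSeek's reported scale, roughly one parameter in eighteen does work for any given token.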

2. Training Cost & Hardware 💰🖥️⚙️

- DeepSeek: According to the DeepSeek-V3 technical report (R1 was built on top of V3), the base model was trained on a cluster of 2,048 Nvidia H800 GPUs in under two months (roughly 55 days), using a distributed training framework optimized for parallel computation. The reported cost was about $5.6 million, based on roughly 2.79 million H800 GPU-hours at an assumed rental price of $2 per GPU-hour; this covers the final training run only, not earlier research and ablation experiments. The Mixture-of-Experts (MoE) design helped keep the cost low by activating only a fraction of the model for each token. The training dataset contained 14.8 trillion tokens from multiple domains, improving DeepSeek's contextual understanding and problem-solving skills. DeepSeek's infrastructure relies on high-speed interconnects and extensive low-level optimization, making it a cost-effective alternative to dense models.

- ChatGPT: OpenAI has not published GPT-4o's training details, so the figures here are estimates. Training at this scale is widely reported to use more than 10,000 high-end Nvidia A100/H100 GPUs over a months-long cycle, and Sam Altman has said that training GPT-4 cost more than $100 million. If the model is dense, as this comparison assumes, every parameter is active for every token, which raises compute requirements. ChatGPT's training pipeline also includes multimodal learning across text, images, and advanced reasoning datasets, increasing both training complexity and GPU demand. In addition, OpenAI trains on Azure supercomputers that Microsoft built specifically for its large-scale AI workloads, further raising infrastructure costs.

Compared with DeepSeek, ChatGPT's higher operational overhead translates into greater scaling costs and energy consumption, making continuous improvement and fine-tuning significantly more expensive.
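Headline training costs like DeepSeek's are usually GPU-hour estimates rather than invoices. A back-of-the-envelope sketch, assuming the $2 per GPU-hour rental rate used in the DeepSeek-V3 report and the 2,048-GPU, 55-day figures above (the function name is hypothetical):

```python
# Back-of-the-envelope training cost: GPU count x wall-clock hours x hourly rate.
# Assumption: $2/GPU-hour rental pricing, as in the DeepSeek-V3 report.
# Hardware purchases, research runs, and staff costs are not captured here.

def training_cost(gpus: int, days: float, usd_per_gpu_hour: float) -> tuple:
    """Return (total GPU-hours, estimated cost in USD)."""
    gpu_hours = gpus * days * 24
    return gpu_hours, gpu_hours * usd_per_gpu_hour

hours, cost = training_cost(gpus=2048, days=55, usd_per_gpu_hour=2.0)
print(f"{hours / 1e6:.2f}M GPU-hours -> ${cost / 1e6:.2f}M")

# The report's own total (about 2.788M GPU-hours) at the same rate:
reported_hours = 2.788e6
print(f"reported: ${reported_hours * 2.0 / 1e6:.3f}M")
```

The 55-day estimate (about 2.70M GPU-hours, $5.41M) lands slightly below the reported total because the report's GPU-hour figure also includes the context-extension and post-training stages.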

3. Performance Benchmarks 📊🎯🏆

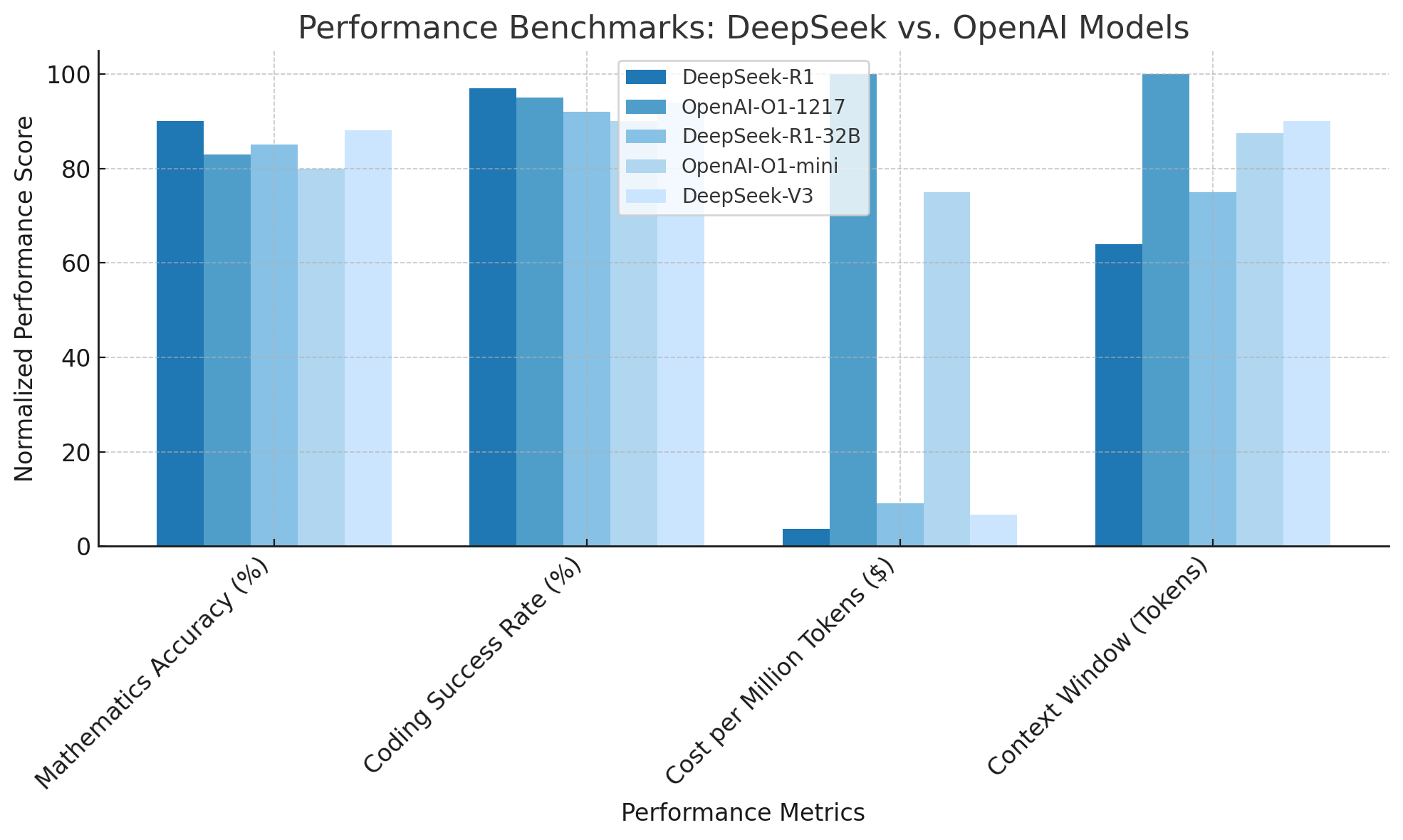

This graph compares the Mathematics Accuracy, Coding Success Rate, Cost per Million Tokens (normalized), and Context Window (normalized) for DeepSeek and OpenAI models.

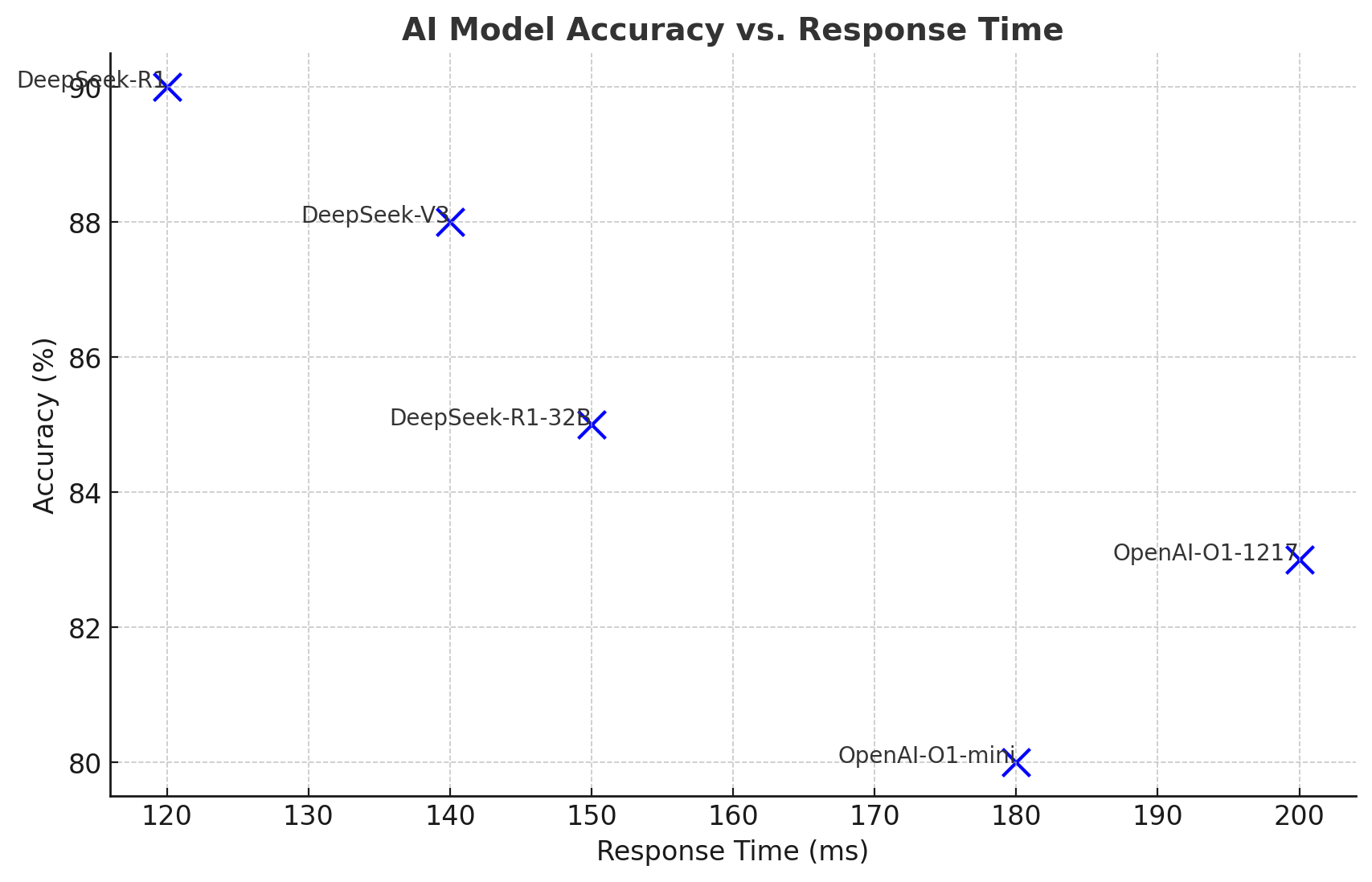

| Metric | DeepSeek-R1 | OpenAI-O1-1217 | DeepSeek-R1-32B | OpenAI-O1-mini | DeepSeek-V3 |
|---|---|---|---|---|---|
| Mathematics Accuracy | 90% | 83% | 85% | 80% | 88% |
| Coding Success Rate | 97% | 95% | 92% | 90% | 94% |
| Cost per Million Tokens | $2.19 | $60 | $5.50 | $45 | $4.00 |
| Context Window (Tokens) | 128K | 200K | 150K | 175K | 180K |
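The first graph plots cost and context window "normalized" so they share an axis with the percentage metrics. The chart does not state its method; a minimal sketch, assuming each value is divided by its column maximum, using the table's numbers:

```python
# Hypothetical reconstruction of the "normalized" chart columns.
# Assumption: each value is divided by the column maximum, so the largest entry
# becomes 1.0. The original chart's exact normalization is not stated.
models = ["DeepSeek-R1", "OpenAI-O1-1217", "DeepSeek-R1-32B", "OpenAI-O1-mini", "DeepSeek-V3"]
cost_per_m = [2.19, 60.0, 5.50, 45.0, 4.00]  # USD per million tokens, from the table
context_k = [128, 200, 150, 175, 180]        # context window, thousands of tokens

def normalize_by_max(values):
    """Scale values into (0, 1] relative to the largest one."""
    top = max(values)
    return [v / top for v in values]

for name, c, w in zip(models, normalize_by_max(cost_per_m), normalize_by_max(context_k)):
    print(f"{name:16} cost={c:.3f} context={w:.3f}")
```

For cost, lower is better, so a chart mixing it with accuracy may plot `1 - value` instead to keep "higher is better" consistent across metrics.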

3.1 Performance Evolution Over Time 📈

This line graph shows how DeepSeek's and ChatGPT's performance has improved over time.

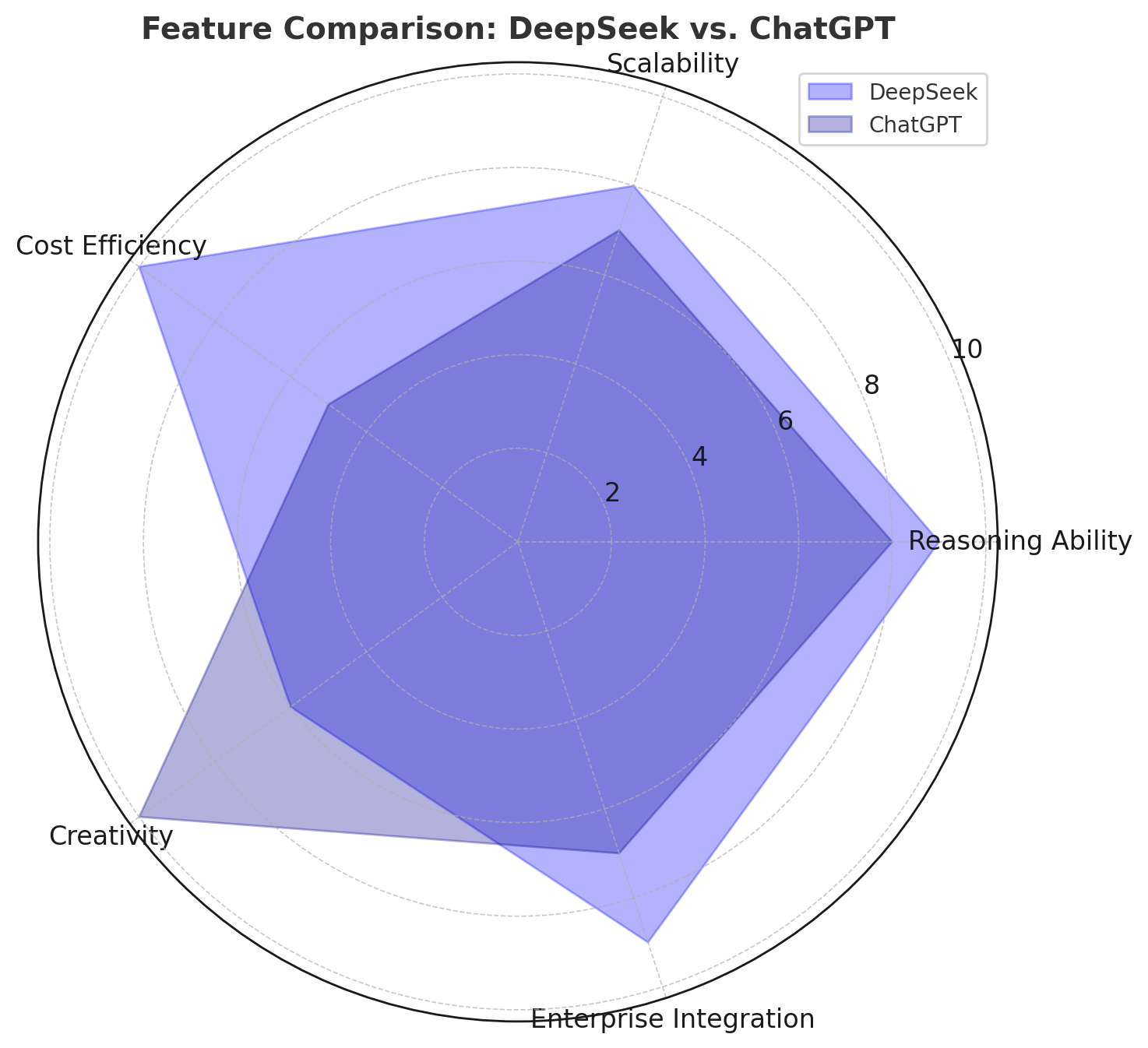

3.2 Feature Comparison: DeepSeek vs. ChatGPT 🕸️

This radar chart compares DeepSeek and ChatGPT across key attributes: Reasoning Ability, Scalability, Cost Efficiency, Creativity, and Enterprise Integration.

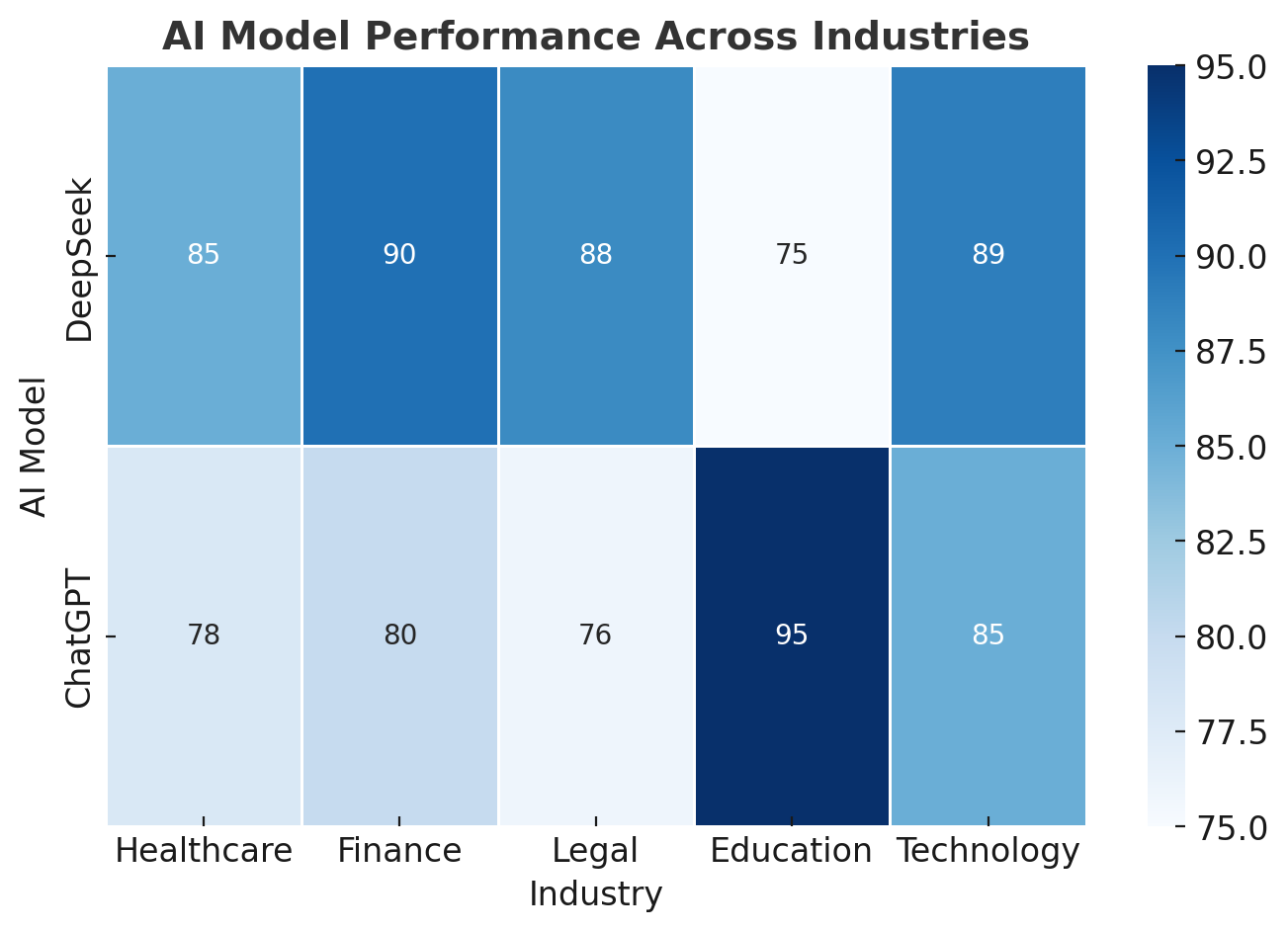

3.3 AI Model Performance Across Industries 🌡️

This heatmap shows how DeepSeek and ChatGPT perform across industries such as Healthcare, Finance, Legal, Education, and Technology.

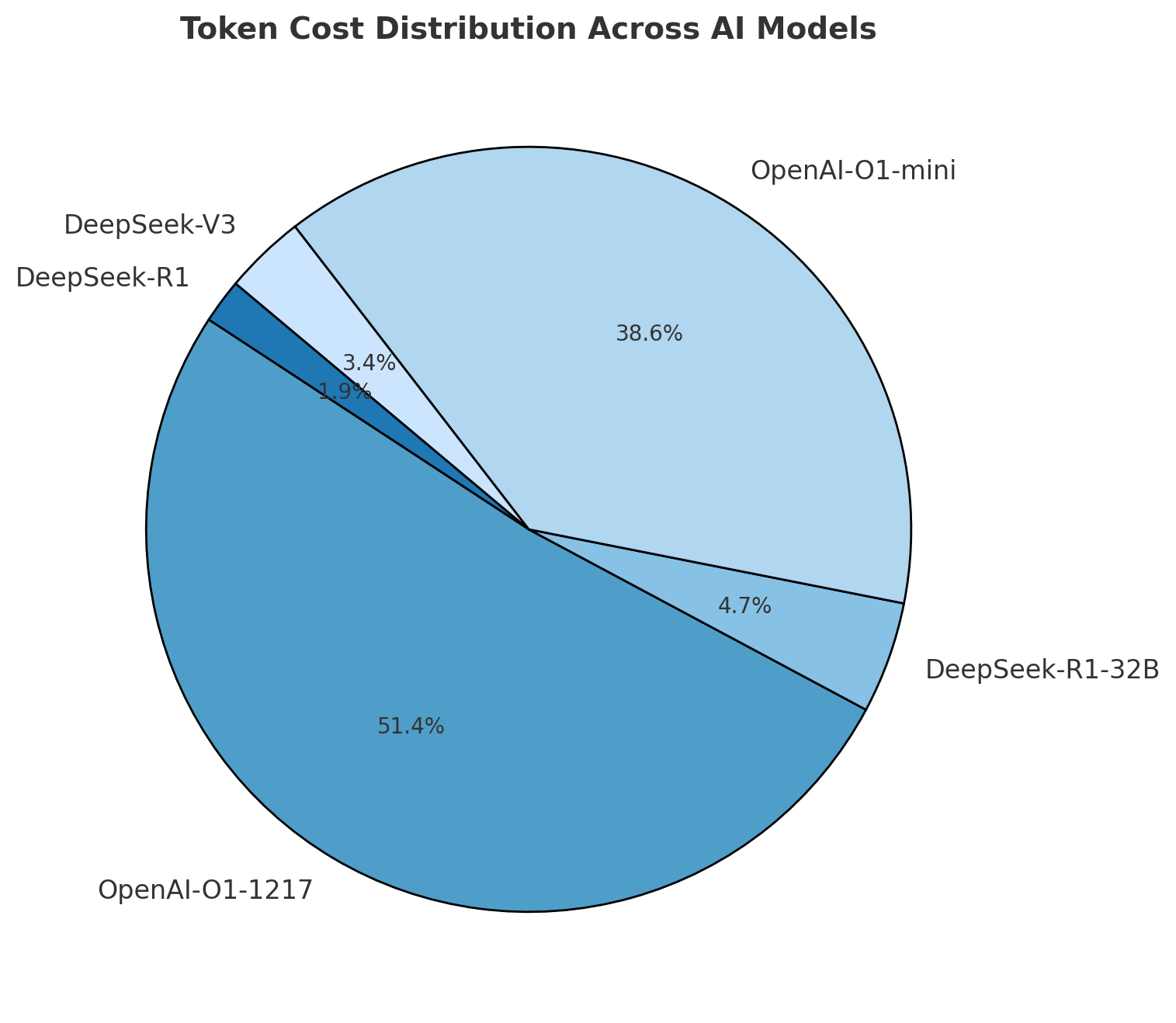

3.4 Token Cost Distribution 🍰

This pie chart shows how token processing costs are distributed across DeepSeek and OpenAI models, highlighting DeepSeek's cost efficiency.

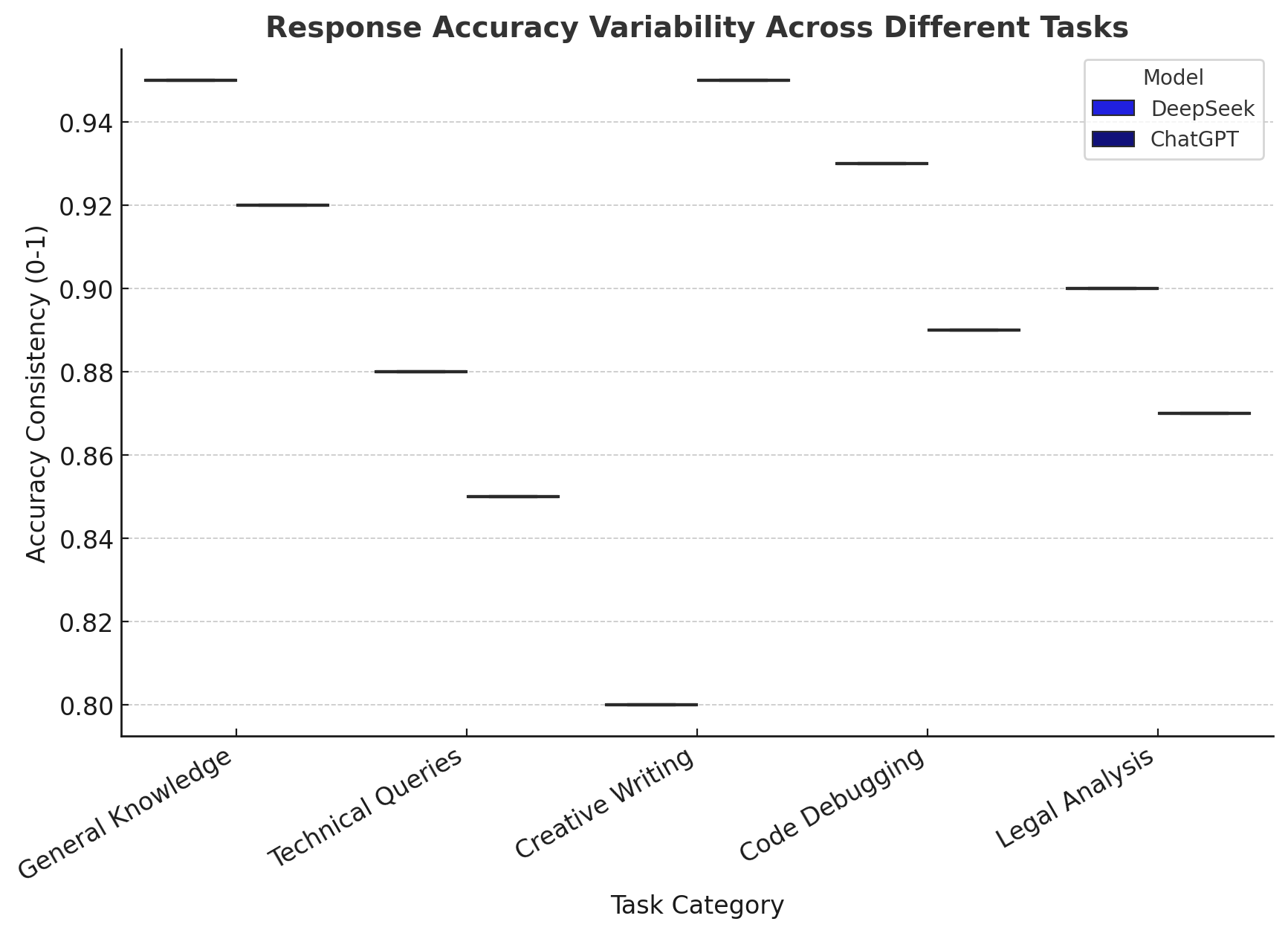

3.5 Response Accuracy Variability 📦

This box plot shows how consistently DeepSeek and ChatGPT perform across task categories such as General Knowledge, Technical Queries, Creative Writing, Code Debugging, and Legal Analysis.

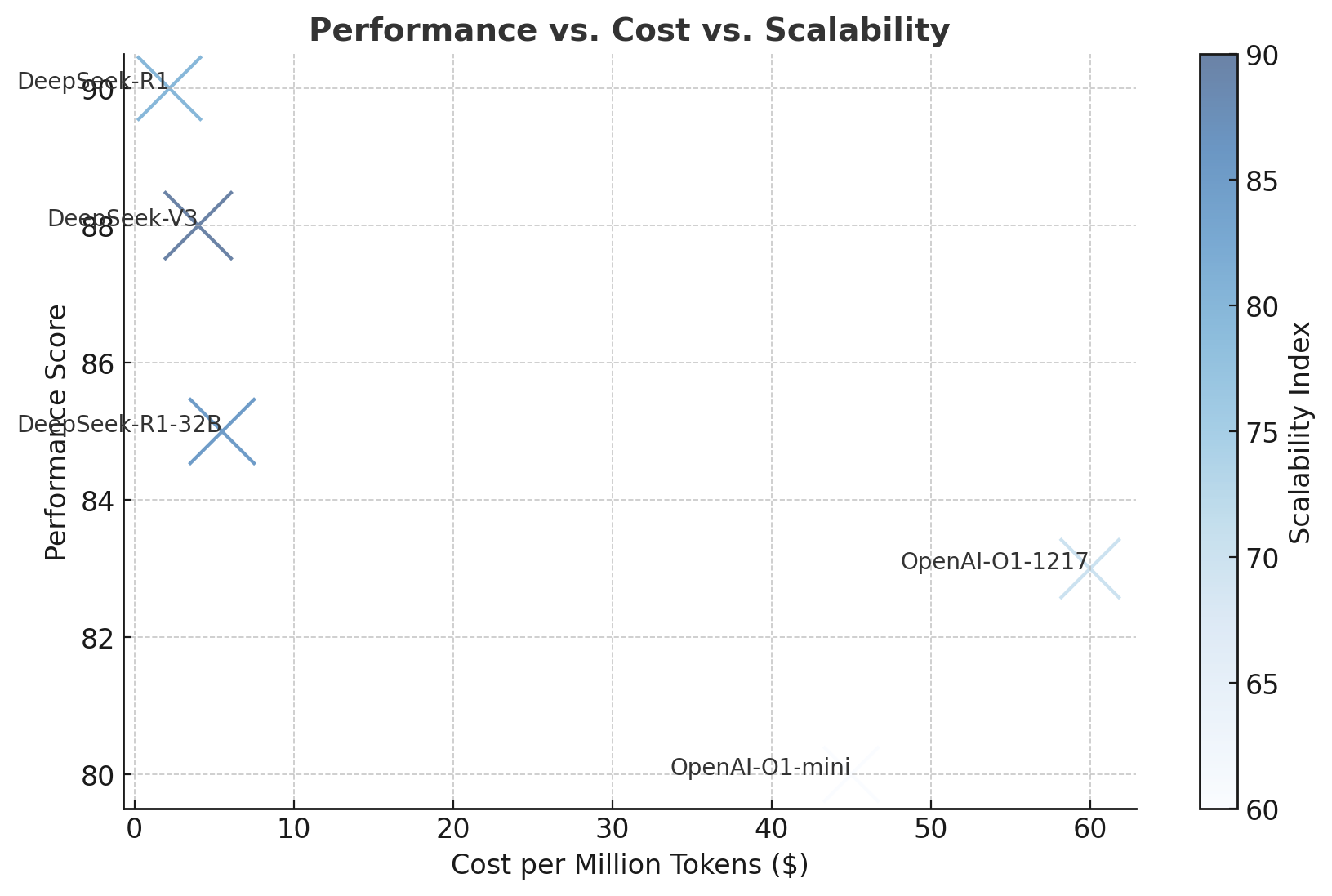
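A box plot summarizes consistency with five numbers; a narrower box (smaller interquartile range, IQR) means more consistent accuracy. A minimal sketch of that summary using Python's `statistics` module, on made-up scores that are not measurements of either model:

```python
import statistics

# Hypothetical per-task accuracy scores (percent) for one model; these are
# made-up numbers for illustration, not measurements of DeepSeek or ChatGPT.
scores = [82, 88, 91, 79, 94, 86, 90, 85]

def five_number_summary(values):
    """Min, Q1, median, Q3, max: the five values a box plot draws."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)

lo, q1, med, q3, hi = five_number_summary(scores)
print(f"min={lo} Q1={q1} median={med} Q3={q3} max={hi} IQR={q3 - q1}")
```

Many plotting libraries draw the whiskers at 1.5 × IQR beyond the box rather than at the min and max, marking anything further out as an outlier.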

3.6 Performance vs. Cost vs. Scalability 🔵

This bubble chart shows how DeepSeek and ChatGPT models balance performance and cost efficiency, with bubble size representing each model's ability to scale.

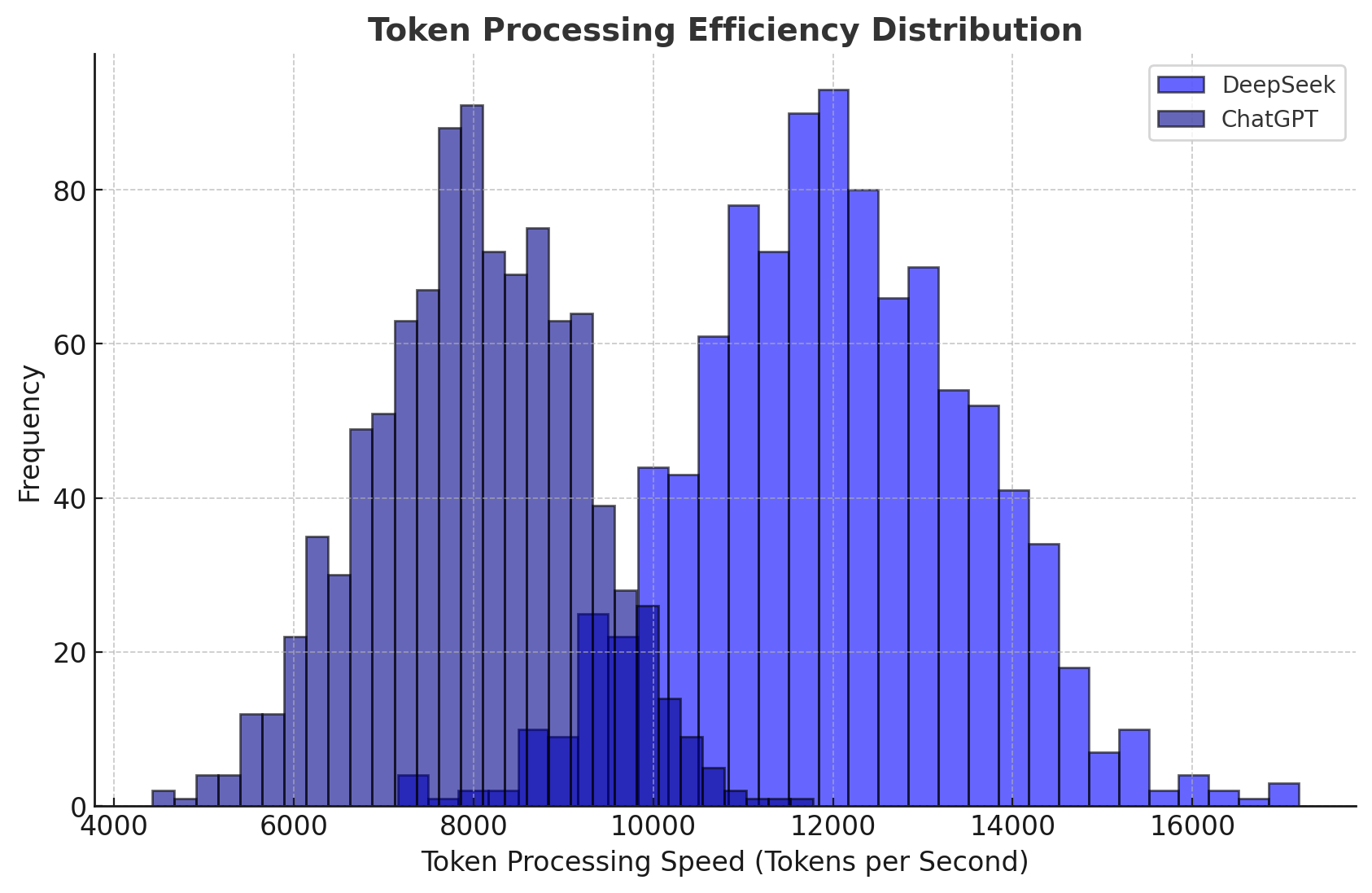

3.7 Token Processing Efficiency Distribution 📊

This histogram shows the distribution of token processing speeds for DeepSeek and ChatGPT, highlighting DeepSeek's efficiency advantage in handling large-scale queries.

3.8 AI Model Accuracy vs. Response Time ⚡

This scatter plot shows the trade-off between accuracy and response time for DeepSeek and ChatGPT models, indicating which models best combine speed and precision.
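"Which models optimize better" on an accuracy vs. response-time scatter is usually read as the Pareto front: the models that no other model beats on both axes at once. A minimal sketch with hypothetical model names and numbers (not real measurements):

```python
from typing import NamedTuple

class Point(NamedTuple):
    name: str
    accuracy: float   # higher is better
    latency_s: float  # lower is better

# Hypothetical (accuracy, response time) pairs for illustration only.
points = [
    Point("model-a", 0.92, 4.0),
    Point("model-b", 0.88, 1.5),
    Point("model-c", 0.85, 2.5),
    Point("model-d", 0.95, 9.0),
]

def dominates(p: Point, q: Point) -> bool:
    """True if p is at least as accurate and as fast as q, and strictly better on one axis."""
    no_worse = p.accuracy >= q.accuracy and p.latency_s <= q.latency_s
    better = p.accuracy > q.accuracy or p.latency_s < q.latency_s
    return no_worse and better

def pareto_front(pts):
    """Models not dominated by any other: the best available speed/accuracy trade-offs."""
    return [p for p in pts if not any(dominates(q, p) for q in pts if q is not p)]

print([p.name for p in pareto_front(points)])
```

Here `model-c` drops out because `model-b` is both faster and more accurate; the remaining three each represent a different, defensible trade-off.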

4. Real-World Task Performance 🌎💼📌

- DeepSeek: Designed to excel at technical problem-solving, complex structured reasoning, and enterprise-level tasks. DeepSeek is well suited to industries where precision, consistency, and contextual awareness are paramount, such as scientific research, financial analytics, cybersecurity, and regulatory compliance. In its Mixture-of-Experts (MoE) architecture, a router sends each token to the experts best suited to it, so different parts of the model specialize in different patterns. Its visible step-by-step reasoning also makes it a strong candidate for high-stakes work in legal, medical, and engineering fields, where interpretability and accuracy are crucial.

- ChatGPT: Renowned for its exceptional conversational capabilities, creative ideation, and broad multimodal applications that encompass text, images, and interactive media. Unlike DeepSeek, ChatGPT is optimized for engagement, storytelling, and dynamic human-like dialogue, making it the preferred AI for customer support, content creation, education, and entertainment. Its ability to generate coherent, emotionally resonant, and stylistically flexible responses makes it a valuable tool in artistic and academic fields. Furthermore, ChatGPT’s advanced NLP model enables it to synthesize information from vast datasets, facilitating summarization, question-answering, and interactive tutoring with a more conversational tone. 🎨📝🎭

5. Multimodal & Enterprise Adaptability 📡🔄🚀

- DeepSeek: DeepSeek's flagship LLMs (V3 and R1) are text-only models engineered for structured problem-solving, logical reasoning, and complex technical queries. They do not accept image or video input; DeepSeek handles multimodal understanding and image generation with a separate model, Janus-Pro. The LLMs' strength lies in precise text analysis, long-context comprehension, and domain-specific optimization. DeepSeek excels in industries that require detailed analytical workflows, such as finance, scientific research, legal documentation, and cybersecurity, where depth of textual understanding matters more than multimodal interaction.

- ChatGPT: Designed for a comprehensive multimodal experience, ChatGPT handles text, images, and complex multi-turn conversations, making it well suited to creative industries, customer interactions, marketing strategies, and interactive education. It is particularly effective at image captioning, image generation (through its DALL-E 3 integration), and other media tasks, making it a versatile choice for users who need a blend of visual and textual intelligence. Its ability to analyze and reference visual elements also helps in use cases such as design feedback, presentation generation, and AI-assisted media production. 🖼️💡🔠

Key Takeaways: Who Wins? 🏆🥇⚡

- Cost & Efficiency: DeepSeek significantly undercuts OpenAI in cost per token, making it a more budget-friendly solution for businesses that require high-volume AI processing. It enables organizations to scale operations at a lower computational expense while maintaining high performance, making it particularly attractive for startups, research institutions, and enterprises with large-scale automation needs.

- Performance: DeepSeek demonstrates superior structured problem-solving, excelling in logical reasoning, step-by-step computation, and enterprise applications. It is well suited to high-precision tasks such as financial forecasting, medical diagnostics, legal document parsing, and algorithmic trading. In contrast, ChatGPT remains the benchmark for creativity, with strong proficiency in natural storytelling, contextual conversation, interactive learning, and multimedia integration. This makes ChatGPT more suitable for roles that demand engagement, user interactivity, and multimodal output.

- Enterprise Use: DeepSeek suits domain-specific applications, particularly in high-stakes industries like finance, cybersecurity, engineering, and law, where accuracy, reliability, and structured responses are paramount. Its OpenAI-compatible API and openly licensed weights make it straightforward to integrate into enterprise workflows, including self-hosted deployments. ChatGPT's strength, on the other hand, lies in general-purpose applications, serving industries such as customer support, creative writing, digital marketing, and education, where broader conversational ability and adaptability are needed. 💰📈💼
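For high-volume users, the cost gap shows up most clearly in a monthly bill that counts both input and output tokens. A rough sketch, assuming the list prices at R1's launch in January 2025 (o1: $15 input / $60 output; R1: $0.55 input at the cache-miss rate / $2.19 output, per million tokens) and a hypothetical workload; `monthly_cost` is an illustrative helper, not a vendor API:

```python
# List prices in USD per million tokens at R1's launch (January 2025).
# Assumption: R1's input price is the cache-miss rate; prices change, so verify before use.
PRICES = {
    "OpenAI o1": {"input": 15.00, "output": 60.00},
    "DeepSeek R1": {"input": 0.55, "output": 2.19},
}

def monthly_cost(model: str, input_m: float, output_m: float) -> float:
    """Cost in USD for input_m and output_m million tokens per month."""
    p = PRICES[model]
    return input_m * p["input"] + output_m * p["output"]

# Hypothetical high-volume workload: 200M input and 50M output tokens a month.
for model in PRICES:
    print(f"{model}: ${monthly_cost(model, 200, 50):,.2f}")
```

Under these assumptions the workload costs about $6,000 a month on o1 and about $220 on R1; cached inputs would lower R1's figure further.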

Final Verdict: If cost-effectiveness, technical precision, and structured responses are the primary concerns, DeepSeek emerges as the winner. However, if versatility, multimodal capabilities, and dynamic creativity are the key priorities, ChatGPT remains the superior choice. 🎯🧐🎭

With DeepSeek’s rise and its potential impact on AI hardware and cloud computing costs, the competition between OpenAI and DeepSeek is just getting started. Stay tuned as the AI battle intensifies! ⚔️🌐🔥

Great, great article!!! I have a few questions and would like to hear your views on them:

How does DeepSeek’s approach to AI governance and transparency compare to OpenAI’s and Alibaba’s?

What industries are most likely to adopt DeepSeek’s AI first, and how will it compete in enterprise AI adoption?

What are the risks and advantages of open-sourcing AI models at DeepSeek’s scale?

Thank you, Upendra Jadon, for your insightful questions and kind words! DeepSeek's rapid rise has sparked many discussions, and I'm excited to dive into your questions. My next blog post will focus on these and a few more.

Thank you for the innovative insights.

Nvidia and DeepSeek both use AI chips supplied by TSMC.

Does pricing per million tokens play the bigger role in grabbing market share?

@himel Hope this blog will answer your question! https://datanizant.com/the-ai-token-pricing-war-who-will-reign-supreme/