Unlocking Large Language Models: The Game-Changing Powerhouse of Modern NLP

Introduction

Large Language Models (LLMs) are revolutionizing Natural Language Processing (NLP), enabling machines to generate and interpret human language with unprecedented accuracy and creativity. But what are LLMs, and how do they differ from traditional NLP? This blog will guide you through the essentials of NLP and LLMs, explain why LLMs are gaining popularity, and even show you how to create a simple, data-driven AI tool on your Mac.

Whether you’re a tech enthusiast or an AI professional, this guide will help you understand and leverage the transformative power of LLMs.

1. What is NLP, and Why is it Used?

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) focused on enabling computers to understand, interpret, and generate human language. NLP combines linguistic insights with machine learning techniques, empowering applications that require natural language interaction.

Key Uses of NLP

NLP applications are fundamental across industries, helping businesses understand, analyze, and respond to language-based data in the following ways:

- Text Analysis: NLP tools assess content to extract sentiment, topics, and intent in real time. For instance, sentiment analysis helps companies understand customer satisfaction trends by analyzing reviews and social media.

- Machine Translation: NLP powers translation systems like Google Translate, breaking down language barriers for seamless communication.

- Chatbots and Virtual Assistants: NLP enables chatbots to understand user intent and provide natural, conversational responses, enhancing customer support and engagement.

- Speech Recognition and Summarization: NLP applications, like Siri and Alexa, recognize spoken language, while summarization models condense lengthy texts into concise summaries.

In short, NLP facilitates human-computer interaction, making language data accessible and actionable across fields.

2. What is a Large Language Model (LLM), and Why is it Used?

Large Language Models (LLMs) are advanced NLP models built on the Transformer architecture, which allows them to understand and generate high-quality, human-like text. They contain hundreds of millions to billions of parameters and are trained on vast datasets, which lets them capture intricate patterns in language.

Why LLMs Are Used

LLMs are transforming the NLP landscape due to their versatility and depth of language understanding. They are widely used for:

- Complex Content Generation: LLMs can write essays, reports, and even creative content like poetry. For instance, businesses use LLMs to create marketing copy and social media content tailored to specific audiences.

- Customer Support Automation: LLMs can analyze and respond to complex customer queries, enhancing service speed and personalization.

- Q&A and Data Summarization: LLMs can analyze and condense documents, providing concise answers and summaries that make information retrieval efficient.

- Personalized Learning and Tutoring: LLMs are being used in education to answer student questions, explain concepts, and adapt responses based on student needs.

LLMs stand out because they are generalists—capable of handling diverse language tasks without requiring extensive retraining.

3. NLP vs. LLMs: Similarities and Differences

| Aspect | NLP | LLM |
|---|---|---|
| Core Goal | Enable machines to understand human language | Use advanced language models to generate and interpret human-like text |
| Scale | Typically smaller, task-specific models | Very large models with billions of parameters |
| Architecture | Diverse (e.g., RNNs, CNNs, etc.) | Primarily based on Transformer architecture |
| Training Data | Often focused, task-specific datasets | Extensive and diverse datasets for broad knowledge |
| Purpose | Typically designed for specific tasks | Designed to handle a wide range of tasks |
| Flexibility | Often retrained for new tasks | Multi-functional without retraining |

Similarities: Both NLP and LLMs are dedicated to processing and understanding language data.

Differences: LLMs are distinguished by their size, versatility, and Transformer architecture. While traditional NLP models are smaller and more task-specific, LLMs can handle a variety of tasks without retraining, making them incredibly adaptable.

Popularity of LLMs

LLMs are more popular today because of their strong performance, versatility, and accessibility. Well-known models such as GPT-4 (available through an API) and openly released models such as BERT and T5 have fueled their adoption, allowing companies to quickly deploy them for diverse applications.

4. Setting Up and Using an LLM on Your Mac

Choosing an LLM

For local experimentation, choose an open-source model that’s manageable on consumer hardware. Recommended options include:

- GPT-Neo and GPT-J (EleutherAI): Open-source alternatives to GPT-3, suitable for a variety of language tasks.

- LLaMA (Meta): Research-oriented and ideal for experimentation with lighter tasks.

- DistilBERT and MiniLM: Distilled versions of BERT, perfect for quick NLP applications on limited resources.

Setting Up Hugging Face Transformers on Your Mac

To run an LLM locally, you’ll need Python and libraries like Hugging Face’s transformers and torch. Here’s a quick setup guide:

- Install Libraries:

- Load a Pre-Trained Model: Here’s an example with GPT-Neo for text generation:

- Using the Model for a Data-Driven Task: Suppose you want to summarize customer reviews. You can input the reviews and use the model to generate summaries:
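Here is a minimal sketch of those three steps. It assumes you have run `pip install transformers torch`, and it uses the small `EleutherAI/gpt-neo-125m` checkpoint so it fits comfortably on a laptop. The function names and the prompt wording are illustrative choices, not a fixed recipe.

```python
# Install first (in a terminal): pip install transformers torch

def build_summary_prompt(reviews, max_reviews=5):
    """Format a handful of reviews into one prompt for a text-generation model."""
    selected = [r.strip() for r in reviews if r.strip()][:max_reviews]
    bullet_list = "\n".join(f"- {r}" for r in selected)
    return f"Customer reviews:\n{bullet_list}\n\nSummary of the reviews:"


def load_generator(model_name="EleutherAI/gpt-neo-125m"):
    """Load a pre-trained text-generation pipeline (downloads weights on first use)."""
    from transformers import pipeline  # imported lazily so the helpers work without it
    return pipeline("text-generation", model=model_name)


def summarize_reviews(reviews, generator, max_new_tokens=60):
    """Ask the model to continue the prompt and return only the continuation."""
    prompt = build_summary_prompt(reviews)
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    generated = outputs[0]["generated_text"]
    # By default the pipeline returns the prompt followed by the new text.
    return generated[len(prompt):].strip()
```

To try it, call `summarize_reviews(["Great battery life", "Screen scratches easily"], load_generator())`. A base model this small follows instructions only loosely, so treat the output as a starting point and expect better summaries from a dedicated summarization model.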

5. Practical Applications for LLMs on a Local Machine

With a setup like this, here are some practical applications you can explore on your Mac:

- Customer Feedback Analysis: Analyze sentiment in customer feedback by summarizing reviews or using a sentiment analysis pipeline. This helps businesses quickly gauge customer satisfaction and identify areas for improvement.

- Educational Content Summarization: Summarize lengthy documents or educational materials, making it easier for students and teachers to digest complex information.

- Q&A Systems for Knowledge Bases: Build a Q&A tool for internal documents, allowing employees to get answers to common questions without extensive searching.

- Content Creation for Blogs or Marketing: Generate ideas, drafts, or full posts by giving the LLM prompts related to your target audience. This can save considerable time for content marketers and bloggers.

Datasets

There are several sources where you can find datasets suitable for training and testing a customer feedback analysis application. Here are some reliable options, including Kaggle and other platforms:

1. Kaggle Datasets

Kaggle offers a wide range of datasets that can be used for customer feedback analysis, sentiment analysis, and text summarization. Here are some popular ones:

- Amazon Customer Reviews: Kaggle hosts datasets with Amazon product reviews, which include text, ratings, and helpfulness scores. Amazon Product Reviews Dataset on Kaggle

- Yelp Reviews: A comprehensive dataset containing reviews, ratings, and additional metadata from Yelp. It’s excellent for sentiment and summarization tasks. Yelp Dataset on Kaggle

- IMDB Movie Reviews: Contains sentiment-labeled movie reviews, which can also be applied to test sentiment analysis models. IMDB Movie Reviews

2. UCI Machine Learning Repository

The UCI Machine Learning Repository also hosts some customer review and sentiment datasets. These datasets are generally well-documented and used widely in research.

- Online Retail Dataset: This dataset records e-commerce transactions (invoices, products, quantities, and prices) rather than review text, so it is best used alongside a review dataset, for example to relate purchase behavior to sentiment. Online Retail Data Set

3. Google Dataset Search

Google Dataset Search is a search engine for publicly available datasets across various domains. You can search for terms like “customer reviews dataset,” “sentiment analysis data,” or “product reviews.” Google Dataset Search

4. Hugging Face Datasets

The Hugging Face Datasets Hub is another excellent source, particularly for NLP tasks. Hugging Face hosts datasets specifically curated for NLP tasks, including sentiment analysis and summarization.

- Amazon Reviews (multi-lingual): This dataset includes reviews from Amazon in multiple languages, which can be helpful for multilingual sentiment analysis.

- Rotten Tomatoes Movie Reviews: Contains sentiment-labeled movie reviews that can be used to test the sentiment analysis model. Hugging Face Datasets Hub

5. Custom Data Collection

If you need a highly specific dataset, you can consider:

- Web Scraping: Use tools like BeautifulSoup or Scrapy to scrape customer reviews from public e-commerce or review sites.

- APIs: Many platforms (e.g., Twitter, Yelp) offer APIs that allow you to collect customer feedback data. However, ensure you comply with each platform’s usage policies and rate limits.

Using These Datasets

- Download the Dataset: Download the chosen dataset and place it in the data/ directory of your project.

- Data Preprocessing: Clean and preprocess the data by selecting the review text and, if available, sentiment labels. Ensure the data is compatible with the preprocessing steps in your application.

- Fine-tuning or Testing: Use the labeled data to fine-tune your sentiment analysis model or validate the performance of the summarization model.

By using publicly available datasets, you can avoid the need to manually collect and label customer reviews, which accelerates the process of building and testing your customer feedback analysis application.

5.1 Customer Feedback Analysis Use Case

Business Use Case

Imagine a company receives hundreds or thousands of customer reviews daily through emails, social media, and product pages. Manually analyzing this feedback is time-consuming, so the company decides to use an AI-driven solution to:

- Summarize Reviews: Condense each review into a short summary that captures the core message.

- Sentiment Analysis: Classify the sentiment (positive, neutral, negative) of each review to gauge customer satisfaction.

- Generate Insights: Aggregate sentiment scores and summaries to identify trends, allowing the business to make data-driven improvements.

By automating customer feedback analysis, businesses can assess customer satisfaction efficiently and identify areas for improvement without manual intervention.

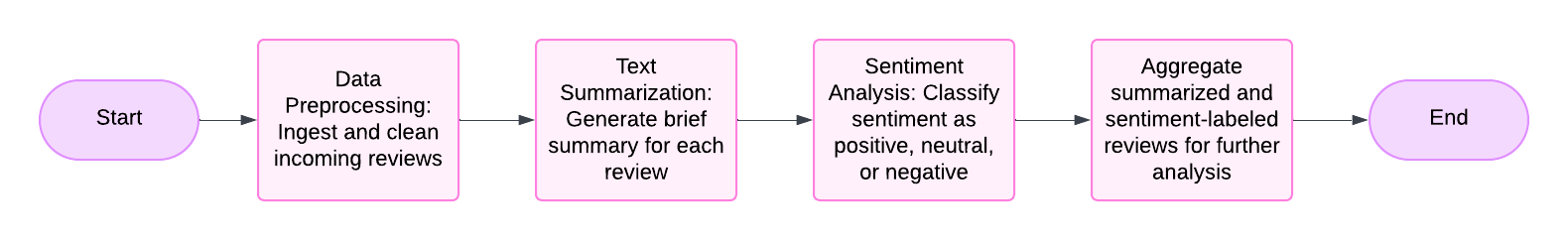

Low-Level Design

The application will be composed of three core modules:

- Data Preprocessing: Prepares incoming reviews for analysis.

- Text Summarization: Generates concise summaries of reviews.

- Sentiment Analysis: Classifies the sentiment of each review as positive, neutral, or negative.
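To make the design concrete, here is a rough sketch of how the three modules could fit together for a single review. The model names (`t5-small` for summarization and `cardiffnlp/twitter-roberta-base-sentiment-latest` for three-way sentiment) are assumptions picked for illustration, and `analyze_review` is a hypothetical helper name.

```python
def analyze_review(text, summarizer, classifier):
    """Run one review through preprocessing, summarization, and sentiment analysis."""
    # Data Preprocessing: collapse whitespace (a real module would do more cleaning).
    cleaned = " ".join(text.split())
    # Text Summarization: the pipeline returns a list with one dict per input.
    summary = summarizer(cleaned, max_length=40, min_length=5, truncation=True)[0]["summary_text"]
    # Sentiment Analysis: keep only the predicted label, lowercased.
    label = classifier(cleaned, truncation=True)[0]["label"].lower()
    return {"text": cleaned, "summary": summary.strip(), "model_sentiment": label}


def load_pipelines():
    """Load both Hugging Face pipelines (assumed model choices, downloaded on first use)."""
    from transformers import pipeline
    summarizer = pipeline("summarization", model="t5-small")
    classifier = pipeline(
        "sentiment-analysis",
        model="cardiffnlp/twitter-roberta-base-sentiment-latest",
    )
    return summarizer, classifier
```

Passing the pipelines in as arguments keeps the function easy to test with stand-ins and lets you swap models without touching the logic.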

Project Structure

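Here’s a sample project structure for a Python-based application: a data/ folder for the dataset, plus four scripts named preprocess.py, summarization.py, sentiment_analysis.py, and main.py.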

Step-by-Step Approach to Implementing Yelp Data

Here’s how we can process and utilize the Yelp dataset in the project:

- Download and Load the Dataset

- Download the Yelp dataset from Kaggle and save it in the data/ folder.

- Load the dataset in preprocess.py.

- Data Preprocessing (preprocess.py)

- Clean the review text and categorize sentiment based on star ratings.

- Summarization (summarization.py)

- Using the cleaned Yelp reviews, summarize each review for concise insights.

- Sentiment Analysis (sentiment_analysis.py)

- Instead of training a new model, map the sentiment labels based on the Yelp star ratings, and use a pre-trained model to verify or enhance sentiment classification.

- Main Application Script (main.py)

- Integrate all steps, load the Yelp reviews, preprocess, summarize, and assign sentiment.
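As a sketch of the loading and preprocessing steps, the code below reads reviews from a JSON-lines file, cleans the text, and maps star ratings to sentiment labels. It assumes each line is a JSON object with `text` and `stars` fields, and the mapping thresholds (below 3 negative, 3 neutral, above 3 positive) are an assumption you may want to adjust.

```python
import json
import re


def clean_text(text):
    """Strip HTML tags and URLs, then collapse runs of whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)
    text = re.sub(r"http\S+", " ", text)
    text = re.sub(r"\s+", " ", text)
    return text.strip()


def stars_to_sentiment(stars):
    """Map a star rating to a label (assumed thresholds)."""
    if stars < 3:
        return "negative"
    if stars > 3:
        return "positive"
    return "neutral"


def load_reviews(path, limit=1000):
    """Read up to `limit` reviews from a JSON-lines file, one JSON object per line."""
    reviews = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            if len(reviews) >= limit:
                break
            record = json.loads(line)
            reviews.append({
                "text": clean_text(record["text"]),
                "stars": record["stars"],
                "sentiment": stars_to_sentiment(record["stars"]),
            })
    return reviews
```

Reading line by line with a `limit` keeps memory use low, which matters because the full review file is large.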

Testing and Validation with Yelp Data

- Testing Setup

- Use a sample subset of the Yelp dataset for initial testing to ensure that each module (preprocessing, summarization, sentiment analysis) is working correctly.

- Expected Test Results

- Preprocessing: Cleaned review text should be free from unwanted characters, and star ratings should correctly map to sentiment labels.

- Summarization: Each review should be summarized effectively, capturing the main points in a concise format.

- Sentiment Verification: Sentiment labels should align with expectations based on the original star ratings.

- Evaluation Metrics

- Accuracy: Measure accuracy by comparing model_sentiment with the mapped sentiment from Yelp star ratings.

- Insights Analysis: Aggregate sentiment results by category (e.g., business type or location) and generate reports on customer satisfaction trends.
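Both metrics are simple to compute once each review is stored as a record. The sketch below assumes each record is a dict with `sentiment` (from star ratings), `model_sentiment` (from the pre-trained model), and a `category` field; the `category` key is a hypothetical name for whatever grouping you use.

```python
from collections import Counter, defaultdict


def accuracy(records):
    """Share of records where the model's label matches the star-based label."""
    if not records:
        return 0.0
    matches = sum(1 for r in records if r["model_sentiment"] == r["sentiment"])
    return matches / len(records)


def sentiment_by_category(records, key="category"):
    """Count sentiment labels per category, e.g. per business type or location."""
    counts = defaultdict(Counter)
    for r in records:
        counts[r[key]][r["sentiment"]] += 1
    return {category: dict(counter) for category, counter in counts.items()}
```

Keep in mind that star ratings are a noisy proxy for sentiment, so a mismatch is not always a model error.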

Expected Outcome and Business Benefits

After implementing and testing the application, here’s what we expect:

- Customer Satisfaction Trends: An overview of positive, neutral, and negative sentiment distribution across different locations or business types.

- Actionable Insights: Summaries and sentiment insights allow the business to pinpoint strengths and weaknesses, enabling them to address specific issues highlighted by customer feedback.

5.2 Educational Content Summarization: Helping Students and Teachers with Summarized Insights

Business Use Case

Educational materials, such as research papers, textbooks, and lengthy lecture notes, often contain complex information that can be overwhelming for students. By leveraging an AI-driven summarization tool, educational institutions, teachers, and students can:

- Summarize Long Documents: Create concise summaries of chapters, articles, or research papers.

- Highlight Key Concepts: Quickly access key points or essential concepts without reading entire texts.

- Aid Learning and Revision: Use summarized content to enhance understanding, retention, and revision efficiency.

This summarization tool can help students manage large volumes of information while allowing teachers to provide focused content, improving overall learning effectiveness.

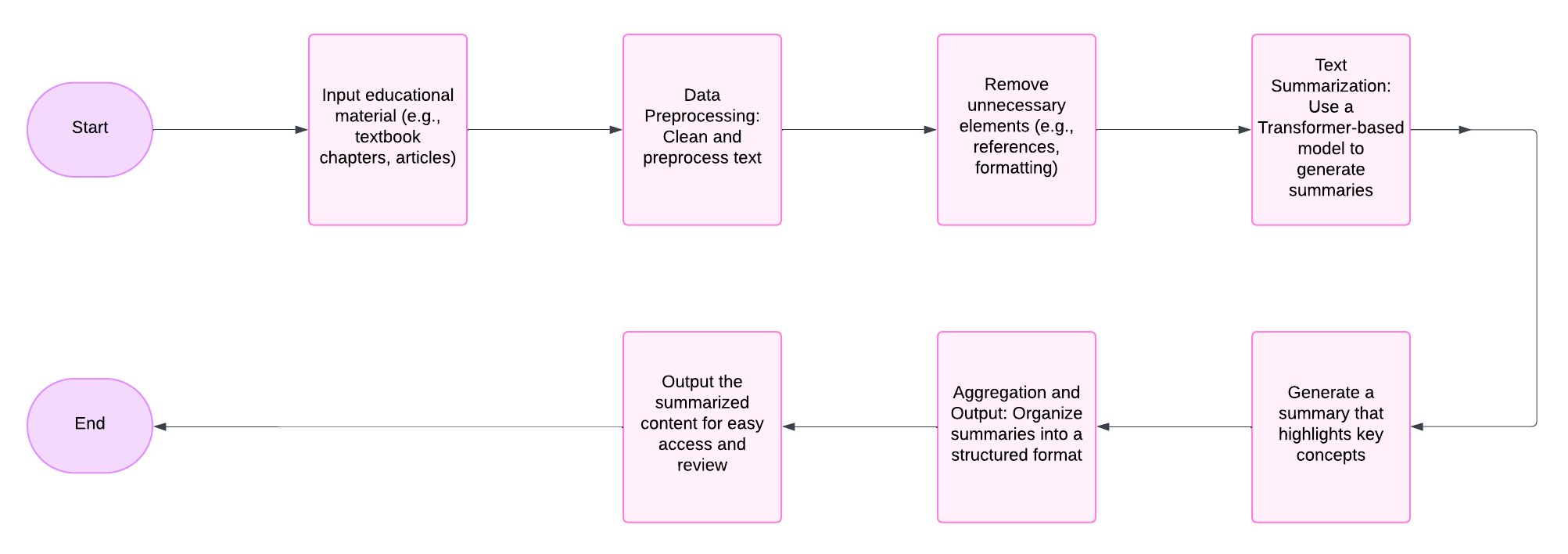

Low-Level Design

The application will have three main components:

- Data Preprocessing: Cleans and preprocesses educational text for optimal summarization.

- Text Summarization: Uses a Transformer-based model to generate summaries of long documents.

- Aggregation and Output: Organizes summaries into a structured output format for easy access and review.

Project Structure

Here’s a sample project structure for a Python-based summarization application: three scripts named preprocess.py, summarization.py, and main.py.

Step-by-Step Approach to Create the Application

Step 1: Set Up the Environment

- Tools and Language: Python 3, PyMuPDF or pdfplumber (for PDF extraction), Hugging Face Transformers, and PyTorch.

- Dependencies: Install necessary libraries.

Step 2: Data Preprocessing (preprocess.py)

- Import necessary libraries for text extraction and cleaning.

- Implement functions to extract and preprocess text from PDFs or raw text files.
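A minimal sketch of this step, assuming PyMuPDF is installed (`pip install pymupdf`). The cleaning rules here (dropping bracketed citation markers, rejoining words hyphenated across line breaks, collapsing whitespace) are illustrative heuristics rather than a complete cleaner.

```python
import re


def extract_pdf_text(path):
    """Extract plain text from every page of a PDF using PyMuPDF."""
    import fitz  # PyMuPDF, imported lazily so clean_document works without it
    with fitz.open(path) as doc:
        return "\n".join(page.get_text() for page in doc)


def clean_document(text):
    """Apply a few simple cleaning heuristics to extracted text."""
    # Drop numeric citation markers such as [3], [1, 2] or [4-6].
    text = re.sub(r"\s*\[\d+(?:\s*[,\-]\s*\d+)*\]", "", text)
    # Rejoin words split by a hyphen at a line break (this can also merge
    # genuine hyphenated compounds that happen to break at a line end).
    text = re.sub(r"-\n(?=\w)", "", text)
    # Collapse all remaining whitespace, including newlines.
    text = re.sub(r"\s+", " ", text)
    return text.strip()
```

For raw text files you can skip `extract_pdf_text` and pass the file contents straight to `clean_document`.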

Step 3: Summarization Module (summarization.py)

- Load a summarization model from Hugging Face (e.g., T5 or BART) to generate summaries.

- Split the text into manageable chunks if the document is long, then apply the summarization model to each chunk.
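The chunk-then-summarize idea can be sketched as follows. Splitting on a fixed word count is a simplification (splitting on sentence or section boundaries usually reads better), and `t5-small` and the 400-word chunk size are assumptions chosen to stay within the model’s input limit.

```python
def chunk_text(text, max_words=400):
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_document(text, summarizer, max_words=400):
    """Summarize each chunk separately and join the partial summaries."""
    parts = []
    for chunk in chunk_text(text, max_words):
        result = summarizer(chunk, max_length=120, min_length=20, truncation=True)
        parts.append(result[0]["summary_text"].strip())
    return " ".join(parts)


def load_summarizer(model_name="t5-small"):
    """Load a Hugging Face summarization pipeline (downloads weights on first use)."""
    from transformers import pipeline
    return pipeline("summarization", model=model_name)
```

A typical call is `summarize_document(cleaned_text, load_summarizer())`. For very long documents, you can run the joined summary through the summarizer once more to shorten it further.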

Step 4: Main Application Script (main.py)

- Integrate all steps into a single workflow.

- Run the script with input and output files:

Testing the Application

Testing Setup

- Test Data: Use sample chapters or educational articles in PDF format to test the summarization process.

- Unit Tests: Test each function in preprocess.py and summarization.py to ensure proper functionality.

- Integration Testing: Run the entire pipeline using the main script to verify that the summarization works end-to-end.

Expected Test Results

- Preprocessing: Extracted text should be clean, with references, special characters, and extra whitespace removed.

- Summarization: The summary should capture the main concepts and ideas of the document, with essential information retained.

- Final Output: The output file should contain a readable, concise summary of the original content.

Validation and Evaluation

- Quality Assessment: Manually compare the generated summary with the source material to verify that it retains the main ideas.

- Summarization Accuracy: Ensure that the model produces coherent and meaningful summaries, especially for dense academic or technical content.

- User Testing: Get feedback from educators or students to ensure the summary is helpful and highlights key information effectively.

Expected Outcome and Educational Benefits

After running the summarization tool, users should gain access to:

- Concise Summaries: Easily readable summaries of long educational documents, ideal for quick review and comprehension.

- Highlighted Key Points: Summaries that focus on essential concepts, aiding in the study process and helping students grasp complex topics faster.

- Time Savings: Reduced time spent on reading lengthy documents, enabling students to focus on comprehension and retention.

Example Use Cases in Education

- Course Material Summarization: Instructors can use the tool to summarize weekly readings, helping students focus on core ideas.

- Research Paper Abstracts: Students working on literature reviews can quickly extract key insights from multiple research papers, enabling efficient review.

- Lecture Note Summaries: Summarize long lecture notes or recordings for students to review quickly, making studying easier.

By leveraging this summarization tool, educational institutions, teachers, and students can navigate dense information more effectively, transforming how complex content is accessed and understood.

5.3 Q&A Systems for Knowledge Bases: Streamlining Internal Information Access

Business Use Case

In large organizations, employees often need quick answers to routine questions related to company policies, internal processes, and documentation. Searching through extensive internal documents or knowledge bases can be time-consuming. A Q&A system powered by an AI-driven tool can:

- Instantly Answer Common Queries: Employees can input questions and receive quick, accurate answers based on internal documents.

- Reduce Search Effort: Instead of manually searching through lengthy documents, employees can rely on a Q&A system for efficient information retrieval.

- Improve Productivity: By reducing the time spent searching for information, employees can focus more on critical tasks.

This solution is especially useful for internal knowledge bases that contain policy documents, process guides, and HR FAQs.

Dataset Selection and Justification

For training a Q&A model capable of understanding and retrieving answers from internal knowledge bases, the SQuAD (Stanford Question Answering Dataset) is highly recommended.

Justification for Selecting the SQuAD Dataset:

- Realistic Q&A Structure: The SQuAD dataset contains pairs of questions and answers derived from Wikipedia articles. This structure mirrors the type of questions employees might ask about company documents, making it suitable for simulating a knowledge base environment.

- High-Quality Annotations: SQuAD has high-quality, human-annotated answers that align with relevant context. This enables the model to learn to identify precise answers within a larger body of text.

- Adaptability: The Q&A model trained on SQuAD can be fine-tuned on organization-specific documents to improve its accuracy in a real-world setting.

- Widely Used for Q&A Models: SQuAD is one of the most popular datasets for Q&A model training, ensuring compatibility with many pre-trained Transformer models and easy integration with tools like Hugging Face.

Dataset Link: SQuAD Dataset on Hugging Face

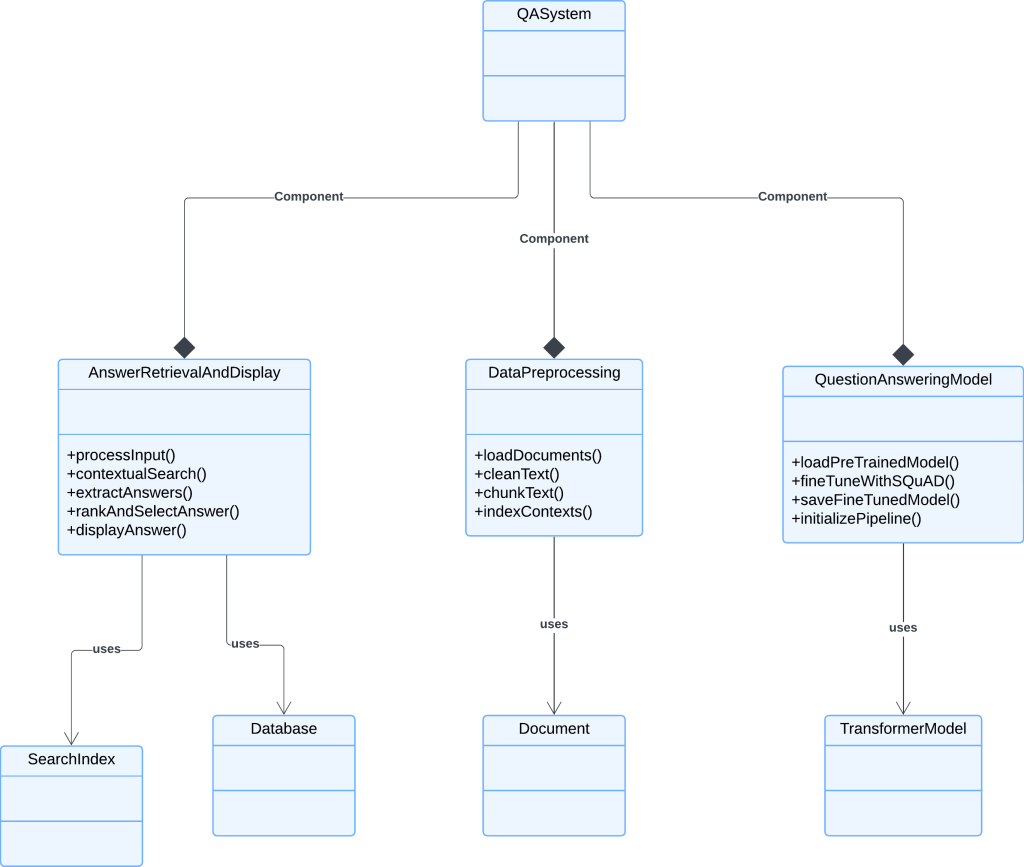

Low-Level Design

The application will consist of three main components:

- Data Preprocessing: Prepares internal documents for effective Q&A by formatting them in a searchable format.

- Question-Answering Model: Uses a pre-trained Transformer-based model, fine-tuned on the SQuAD dataset, to respond to user questions.

- Answer Retrieval and Display: Retrieves the answer from the document context and displays it to the user.

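The workflow: internal documents are preprocessed into a searchable format, the question-answering model reads the user’s question against the document context, and the retrieved answer is displayed to the user.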

Project Structure

Here’s a sample project structure for the Q&A system: three scripts named preprocess.py, qa_model.py, and main.py.

Step-by-Step Approach to Create the Application

Step 1: Set Up the Environment

- Tools and Language: Python 3, Jupyter Notebook (for testing), Hugging Face Transformers, PyTorch.

- Dependencies: Install necessary libraries.

Step 2: Data Preprocessing (preprocess.py)

- Import libraries and load the internal documents:

- Tokenize and clean the documents for better processing by the model:
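One way to sketch this step is to read plain-text files from a folder and split them into short passages the model can search. The `.txt` file layout and the 150-word passage size are assumptions for illustration; tokenization itself is handled later by the model’s own tokenizer.

```python
import re
from pathlib import Path


def load_documents(folder):
    """Read every .txt file in `folder` into a {filename: text} dict."""
    docs = {}
    for path in sorted(Path(folder).glob("*.txt")):
        docs[path.name] = path.read_text(encoding="utf-8")
    return docs


def split_into_passages(text, max_words=150):
    """Split on blank lines, normalize whitespace, and cap passage length."""
    paragraphs = [re.sub(r"\s+", " ", p).strip() for p in re.split(r"\n\s*\n", text)]
    passages = []
    for paragraph in paragraphs:
        words = paragraph.split()
        # Long paragraphs are cut into several passages; empty ones are skipped.
        for i in range(0, len(words), max_words):
            passages.append(" ".join(words[i:i + max_words]))
    return passages
```

Short passages matter because extractive Q&A models read a limited amount of context at a time.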

Step 3: Model Fine-Tuning (qa_model.py)

- Fine-tune a pre-trained Q&A model on the SQuAD dataset and save it for use in the application.

Step 4: Question-Answering Functionality (qa_model.py)

- Load the fine-tuned model and use it to answer questions.
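Here is a hedged sketch of the answering step. Instead of your own fine-tuned checkpoint, it assumes the publicly available `distilbert-base-cased-distilled-squad` model, which was already trained on SQuAD; swap in the path of your saved model if you fine-tuned one. The function scores every passage and keeps the most confident answer.

```python
def load_qa_model(model_name="distilbert-base-cased-distilled-squad"):
    """Load an extractive question-answering pipeline (assumed checkpoint)."""
    from transformers import pipeline
    return pipeline("question-answering", model=model_name)


def answer_question(question, passages, qa_model):
    """Run the question against each passage and return the highest-scoring answer."""
    best = None
    for passage in passages:
        # The pipeline returns a dict with "answer", "score", "start" and "end".
        result = qa_model(question=question, context=passage)
        if best is None or result["score"] > best["score"]:
            best = {
                "answer": result["answer"],
                "score": result["score"],
                "context": passage,
            }
    return best  # None when there are no passages
```

Scanning every passage is fine for a small knowledge base. For larger ones, you would typically narrow the candidates with a search step before calling the model.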

Step 5: Main Application Script (main.py)

- Integrate all steps into a single workflow for interactive Q&A.

- Run the script with a question as input:

Testing the Application

Testing Setup

- Test Data: Use sample company policy documents or process guides as input data.

- Unit Tests: Test each function in preprocess.py and qa_model.py to ensure correct functionality.

- Integration Testing: Run the entire pipeline to confirm that questions are answered accurately based on document context.

Expected Test Results

- Preprocessing: The text should be cleaned and formatted correctly for model input.

- Question Answering: The system should return concise and accurate answers to input questions.

- Output Validation: The final output should display clear, relevant answers based on the input context.

Acknowledgment

Our Q&A System for Knowledge Bases project was inspired by the need for efficient information retrieval tools in corporate environments. By leveraging the SQuAD dataset, we can train a robust question-answering model suitable for internal knowledge retrieval, ensuring that employees receive accurate answers without extensive searching. The SQuAD dataset provides an excellent foundation for training, given its high-quality Q&A pairs and realistic format, making it adaptable for an organization’s unique knowledge base requirements.

5.4 Content Creation for Blogs or Marketing: Accelerating Content Creation with LLMs

Business Use Case

In content marketing, creating consistent, high-quality blog posts and marketing materials is crucial for engaging audiences and driving traffic. However, generating content ideas, drafts, or full articles from scratch can be time-consuming. An AI-driven content creation tool allows content marketers and bloggers to:

- Generate Ideas and Outlines: Use prompts to brainstorm ideas or create structured outlines based on target audience interests.

- Draft Content Quickly: Generate drafts that serve as a starting point for blog posts, social media content, or email marketing.

- Enhance Creativity and Save Time: AI-generated content helps reduce writer’s block and streamline the drafting process, enabling marketers to focus on refining and tailoring content.

By automating content ideation and drafting, this solution helps marketers and bloggers save time while maintaining content consistency and relevance.

Dataset Selection and Justification

For training a content generation model, the OpenWebText dataset is highly recommended. It is an open-source recreation of OpenAI’s WebText corpus, built from web pages linked in Reddit posts, and it captures varied topics and writing styles relevant to content creation.

Justification for Selecting the OpenWebText Dataset:

- Realistic Content Style: The OpenWebText dataset closely resembles the informal yet informative writing styles commonly found in blogs, forums, and social media, making it an ideal choice for training models to generate engaging marketing content.

- Topic Diversity: It covers a broad range of topics, including technology, lifestyle, health, and business, providing the model with exposure to the diverse subject matter that marketers and bloggers typically cover.

- High-Quality Source Material: OpenWebText keeps only pages linked from Reddit posts that received a minimum number of upvotes. This acts as a rough quality filter, so the training data is reasonably representative of the content needed for professional blogs and marketing material.

- Publicly Available: As a publicly accessible dataset, OpenWebText is widely used in NLP research, allowing for easy access, reusability, and compatibility with pre-trained language models.

Dataset Link: OpenWebText Dataset on Hugging Face

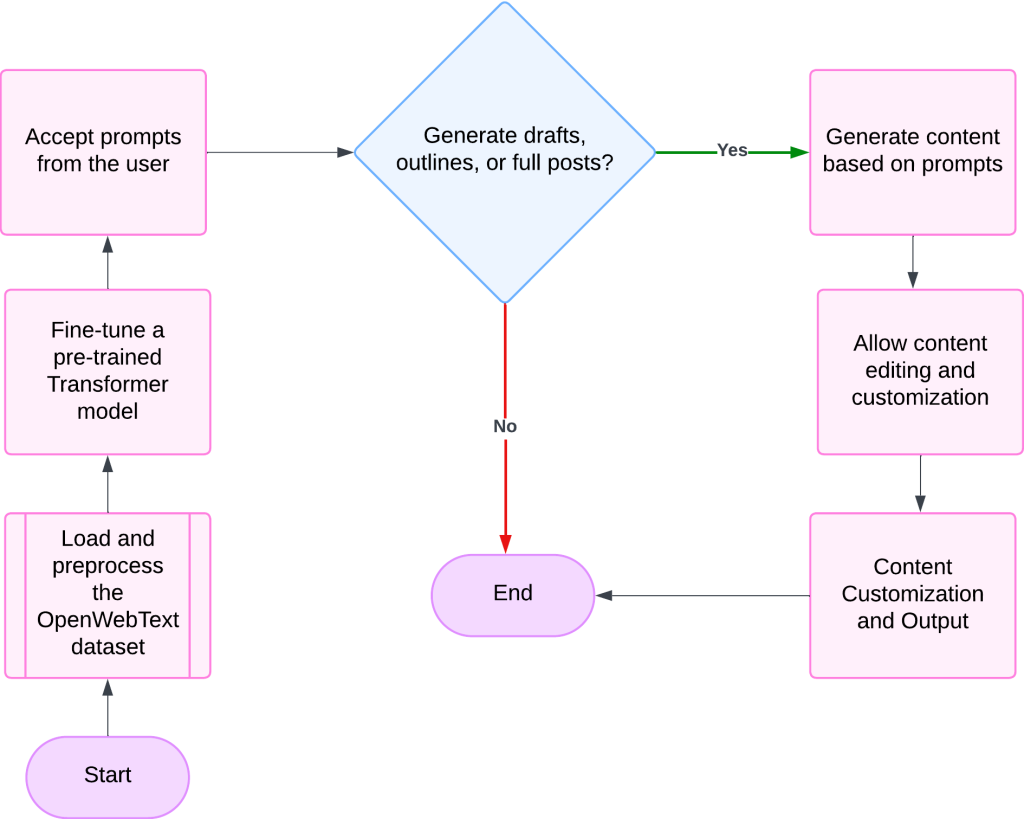

Low-Level Design

The application will consist of three main components:

- Data Preprocessing: Prepares the dataset for training by cleaning and structuring content samples.

- Content Generation Model: Uses a Transformer-based model fine-tuned on OpenWebText to generate content based on given prompts.

- Content Customization and Output: Allows users to refine and edit generated content for specific marketing needs.

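The workflow: the dataset is cleaned and structured, the fine-tuned model generates content from a user prompt, and the user refines the output for specific marketing needs.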

Project Structure

Here’s a sample project structure for the content creation tool: three scripts named preprocess.py, content_model.py, and main.py.

Step-by-Step Approach to Create the Application

Step 1: Set Up the Environment

- Tools and Language: Python 3, Jupyter Notebook (for testing), Hugging Face Transformers, PyTorch.

- Dependencies: Install necessary libraries.

Step 2: Data Preprocessing (preprocess.py)

- Import necessary libraries and load the OpenWebText dataset:

- Implement functions to clean and preprocess content samples:
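A possible sketch of this step, assuming the Hugging Face `datasets` library and the `Skylion007/openwebtext` dataset ID with a `text` column (check the dataset page, since the ID and loading options may differ for your library version). Streaming avoids downloading the full corpus, and the 50-word minimum is an arbitrary threshold.

```python
import re
from itertools import islice


def clean_sample(text):
    """Collapse whitespace in one content sample."""
    return re.sub(r"\s+", " ", text).strip()


def filter_samples(samples, min_words=50):
    """Yield cleaned samples, skipping very short texts and exact duplicates."""
    seen = set()
    for text in samples:
        cleaned = clean_sample(text)
        if len(cleaned.split()) < min_words or cleaned in seen:
            continue
        seen.add(cleaned)
        yield cleaned


def stream_openwebtext(limit=1000):
    """Stream the first `limit` documents from the dataset (assumed dataset ID)."""
    from datasets import load_dataset  # pip install datasets
    dataset = load_dataset("Skylion007/openwebtext", split="train", streaming=True)
    for row in islice(dataset, limit):
        yield row["text"]
```

You can chain the two generators with `filter_samples(stream_openwebtext(limit=1000))` to get a small, clean sample for experiments.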

Step 3: Content Generation Model (content_model.py)

- Load a pre-trained model (e.g., GPT-2) and fine-tune it on the OpenWebText dataset.

Step 4: Content Generation Functionality (content_model.py)

- Load the fine-tuned model and use it to generate content based on user prompts.
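The generation step might look like the sketch below. It assumes the stock `gpt2` checkpoint (replace the name with the path to your fine-tuned model), and the prompt template and sampling settings (`top_p=0.9`, `temperature=0.8`) are illustrative starting values, not tuned recommendations.

```python
def build_prompt(topic, audience):
    """Turn a topic and target audience into a prompt that invites an outline."""
    return f'Blog post outline on "{topic}" for {audience}:\n1.'


def load_generator(model_name="gpt2"):
    """Load a text-generation pipeline (downloads weights on first use)."""
    from transformers import pipeline
    return pipeline("text-generation", model=model_name)


def generate_drafts(topic, audience, generator, n=3, max_new_tokens=80):
    """Sample `n` alternative drafts so a writer can pick and refine the best one."""
    prompt = build_prompt(topic, audience)
    outputs = generator(
        prompt,
        max_new_tokens=max_new_tokens,
        do_sample=True,            # sampling gives varied drafts
        top_p=0.9,
        temperature=0.8,
        num_return_sequences=n,
    )
    # Each output contains the prompt followed by the generated continuation.
    return [o["generated_text"].strip() for o in outputs]
```

For example, `generate_drafts("Benefits of Remote Work", "small business owners", load_generator())` returns three candidate outlines. Expect rough output from a model this small and plan to edit it.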

Step 5: Main Application Script (main.py)

- Integrate all steps into a single workflow to handle prompt-based content generation.

- Run the script with a prompt input:

Testing the Application

Testing Setup

- Test Data: Use various prompts that reflect common marketing or blogging topics (e.g., “Benefits of Remote Work,” “Top Digital Marketing Trends”).

- Unit Tests: Test each function in preprocess.py and content_model.py to ensure correct functionality.

- Integration Testing: Run the entire pipeline to confirm that prompts result in coherent, relevant content.

Expected Test Results

- Preprocessing: Content samples should be clean and ready for training.

- Content Generation: The system should generate meaningful, structured content based on given prompts.

- Output Validation: The generated text should be checked for readability, relevance, and coherence.

Acknowledgment

Our Content Creation for Blogs or Marketing project, designed to generate ideas, drafts, and full posts, was inspired by the need to streamline content production in digital marketing. We selected the OpenWebText dataset for fine-tuning the content generation model due to its high-quality, diverse web content and relevance to typical blog and marketing topics. This dataset is publicly available and aligns well with the writing styles commonly used in content marketing.

Explore more about the OpenWebText dataset here: OpenWebText Dataset on Hugging Face.

6. Optimizing for Performance

Running LLMs locally can be demanding, but here are tips to make it manageable:

- Quantized Models: Use quantized versions (for example, 8-bit or 4-bit weights) to reduce memory usage, usually with only a small loss in output quality.

- Half-Precision (float16): Running models in half-precision can cut memory requirements if your hardware supports it.

These techniques allow you to run LLMs on a Mac without taxing your system excessively.
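To see why precision matters, the sketch below estimates the memory needed just for model weights and shows one way to load a model in half-precision on Apple Silicon. It assumes PyTorch’s `mps` backend is available on your Mac and falls back to float32 on CPU; the estimate ignores activations and other runtime overhead.

```python
# Approximate bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}


def estimate_weight_memory_gb(n_params, dtype="float16"):
    """Rough memory for weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def load_half_precision(model_name="EleutherAI/gpt-neo-125m"):
    """Load a causal LM in float16 on Apple's GPU backend when available."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "mps" if torch.backends.mps.is_available() else "cpu"
    # float16 is used only on the GPU backend; CPU stays in float32.
    dtype = torch.float16 if device == "mps" else torch.float32
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=dtype).to(device)
    return tokenizer, model, device
```

For a 6-billion-parameter model such as GPT-J, `estimate_weight_memory_gb(6_000_000_000, "float32")` gives 24 GB, while float16 halves that to 12 GB, which is the difference between not fitting and fitting on many Macs.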

7. Learning and Mastering LLMs

To build a solid foundation in LLMs, focus on combining theoretical understanding with hands-on practice:

- Foundational NLP Knowledge: Learn NLP basics such as tokenization, embeddings, and traditional architectures.

- Experiment with Open-Source Models: Hugging Face offers pre-trained models that make it easy to experiment with LLMs on your specific tasks.

- Understand Transformer Architecture: Study concepts like attention mechanisms, positional embeddings, and how these enable language understanding.

- Build Small Projects: Start with simpler projects like Q&A bots, summarizers, or text generators before progressing to more complex applications.

Recommended Resources

- Textbooks: “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville, and “Natural Language Processing with Transformers” by Lewis Tunstall, Leandro von Werra, and Thomas Wolf.

- Courses: Look for NLP and Transformer-focused courses on Coursera, edX, and DeepLearning.AI.

- Hugging Face Model Hub: Access to open-source LLMs for experimentation.

Conclusion

LLMs are redefining the future of NLP, enabling more natural and powerful language interactions than ever before. With open-source models and platforms like Hugging Face, you can explore the power of LLMs right from your Mac, creating tools that convert data into actionable insights. By starting with NLP fundamentals and diving into LLM experimentation, you’ll be equipped to harness the incredible potential of large language models. The era of LLMs is here, and with it, endless possibilities for transforming language-driven tasks.