Apache Pinot for Production: Deployment and Integration with Apache Iceberg

Originally published on December 14, 2023

In this installment of the Apache Pinot series, we’ll guide you through deploying Pinot in a production environment, integrating with Apache Iceberg for efficient data management and archival, and ensuring that the system can handle real-world, large-scale datasets. With Iceberg as the long-term storage layer and Pinot handling real-time analytics, you’ll have a powerful combination for managing both recent and historical data.

For those interested in brushing up on Presto concepts, check out my detailed Presto Basics blog post. If you’re new to Apache Iceberg, you can find an introductory guide in my Apache Iceberg Basics blog post.

Sample Project Enhancements for Production-Readiness

To make our social media analytics project production-ready, we’ll add Iceberg as an archival solution for storing large datasets efficiently. This setup lets us offload historical data from Pinot to Iceberg, where it remains queryable when needed, while Pinot stays lean and responsive for real-time analytics.

Updated Project Structure:

- data: Simulated large-scale datasets for testing production performance.

- config: Production-ready schema and table configurations, with an Iceberg data sink.

- scripts: Automated scripts for setting up the Iceberg table and managing data movement.

- monitoring: Metrics and monitoring configurations to track data flow between Pinot and Iceberg.

Deploying Apache Pinot with Iceberg for Data Archival

This setup stores Iceberg tables in a data lake (e.g., S3, HDFS, or ADLS) and configures Pinot to hold recent data, with older data regularly offloaded to Iceberg for cost-efficient storage.

1. Setting Up ZooKeeper and Kafka Clusters

Deploy ZooKeeper and Kafka on Kubernetes for high availability, as explained in the previous blog. ZooKeeper coordinates the Pinot nodes, while Kafka handles real-time data ingestion.

2. Deploying Pinot and Iceberg on Kubernetes

We’ll deploy Pinot the same way as before; adding Iceberg introduces a new workflow for data archival and retrieval.

- Deploy Pinot: Follow the same configurations as in the previous blog for deploying Pinot components (Controller, Broker, and Server) on Kubernetes.

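- Deploy Iceberg: Store Iceberg tables on Amazon S3, HDFS, or a local file system (for testing), and configure an Iceberg catalog (for example, Hive Metastore, AWS Glue, or a REST catalog) that both Spark and Trino can use to find the tables.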
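Pinot only stays lean if old segments actually leave it, so the table's retention settings matter in this design. The sketch below builds the retention-related part of a Pinot `segmentsConfig` as a Python dict and prints it as JSON. The keys `timeColumnName`, `retentionTimeUnit`, and `retentionTimeValue` come from Pinot's table config; the table name `social_events`, the column `event_time`, and the 7-day safety margin are assumptions for this project, and the fragment is deliberately incomplete.

```python
import json

# Assumed project values; adjust to your own table and SLA.
ARCHIVE_AFTER_DAYS = 90  # data older than this is copied to Iceberg
SAFETY_MARGIN_DAYS = 7   # extra days Pinot keeps data so archival can finish first


def build_segments_config(time_column: str,
                          archive_after_days: int = ARCHIVE_AFTER_DAYS,
                          margin_days: int = SAFETY_MARGIN_DAYS) -> dict:
    """Return the retention-related keys of a Pinot segmentsConfig.

    Pinot's retention manager deletes segments older than the retention
    period, so retention is set to the archival window plus a margin.
    """
    if margin_days <= 0:
        raise ValueError("margin_days must be positive so data is archived before deletion")
    return {
        "timeColumnName": time_column,
        "retentionTimeUnit": "DAYS",
        # Pinot's sample configs write this value as a string.
        "retentionTimeValue": str(archive_after_days + margin_days),
    }


# Hypothetical partial table config; a real one also needs index, tenant,
# and stream ingestion settings.
table_config_fragment = {
    "tableName": "social_events",
    "tableType": "REALTIME",
    "segmentsConfig": build_segments_config("event_time"),
}

print(json.dumps(table_config_fragment, indent=2))
```

The margin is the important design choice: if Pinot's retention equaled the archival window exactly, a delayed or failed archival run could let Pinot delete segments before they reach Iceberg.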

3. Configuring Iceberg as the Archival Layer

To configure Iceberg as the archival layer, we’ll use a scheduled batch job that regularly moves historical data from Pinot to Iceberg (e.g., data older than 90 days).
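The selection step of such a job can be sketched in plain Python. This assumes the job runs frequently (say, daily) and archives anything older than 90 days; `SegmentInfo`, its fields, and the segment names are hypothetical stand-ins for whatever segment metadata your job reads from Pinot. Only segments whose entire time range falls before the cutoff are selected, so a segment that straddles the boundary waits for a later run.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

DAY_MS = 24 * 60 * 60 * 1000


@dataclass(frozen=True)
class SegmentInfo:
    """Hypothetical minimal view of a Pinot segment's time-range metadata."""
    name: str
    start_ms: int  # earliest event time in the segment (epoch millis)
    end_ms: int    # latest event time in the segment (epoch millis)


def archival_cutoff_ms(now: datetime, archive_after_days: int = 90) -> int:
    """Epoch millis before which data counts as historical."""
    return int((now - timedelta(days=archive_after_days)).timestamp() * 1000)


def segments_to_archive(segments, cutoff_ms: int) -> list:
    """Names of segments whose whole time range is older than the cutoff.

    Segments that straddle the cutoff stay in Pinot until a later run,
    so nothing still inside the real-time window is archived early.
    """
    return sorted(s.name for s in segments if s.end_ms < cutoff_ms)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
cutoff = archival_cutoff_ms(now)  # 2024-03-03T00:00:00Z
segments = [
    SegmentInfo("seg_old", cutoff - 10 * DAY_MS, cutoff - 5 * DAY_MS),
    SegmentInfo("seg_straddle", cutoff - DAY_MS, cutoff + DAY_MS),
    SegmentInfo("seg_recent", cutoff + DAY_MS, cutoff + 2 * DAY_MS),
]
print(segments_to_archive(segments, cutoff))  # ['seg_old']
```

The selected segment names would then drive the Spark read from Pinot and the write into Iceberg.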

- Configure an Archival Job:

- Write a Spark job to query historical segments from Pinot and move them to Iceberg.

- Use the Iceberg Spark connector to write Pinot data into an Iceberg table.

- Iceberg Table Schema:

- Ensure that the Iceberg schema matches the Pinot schema (same column names and compatible types), so data can be copied without ad hoc transformations and queried consistently in both systems.
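One way to keep the two schemas aligned is to derive the Iceberg column types from the Pinot schema JSON and check an existing table against them. The sketch below reads Pinot's `dimensionFieldSpecs`, `metricFieldSpecs`, and `dateTimeFieldSpecs` sections; the type mapping itself (for example, `JSON` to `string` and `TIMESTAMP` to `timestamptz`) is an assumption you should review for your data, and the example schema is hypothetical.

```python
# Assumed mapping from Pinot data types to Iceberg primitive types.
PINOT_TO_ICEBERG = {
    "INT": "int",
    "LONG": "long",
    "FLOAT": "float",
    "DOUBLE": "double",
    "BOOLEAN": "boolean",
    "STRING": "string",
    "JSON": "string",            # Iceberg has no JSON type; keep the raw JSON text
    "BYTES": "binary",
    "TIMESTAMP": "timestamptz",  # Pinot TIMESTAMP holds epoch millis, i.e. an instant
}
# BIG_DECIMAL is intentionally unmapped: Iceberg decimals need an explicit precision and scale.

FIELD_SECTIONS = ("dimensionFieldSpecs", "metricFieldSpecs", "dateTimeFieldSpecs")


def iceberg_columns(pinot_schema: dict) -> list:
    """Translate a Pinot schema (as parsed JSON) into (name, Iceberg type) pairs."""
    columns = []
    for section in FIELD_SECTIONS:
        for spec in pinot_schema.get(section, []):
            pinot_type = spec["dataType"]
            if pinot_type not in PINOT_TO_ICEBERG:
                raise ValueError(f"no mapping for {pinot_type} (column {spec['name']})")
            iceberg_type = PINOT_TO_ICEBERG[pinot_type]
            # Multi-value Pinot columns become Iceberg lists.
            if not spec.get("singleValueField", True):
                iceberg_type = f"list<{iceberg_type}>"
            columns.append((spec["name"], iceberg_type))
    return columns


def schema_mismatches(expected: list, actual: dict) -> list:
    """Compare mapped columns with an Iceberg table's {column: type} description."""
    problems = []
    for name, iceberg_type in expected:
        if name not in actual:
            problems.append(f"missing column {name}")
        elif actual[name] != iceberg_type:
            problems.append(f"{name}: expected {iceberg_type}, found {actual[name]}")
    return problems


# Hypothetical schema for the social media project (format/granularity omitted).
pinot_schema = {
    "schemaName": "social_events",
    "dimensionFieldSpecs": [
        {"name": "user_id", "dataType": "STRING"},
        {"name": "hashtags", "dataType": "STRING", "singleValueField": False},
    ],
    "metricFieldSpecs": [{"name": "likes", "dataType": "LONG"}],
    "dateTimeFieldSpecs": [{"name": "event_time", "dataType": "TIMESTAMP"}],
}

columns = iceberg_columns(pinot_schema)
print(columns)
print(schema_mismatches(columns, {"user_id": "string", "likes": "int"}))
```

Failing loudly on unmapped types such as `BIG_DECIMAL` is a deliberate choice: it forces a decision on precision and scale instead of silently guessing.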

Using Apache Iceberg for Data Retention and Cost-Effective Storage

With Iceberg in the data lake, you can manage retention of historical data directly in Iceberg. Iceberg tables also support schema evolution and partitioning, which makes them well suited to large volumes of historical data.
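As a hedged illustration, the statements below show how a day-partitioned Iceberg table and a lake-side retention rule might look in Spark SQL; the Python only builds the strings. `PARTITIONED BY (days(event_time))` uses Iceberg's hidden partitioning, and `history.expire.max-snapshot-age-ms` is the Iceberg table property that sets the default age for snapshot expiration. The table name `lake.analytics.social_events`, the columns, the 7-day snapshot age, and the retention date are all assumptions.

```python
from datetime import date

DAY_MS = 24 * 60 * 60 * 1000


def iceberg_create_table_sql(table: str, columns: list, ts_column: str,
                             snapshot_max_age_days: int = 7) -> str:
    """Build a Spark SQL CREATE TABLE statement for a day-partitioned Iceberg table."""
    if ts_column not in {name for name, _ in columns}:
        raise ValueError(f"partition column {ts_column!r} is not in the column list")
    column_defs = ",\n  ".join(f"{name} {spark_type}" for name, spark_type in columns)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n  {column_defs}\n)\n"
        "USING iceberg\n"
        # Hidden partitioning: Iceberg derives the day from the timestamp itself.
        f"PARTITIONED BY (days({ts_column}))\n"
        # Default age used when old snapshots are expired during maintenance.
        f"TBLPROPERTIES ('history.expire.max-snapshot-age-ms' = '{snapshot_max_age_days * DAY_MS}')"
    )


def retention_delete_sql(table: str, ts_column: str, keep_from: date) -> str:
    """Delete rows older than keep_from; a day-aligned cutoff matches whole partitions."""
    return f"DELETE FROM {table} WHERE {ts_column} < TIMESTAMP '{keep_from.isoformat()} 00:00:00'"


# Spark SQL column types (hypothetical project columns).
columns = [
    ("user_id", "STRING"),
    ("hashtags", "ARRAY<STRING>"),
    ("likes", "BIGINT"),
    ("event_time", "TIMESTAMP"),
]
print(iceberg_create_table_sql("lake.analytics.social_events", columns, "event_time"))
print(retention_delete_sql("lake.analytics.social_events", "event_time", date(2022, 1, 1)))
```

Keeping the retention cutoff aligned with the day partitions means Iceberg can typically drop whole data files for the delete instead of rewriting them.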

Defining a Retention Policy in Iceberg

To manage historical data in Iceberg:

- Time-Based Partitioning:

- Partition data by `date` in Iceberg, making it easy to manage and query data based on time.

- Automated Data Archival:

- Schedule a batch Spark job to archive Pinot segments older than 90 days into Iceberg.

- Optimize Iceberg Storage:

- Periodically compact small data files and perform metadata maintenance (expiring old snapshots and rewriting manifests) to improve query performance and storage efficiency over time.
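In practice, compaction and metadata maintenance are usually run through Iceberg's Spark procedures, such as `CALL <catalog>.system.rewrite_data_files(...)`, `expire_snapshots`, and `rewrite_manifests`. To show what "compaction" means, here is a toy first-fit-decreasing bin-pack planner that groups small files into rewrite groups near a target size. It is an illustration of the idea only, not Iceberg's actual planning logic; the 128 MB target and the file sizes are assumptions.

```python
def plan_bin_pack(file_sizes, target_size, min_files=2):
    """Group files smaller than target_size into rewrite groups (first-fit decreasing).

    Files already at or above the target are left alone, and a group with
    fewer than min_files files is dropped because rewriting it gains nothing.
    """
    small = sorted((s for s in file_sizes if s < target_size), reverse=True)
    groups = []  # each entry: [total_size, [file sizes]]
    for size in small:
        for group in groups:
            if group[0] + size <= target_size:
                group[0] += size
                group[1].append(size)
                break
        else:
            groups.append([size, [size]])
    return [files for _, files in groups if len(files) >= min_files]


# File sizes in MB with an assumed 128 MB target file size.
print(plan_bin_pack([10, 20, 30, 40, 200, 500], 128))  # [[40, 30, 20, 10]]
print(plan_bin_pack([100, 90, 60, 5], 128))            # [[100, 5]]
```

Fewer, larger files mean fewer objects to open and fewer manifest entries to scan, which is where the query-planning gains come from.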

Querying Iceberg and Pinot Together

In this setup, Pinot handles real-time queries, while Iceberg serves as the historical data store. You can use Trino (formerly PrestoSQL), which provides connectors for both Pinot and Iceberg, to run federated queries that span both systems:

Example: Querying Both Pinot and Iceberg

- Real-Time Analytics in Pinot:

- Query recent data in Pinot, leveraging its low-latency capabilities for immediate insights.

- Historical Analytics in Iceberg:

- Query archived data from Iceberg directly for long-term trends.

- Federated Query with Trino:

- Combine results from Pinot and Iceberg in a single query with Trino to get a unified view across real-time and historical data.

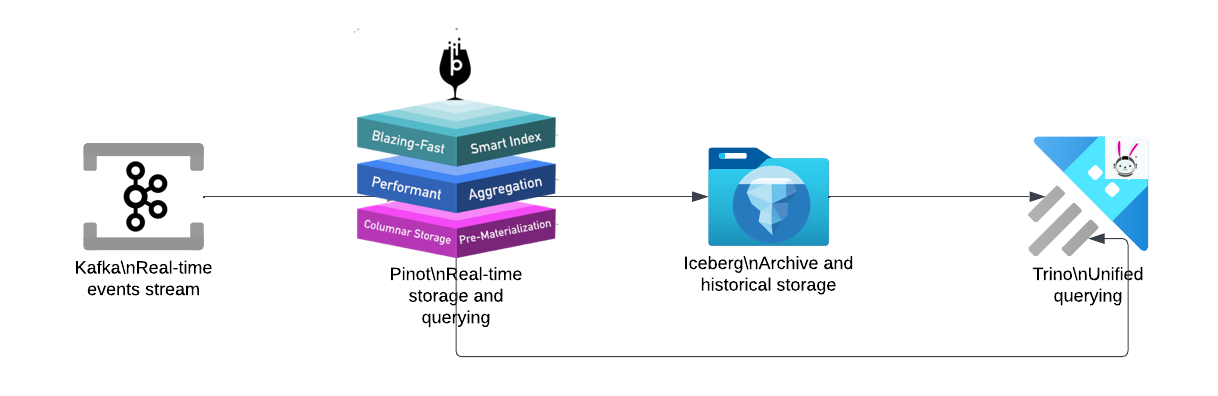
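A federated query has to avoid counting the same event twice. If Pinot keeps data a little longer than the archival window, recent-but-archived events exist in both stores, so a naive `UNION ALL` would double count them. The sketch below builds a Trino query that splits time at a single cutoff and reads each side from exactly one store. The catalog/table names `pinot.default.social_events` and `iceberg.analytics.social_events`, the `hashtag` column, and the assumption that `event_time` is exposed as a UTC `TIMESTAMP` in both catalogs are all hypothetical.

```python
from datetime import datetime, timezone

PINOT_TABLE = "pinot.default.social_events"        # hypothetical catalog.schema.table
ICEBERG_TABLE = "iceberg.analytics.social_events"  # hypothetical catalog.schema.table


def federated_hashtag_query(cutoff: datetime) -> str:
    """Build a Trino query that reads each time range from exactly one store."""
    ts = cutoff.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    return f"""
SELECT hashtag, SUM(mentions) AS mentions
FROM (
    -- Recent data: served by Pinot
    SELECT hashtag, COUNT(*) AS mentions
    FROM {PINOT_TABLE}
    WHERE event_time >= TIMESTAMP '{ts}'
    GROUP BY hashtag
    UNION ALL
    -- Historical data: served by Iceberg
    SELECT hashtag, COUNT(*) AS mentions
    FROM {ICEBERG_TABLE}
    WHERE event_time < TIMESTAMP '{ts}'
    GROUP BY hashtag
) AS combined
GROUP BY hashtag
ORDER BY mentions DESC
LIMIT 10
""".strip()


print(federated_hashtag_query(datetime(2024, 3, 3, tzinfo=timezone.utc)))
```

Using `>=` on one side and `<` on the other makes the two ranges disjoint, so the outer `SUM` combines partial counts without overlap.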

Enhanced Data Flow and Architecture with Iceberg Integration

In a production environment with Iceberg, the data flow supports seamless transitions from real-time analytics in Pinot to archival storage in Iceberg.

- Data Ingestion: Real-time events are streamed into Kafka and ingested by Pinot.

- Real-Time Querying: Pinot provides low-latency responses for recent data (e.g., last 90 days).

- Archival with Iceberg: Historical data is regularly moved from Pinot to Iceberg for cost-effective storage and long-term querying.

- Unified Querying with Trino: Using Trino, you can query across both real-time data in Pinot and historical data in Iceberg.
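The four stages above can be modeled with a toy in-memory class to show the invariant that keeps unified querying simple: after archival, each event lives in exactly one tier. `TieredStore` is hypothetical and uses plain lists in place of Pinot and Iceberg; integer timestamps stand in for days.

```python
from dataclasses import dataclass, field


@dataclass
class TieredStore:
    """Toy in-memory model of the hot (Pinot) and cold (Iceberg) tiers.

    Event timestamps are plain integers (think "day numbers") to keep it small.
    """
    hot: list = field(default_factory=list)   # stands in for Pinot
    cold: list = field(default_factory=list)  # stands in for Iceberg

    def ingest(self, event_ts: int) -> None:
        # Data ingestion: new events always land in the real-time tier.
        self.hot.append(event_ts)

    def archive(self, cutoff: int) -> int:
        # Archival: copy events older than the cutoff to the cold tier,
        # then remove them from the hot tier. Returns how many moved.
        old = [ts for ts in self.hot if ts < cutoff]
        self.cold.extend(old)
        self.hot = [ts for ts in self.hot if ts >= cutoff]
        return len(old)

    def count(self, start: int, end: int) -> int:
        # Unified querying over [start, end): every event lives in exactly
        # one tier, so per-tier counts can simply be added.
        hot = sum(1 for ts in self.hot if start <= ts < end)
        cold = sum(1 for ts in self.cold if start <= ts < end)
        return hot + cold


store = TieredStore()
for day in range(120):
    store.ingest(day)
moved = store.archive(cutoff=120 - 90)  # keep the most recent 90 "days" hot
print(moved, len(store.hot), store.count(0, 120))  # 30 90 120
```

In the real system the copy and the removal are separate steps (the archival job writes to Iceberg, and Pinot's retention later drops the segments), which is why the federated query splits time at a single cutoff rather than relying on this invariant holding at every instant.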

Conclusion

In this post, we covered how to deploy Apache Pinot in production with Apache Iceberg for managing historical data. This setup allows you to maintain efficient, cost-effective data storage while still benefiting from Pinot’s real-time capabilities.

In the next post, we’ll explore advanced data processing techniques with Pinot, including custom aggregations, transformations, and more complex data flows.