Apache Druid Basics

What is Apache Druid?

Apache Druid is a high-performance, real-time analytics database designed for fast and interactive queries on large datasets. It is optimized for applications that require quick, ad-hoc queries on event-driven data, such as real-time reporting, monitoring, and dashboarding.

Key Features of Apache Druid

- Real-time Data Ingestion: Druid continuously ingests data from streaming sources such as Kafka and Kinesis (and in batches from systems such as Hadoop), and makes new data available for analytics as it arrives.

- High Query Performance: Druid is designed to deliver sub-second query performance by combining a columnar storage format with distributed, massively parallel processing, making it ideal for high-performance, OLAP-style queries.

- Scalability: Druid can scale horizontally, meaning that you can add more nodes to your cluster to handle more data or queries.

- Fault Tolerance: Provides high availability and fault tolerance by replicating data across multiple nodes.

- Complex Aggregations: Supports a range of aggregations and complex queries, useful for detailed analytics over time-series or event data.

- Data Compression: Compresses data to reduce storage costs and minimize I/O, which boosts query performance.

- Time-series Focused: Particularly suited for time-series data, enabling complex calculations over data broken down by time intervals.

Druid Architecture: How Druid Works

Apache Druid is designed with a distributed, scalable architecture optimized for real-time and historical data ingestion and fast querying. Its architecture is composed of various types of nodes, each serving a specific purpose within a cluster. Here’s an overview of Druid’s key components and how they work together to deliver high-performance analytics:

Key Components of Druid Architecture

- Coordinator Node

The coordinator node manages the data distribution across historical nodes in the Druid cluster. It ensures that segments are well-balanced and manages the lifecycle of data segments, including load and deletion. The coordinator also plays a role in segment compaction and managing cluster capacity.

- Overlord Node

The overlord is responsible for task management and ingestion. It accepts ingestion tasks (such as batch or real-time ingestion) and assigns these tasks to middle manager nodes. The overlord monitors and coordinates these tasks, ensuring the data is ingested and indexed properly.

- Historical Nodes

Historical nodes store and serve immutable, historical data segments. They are optimized for handling large volumes of stored data and respond to query requests for historical information. Historical nodes work in conjunction with the broker node to provide fast, reliable querying.

- Middle Manager Nodes

Middle manager nodes handle ingestion tasks and real-time data ingestion. They manage the data ingestion process and store the data temporarily before it is handed over to historical nodes. Middle managers also handle data transformations and filtering during ingestion.

- Broker Node

The broker node acts as a query router. When a client submits a query, the broker receives it and routes it to the appropriate historical or real-time nodes based on the query’s data range. The broker consolidates the responses from different nodes and returns the final result to the client.

- Router Node (Optional)

The router node provides a unified access point for Druid’s APIs and can be used to route client requests to the appropriate Druid services. It’s particularly useful in complex deployments where service discovery is needed.

- Deep Storage

Druid relies on external deep storage (such as Amazon S3, HDFS, or Google Cloud Storage) to store data segments for long-term retention. Historical data segments are retrieved from deep storage as needed, and it provides resilience by acting as the primary backup for data.

- Metadata Store

Druid uses a metadata store, typically a relational database like MySQL or PostgreSQL, to store cluster configurations, task information, and metadata related to segments. This metadata is essential for Druid’s operations and helps keep track of the cluster’s state.

How Druid Processes Data and Queries

- Ingestion

Druid supports both real-time and batch ingestion. During ingestion, data is transformed and indexed to allow fast querying. Real-time ingestion flows directly into middle manager nodes, while batch ingestion tasks are processed as periodic jobs.

- Storage

Once ingested, data is stored as segments. Segments are partitioned by time and, optionally, by other attributes to optimize query performance. These segments are immutable, ensuring that Druid can store and retrieve data efficiently.

- Query Processing

When a query is received by the broker node, it’s divided into sub-queries and routed to the appropriate historical and real-time nodes. These nodes process their respective segments and return partial results to the broker. The broker node then combines these results, applies any necessary final aggregations or filters, and returns the response to the client.
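The scatter-gather flow described above can be illustrated with a toy model. This is only a sketch of the idea, not Druid's implementation: the `Segment` class, the hour-based intervals, and the `page`/`views` columns are all hypothetical names chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Toy stand-in for an immutable segment covering hours [start, end)."""
    start: int
    end: int
    rows: tuple  # each row is (hour, page, views); hypothetical columns


def overlaps(segment, start, end):
    """True if the segment's time interval intersects the query interval."""
    return segment.start < end and start < segment.end


def scan_segment(segment, start, end):
    """Data-node side: compute a partial SUM(views) per page for one segment."""
    partial = defaultdict(int)
    for hour, page, views in segment.rows:
        if start <= hour < end:
            partial[page] += views
    return dict(partial)


def broker_query(segments, start, end):
    """Broker side: prune segments by time, fan out, then merge the partials."""
    merged = defaultdict(int)
    for segment in segments:
        if not overlaps(segment, start, end):
            continue  # segments outside the query interval are never scanned
        for page, views in scan_segment(segment, start, end).items():
            merged[page] += views
    return dict(merged)


segments = [
    Segment(0, 24, ((1, "home", 10), (5, "docs", 4))),
    Segment(24, 48, ((25, "home", 7), (30, "docs", 1))),
    Segment(48, 72, ((50, "home", 3),)),
]

# Query the first two days; the third segment is pruned by its time interval.
print(broker_query(segments, 0, 48))
```

Because sums can be merged from partial sums, each node only needs to return one small result per segment, which is what lets the broker combine answers cheaply.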

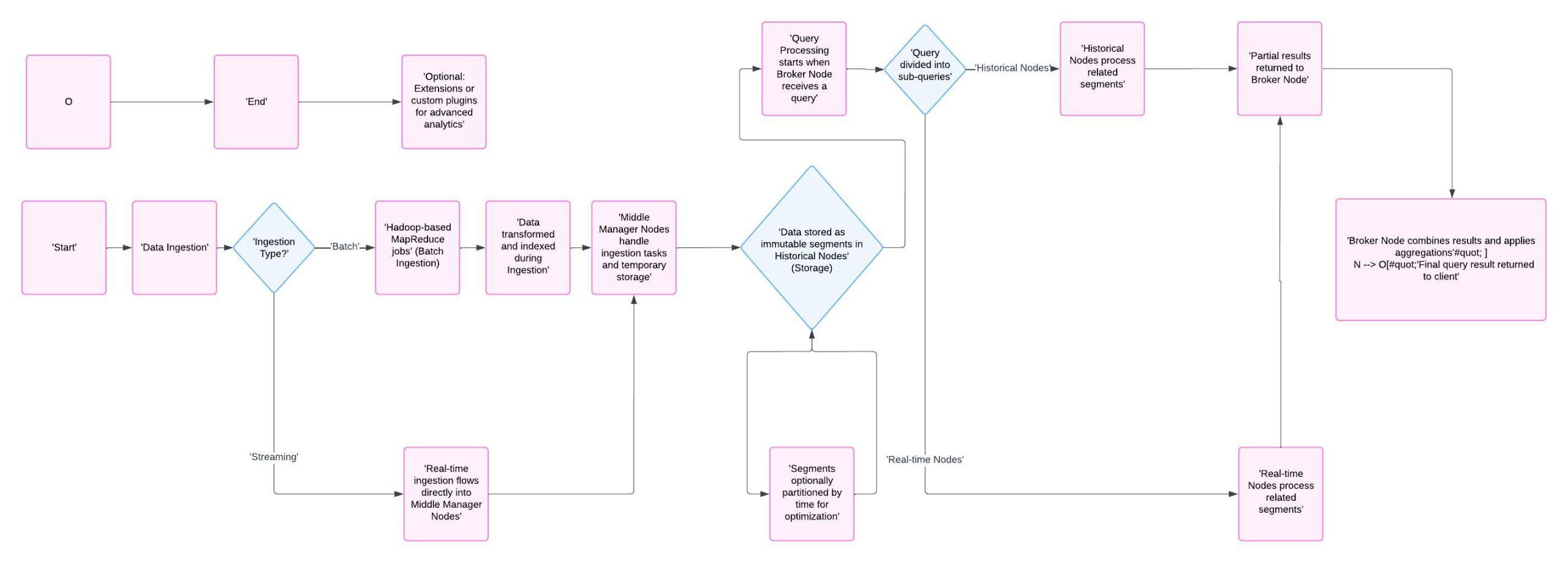

Below is a diagram that visually represents Apache Druid’s architecture:

Druid Architecture Diagram

This diagram illustrates the flow of data and queries across Druid’s components, showing how ingestion, storage, and query processing work together to deliver high-performance analytics.

Typical Use Cases for Apache Druid

- Real-time analytics for web applications, IoT systems, and other event-driven applications

- Dashboards for monitoring business metrics

- Fraud and anomaly detection

- Streaming data analytics

- Complex drill-down reports and business intelligence

Setting Up Apache Druid

Installation Steps

Prerequisites

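Ensure you have a supported Java version installed (Java 8 or higher; recent Druid releases require a newer LTS version, so check the requirements for the release you download). Confirm by running java -version in a terminal.

The output should report a compatible version.

Adequate system resources (memory and CPU) are also required; the amount depends on data volume.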

Download Druid

Visit the Apache Druid download page and download the latest stable release.

Extract and Configure

- Extract the downloaded archive.

- Configure: Navigate to the conf directory to configure Druid.

- For development, you can use the quickstart configuration provided.

Start Druid Services

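To start Druid, run the start script from the root of the extracted directory (bin/start-druid in recent releases; older releases provide scripts such as bin/start-micro-quickstart).

The script prints a line for each service it launches.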

Access Druid Console

Open http://localhost:8888/ in your browser to access the Druid web console.
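If you prefer to check from a script that the services are up before opening the console, a minimal sketch follows. It assumes the router is listening on the quickstart address above and uses the `/status/health` endpoint that Druid services expose; the function names are made up for this example.

```python
import urllib.error
import urllib.request


def health_url(base_url="http://localhost:8888"):
    """Build the health-check URL for a Druid service (router by default)."""
    return base_url.rstrip("/") + "/status/health"


def is_healthy(base_url="http://localhost:8888", timeout=5.0):
    """Return True if the service answers its health check with `true`."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as resp:
            return resp.status == 200 and resp.read().strip() == b"true"
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure: treat all as "not ready yet".
        return False
```

Calling `is_healthy()` in a short retry loop is a simple way to wait for startup to finish in automated setups.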

Ingesting Data into Apache Druid

To analyze data, you need to ingest it into Druid. Here are the primary methods to do so:

Batch Ingestion

Use Druid’s Native Batch Ingestion for importing data from files (e.g., CSV, JSON). This can be ideal for ingesting historical data from MySQL, which can be exported as CSV.

Real-Time Ingestion

Using Apache Kafka as a buffer between MySQL and Druid is a robust method for streaming data, enabling continuous data ingestion.
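As a rough illustration, the Druid side of such a pipeline is defined by a Kafka supervisor spec. The sketch below builds one in Python and prints it as JSON. The datasource, topic, broker address, and column names are placeholders, the granularity settings are arbitrary choices, and it assumes the Kafka indexing service extension is loaded. How MySQL changes reach the Kafka topic is outside the scope of this sketch.

```python
import json


def kafka_supervisor_spec(datasource, topic, bootstrap_servers,
                          timestamp_column, dimensions):
    """Build a minimal Kafka supervisor spec as a plain dict."""
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                "timestampSpec": {"column": timestamp_column, "format": "auto"},
                "dimensionsSpec": {"dimensions": list(dimensions)},
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "HOUR",  # one time chunk per hour
                    "queryGranularity": "NONE",    # keep original timestamps
                    "rollup": False,
                },
            },
            "ioConfig": {
                "topic": topic,
                "inputFormat": {"type": "json"},
                "consumerProperties": {"bootstrap.servers": bootstrap_servers},
                "useEarliestOffset": True,  # read the topic from the beginning
            },
            "tuningConfig": {"type": "kafka"},
        },
    }


# Placeholder values; replace them with your own topic and schema.
spec = kafka_supervisor_spec(
    datasource="orders",
    topic="orders-topic",
    bootstrap_servers="localhost:9092",
    timestamp_column="created_at",
    dimensions=["order_id", "status"],
)
print(json.dumps(spec, indent=2))
```

A supervisor spec like this is submitted once (through the console or the supervisor API), after which Druid keeps ingestion tasks running against the topic.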

JDBC Ingestion (Recommended for MySQL)

This approach allows for direct data ingestion from MySQL using JDBC.

Steps:

- Download the latest MySQL JDBC driver.

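- Place the driver JAR in Druid’s extensions directory (for MySQL, typically the extensions/mysql-metadata-storage folder) and make sure that extension is included in the extensions load list.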

- Configure an ingestion spec to define the connection to your MySQL database.

Example Ingestion Spec

An example of an ingestion specification in JSON format for MySQL ingestion:
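A minimal sketch of such a spec, assuming Druid's SQL input source with a MySQL connector, is built below in Python and printed as JSON. The JDBC URI, credentials, table, and column names are placeholders invented for illustration, and the granularity settings are arbitrary; adapt all of them to your schema.

```python
import json


def mysql_ingestion_spec(datasource, jdbc_uri, user, password, query,
                         timestamp_column, dimensions):
    """Build a native batch (index_parallel) spec that reads rows over JDBC."""
    return {
        "type": "index_parallel",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                "timestampSpec": {"column": timestamp_column, "format": "auto"},
                "dimensionsSpec": {"dimensions": list(dimensions)},
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "DAY",
                    "queryGranularity": "NONE",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "type": "index_parallel",
                "inputSource": {
                    "type": "sql",
                    "database": {
                        "type": "mysql",
                        "connectorConfig": {
                            "connectURI": jdbc_uri,
                            "user": user,
                            "password": password,
                        },
                    },
                    # Each query in this list is run and its rows are ingested.
                    "sqls": [query],
                },
            },
            "tuningConfig": {"type": "index_parallel"},
        },
    }


# Placeholder connection details and schema, for illustration only.
spec = mysql_ingestion_spec(
    datasource="orders",
    jdbc_uri="jdbc:mysql://localhost:3306/shop",
    user="druid",
    password="change-me",
    query="SELECT order_id, status, created_at FROM orders",
    timestamp_column="created_at",
    dimensions=["order_id", "status"],
)
print(json.dumps(spec, indent=2))
```

Generating the spec from code rather than editing JSON by hand makes it easier to reuse the same structure across tables; in a real deployment the password would come from a secret store rather than a literal.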

Submitting the Ingestion Task

- Use Druid’s Overlord API to submit the ingestion spec.

- Alternatively, use the Druid Console to submit and monitor tasks.
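The API route can be sketched with Python's standard library. This assumes the quickstart router at http://localhost:8888 proxies the Overlord's task endpoint (`/druid/indexer/v1/task`) and that no authentication is configured; the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

TASK_PATH = "/druid/indexer/v1/task"


def build_task_request(spec, base_url="http://localhost:8888"):
    """Prepare (but do not send) the POST request that submits a task spec."""
    return urllib.request.Request(
        base_url.rstrip("/") + TASK_PATH,
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_task(spec, base_url="http://localhost:8888", timeout=30.0):
    """Send the spec and return the task ID reported in the response."""
    request = build_task_request(spec, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["task"]


def task_status_url(task_id, base_url="http://localhost:8888"):
    """URL to poll for the status of a previously submitted task."""
    quoted = urllib.parse.quote(task_id, safe="")
    return f"{base_url.rstrip('/')}{TASK_PATH}/{quoted}/status"
```

A typical flow is `task_id = submit_task(spec)` followed by periodic GET requests to `task_status_url(task_id)` until the task finishes; the console's task view shows the same information.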

Tips for Working with Druid

- Leverage Real-Time Ingestion: For applications requiring live data updates, such as financial trading platforms or social media analytics, real-time ingestion through Kafka or Kinesis is ideal.

- Optimize Queries with Compression: Druid’s data compression reduces storage and I/O, which allows for faster, more efficient queries on massive datasets.

- Utilize Columnar Storage: Because storage is columnar, a query reads only the columns it references; select only the columns you need to maximize query speed for analytics workloads.

- Consider Sharding and Replication: For larger datasets, improve scalability and availability through Druid’s segment partitioning and replication settings.

- Use JSON APIs: Druid’s JSON-based configuration makes integration with other systems straightforward, allowing for easy automation and scripting.
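As one example of scripting against these JSON APIs, the sketch below sends a Druid SQL query over HTTP. It assumes the quickstart router address and the SQL endpoint `/druid/v2/sql`; the `orders` datasource and the helper names are hypothetical.

```python
import json
import urllib.request

SQL_PATH = "/druid/v2/sql"


def build_sql_request(sql, base_url="http://localhost:8888"):
    """Prepare (but do not send) a POST request carrying a Druid SQL query."""
    return urllib.request.Request(
        base_url.rstrip("/") + SQL_PATH,
        data=json.dumps({"query": sql}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_sql(sql, base_url="http://localhost:8888", timeout=30.0):
    """Run the query and return the decoded JSON result rows."""
    with urllib.request.urlopen(build_sql_request(sql, base_url), timeout=timeout) as resp:
        return json.load(resp)


# Hourly event counts for the last day from a hypothetical "orders" datasource.
HOURLY_COUNTS = """
SELECT FLOOR(__time TO HOUR) AS "hour", COUNT(*) AS "events"
FROM "orders"
WHERE __time >= CURRENT_TIMESTAMP - INTERVAL '1' DAY
GROUP BY 1
ORDER BY 1
"""
```

With a running cluster, `run_sql(HOURLY_COUNTS)` would return the rows as a list; filtering on `__time` keeps the query limited to the segments for the requested interval.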

Recommended Books on Druid and Real-Time Analytics

- “Building Real-Time Analytics Systems: Leveraging Apache Druid” by Eric Tschetter

- “Learning Apache Druid: Real-Time Analytics at Scale” by Gian Merlino

- “Streaming Systems” by Tyler Akidau, Slava Chernyak, and Reuven Lax (general background on stream processing concepts relevant to streaming ingestion)

Conclusion

Apache Druid is a versatile, high-performance analytics database optimized for real-time and interactive data queries, especially well-suited for applications that rely on event-driven data. From real-time dashboards to ad-hoc queries, Druid excels in handling fast, OLAP-style queries on large datasets. In this foundational guide, we’ve covered the basics of Apache Druid and set up a functional ingestion pipeline from MySQL. Stay tuned for more as we dive into advanced Druid configurations, performance tuning, and integration with other tools in the modern data ecosystem!