Advanced Apache Pinot: Custom Aggregations, Transformations, and Real-Time Enrichment

Originally published on December 28, 2023

In this concluding post of the Apache Pinot series, we’ll explore advanced data processing techniques in Apache Pinot, such as custom aggregations, real-time transformations, and data enrichment. Several of these techniques shift work from query time to ingestion time and add context to raw events, so queries can return richer answers faster. As we finalize this series, we’ll also look ahead to how Apache Pinot could evolve with advancements in AI and ModelOps, laying a foundation for future exploration.

Sample Project Enhancements for Real-Time Enrichment

We’ll take our social media analytics project to the next level with real-time data transformations, custom aggregations, and enrichment. These advanced techniques help preprocess and structure data during ingestion, making it ready for faster querying and deeper insights.

Final Project Structure:

- data: Sample enriched data with aggregations and transformations.
- config: Schema and table configurations with transform functions.
- scripts: Automated scripts to manage data transformations.
- enrichment: Real-time enrichment scripts for joining external data sources, such as demographic information or location data.

Implementing Custom Aggregations in Pinot

Custom aggregations allow us to compute more complex metrics during ingestion, helping reduce query load and making results available immediately.

Adding a Moving Average Metric

- Define the Metric in the Schema: Add fields for moving averages of likes and shares to capture trends over time.

- Transformation Function for Moving Average: Configure a transformation function to calculate a 7-day moving average.
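Pinot's ingestion transforms (the `transformConfigs` section of the table config) are evaluated one record at a time. On their own, they cannot look back across seven days of events. One option is to compute the rolling value upstream, for example in the stream processor, and write it into the new field before the record reaches Pinot.

The following Python sketch shows only the windowing logic. The `likes_ma7` name and the sample counts are hypothetical.

```python
from collections import deque
from datetime import date, timedelta


def trailing_moving_average(daily_totals, window_days=7):
    """Map each calendar day to the mean of the last `window_days` days ending on it.

    `daily_totals` maps a date to that day's total (e.g. likes). Days missing
    from the input count as 0. The first few days average over however many
    days have been seen so far, because a full window is not yet available.
    """
    if not daily_totals:
        return {}
    day, last_day = min(daily_totals), max(daily_totals)
    window = deque()  # values for the most recent `window_days` days
    running_sum = 0
    averages = {}
    while day <= last_day:
        value = daily_totals.get(day, 0)
        window.append(value)
        running_sum += value
        if len(window) > window_days:
            running_sum -= window.popleft()  # drop the day that left the window
        averages[day] = running_sum / len(window)
        day += timedelta(days=1)
    return averages


# Hypothetical daily like counts for one post, Dec 1 to Dec 8.
likes_per_day = {
    date(2023, 12, 1) + timedelta(days=i): count
    for i, count in enumerate([10, 20, 30, 40, 50, 60, 70, 80])
}
likes_ma7 = trailing_moving_average(likes_per_day)  # hypothetical field name
print(likes_ma7[date(2023, 12, 8)])  # → 50.0 (mean of Dec 2..8)
```

Missing days are treated as zero so that the window always spans seven calendar days rather than seven active days.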

Real-Time Percentile Calculation

- Define a Percentile Metric: Pinot’s percentile estimation function (PERCENTILEEST) can estimate, in real time, how engaged users are with posts or how popular posts are, based on likes.
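Pinot's percentile functions, such as `PERCENTILEEST(likes, 90)`, run at query time and return an estimate rather than an exact value. To make the target of that estimate concrete, here is a plain-Python sketch of one common exact definition, the nearest-rank percentile.

Pinot's estimator is not guaranteed to match this definition value for value, and the sample like counts are made up.

```python
import math


def nearest_rank_percentile(values, pct):
    """Exact nearest-rank percentile: the smallest value that at least pct% of values do not exceed."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank in the sorted list
    return ordered[rank - 1]


# Hypothetical like counts for ten posts.
likes = [3, 15, 8, 42, 23, 4, 16, 108, 1, 60]
p90 = nearest_rank_percentile(likes, 90)
top_posts = [count for count in likes if count >= p90]
print(p90, top_posts)  # → 60 [108, 60]
```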

These aggregations are useful for quickly querying complex metrics without overloading Pinot with computation.

Real-Time Data Transformation: Adding Enrichment Layers

For applications requiring contextual insights, real-time data enrichment adds dimensions such as location or demographic attributes to incoming events.

Enriching Data with External Sources

To illustrate, we’ll enrich user interaction events with location data for region-focused analysis.

- Join with Location Dataset: Using a real-time Spark job, join events with an external location dataset on geo_location, then feed both the raw and enriched records into Pinot.
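The original pipeline performs this join in a Spark job. To illustrate the join logic only, here is a plain-Python sketch that looks up each event's geo_location in a small in-memory location table. It is a left join, so unmatched events are kept.

The location entries and the `city` and `region` field names are hypothetical. In Spark, a small reference table like this is typically broadcast to every executor so the join avoids a shuffle.

```python
from collections.abc import Iterable, Iterator

# Hypothetical location reference data, keyed by the events' geo_location value.
LOCATIONS = {
    "40.71,-74.01": {"city": "New York", "region": "North America"},
    "51.51,-0.13": {"city": "London", "region": "Europe"},
}


def enrich_events(events: Iterable[dict], locations: dict) -> Iterator[dict]:
    """Left-join each event with location attributes on its `geo_location` key.

    Unmatched events are kept with 'unknown' attributes, so no raw event is dropped.
    """
    for event in events:
        location = locations.get(event.get("geo_location"), {})
        yield {
            **event,  # keep every raw field unchanged (input dicts are not mutated)
            "city": location.get("city", "unknown"),
            "region": location.get("region", "unknown"),
        }


events = [
    {"post_id": "p1", "likes": 12, "geo_location": "51.51,-0.13"},
    {"post_id": "p2", "likes": 3, "geo_location": "0.00,0.00"},
]
for record in enrich_events(events, LOCATIONS):
    print(record["post_id"], record["region"])
# p1 Europe
# p2 unknown
```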

Calculating Engagement Scores with Custom Formulas

Derived fields can compute an engagement score that weights each type of interaction (likes, shares, and so on) differently.

With these custom metrics, you gain flexibility in calculating a composite engagement score, which can offer deeper insights into the types of content users find most engaging.
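One way to compute such a score is with a derived column in the table config's `ingestionConfig.transformConfigs`, which Pinot evaluates per record at ingestion. The derived column also has to be declared in the schema. The sketch below does two things:

- It builds that config fragment in Python using Pinot's Groovy transform syntax.
- It implements the same formula in plain Python for checking values.

The weights, the `comments` field, and the `engagement_score` column name are hypothetical. Some deployments disable Groovy transforms for security reasons; in that case, Pinot's built-in scalar functions are the alternative, so check the documentation for your version.

```python
import json

# Hypothetical weights: a share counts more than a comment, which counts more than a like.
WEIGHTS = {"likes": 1, "comments": 2, "shares": 3}


def engagement_score(event, weights=WEIGHTS):
    """Weighted sum of interaction counts; missing counts are treated as 0."""
    return sum(w * event.get(field, 0) for field, w in weights.items())


def engagement_transform_config(weights=WEIGHTS, column="engagement_score"):
    """Build one transformConfigs entry computing the same weighted sum with Groovy.

    Assumes every input column is non-null at ingestion time.
    """
    fields = list(weights)
    expression = " + ".join(f"{weights[f]} * {f}" for f in fields)
    return {
        "columnName": column,
        # Groovy({script}, arg1, arg2, ...) passes the listed columns into the script.
        "transformFunction": f"Groovy({{{expression}}}, {', '.join(fields)})",
    }


table_config_fragment = {
    "ingestionConfig": {"transformConfigs": [engagement_transform_config()]}
}
print(json.dumps(table_config_fragment, indent=2))
print(engagement_score({"likes": 10, "comments": 2, "shares": 1}))  # → 17
```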

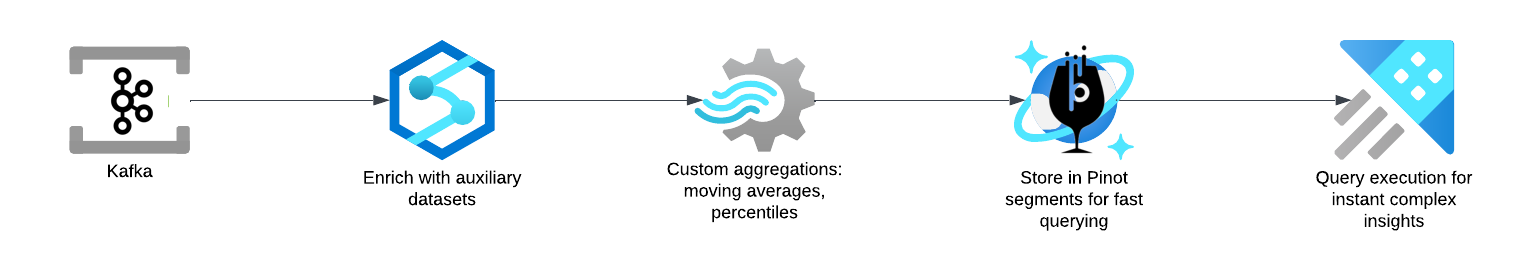

Data Flow and Architecture Diagram for Real-Time Enrichment and Aggregation

With real-time enrichment and custom aggregations in place, the data flow evolves to accommodate additional transformations.

- Data Ingestion: Events are ingested from Kafka and enriched with auxiliary datasets, such as demographic or location information.

- Aggregation and Transformation: Custom aggregations, like moving averages and percentiles, are calculated.

- Storage and Querying: Enriched data is stored in Pinot segments, ready for fast querying.

- Query Execution: Because the metrics are precomputed, Pinot can answer complex analytical queries with low latency.

Example Queries for Enriched and Aggregated Data

These queries use the custom metrics to analyze the enriched data.

Engagement Analysis by Location
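Here is a sketch of this query in Pinot SQL, sent from Python to the broker's REST endpoint using only the standard library. The `social_media_events` table and the `region` and `engagement_score` columns are hypothetical names carried over from the enrichment and scoring steps. The broker address assumes a local default setup.

```python
import json
import urllib.request

# Assumption: a local Pinot broker on its default port (8099); adjust for your cluster.
BROKER_URL = "http://localhost:8099/query/sql"

# Hypothetical table and column names from the enrichment and scoring steps.
ENGAGEMENT_BY_LOCATION_SQL = (
    "SELECT region, COUNT(*) AS event_count, SUM(engagement_score) AS total_engagement "
    "FROM social_media_events "
    "GROUP BY region "
    "ORDER BY SUM(engagement_score) DESC "
    "LIMIT 10"
)


def run_pinot_query(sql, url=BROKER_URL, timeout=10):
    """POST a SQL string to the Pinot broker's /query/sql endpoint and return the parsed JSON."""
    request = urllib.request.Request(
        url,
        data=json.dumps({"sql": sql}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Usage (requires a running broker):
# result = run_pinot_query(ENGAGEMENT_BY_LOCATION_SQL)
# print(result["resultTable"]["rows"])
```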

Moving Average of Likes Over Time
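Suppose the upstream job writes a precomputed 7-day average into a hypothetical `likes_ma7` column, with one row per post per day keyed by a hypothetical `event_day` column. Reading the trend is then a plain filtered scan. The helper below only builds the SQL string, which can be sent with any Pinot client or to the broker's `/query/sql` endpoint.

```python
def moving_average_sql(post_id, table="social_media_events", days=30):
    """Build a query for the last `days` daily rows of a post's precomputed 7-day average."""
    safe_id = post_id.replace("'", "''")  # escape single quotes inside the SQL string literal
    return (
        f"SELECT event_day, likes, likes_ma7 FROM {table} "
        f"WHERE post_id = '{safe_id}' "
        f"ORDER BY event_day DESC LIMIT {int(days)}"
    )


print(moving_average_sql("post_42"))
```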

Top Percentile of Most Liked Posts
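One way to find the most liked posts takes two steps:

1. Ask Pinot for the 99th-percentile like count with `PERCENTILEEST`.
2. Fetch the posts at or above that threshold.

Splitting the work this way avoids depending on subquery support, which differs between Pinot's query engines. Table and column names are hypothetical, and the helpers only build SQL strings.

```python
def percentile_threshold_sql(pct=99, table="social_media_events"):
    """Query for the estimated pct-th percentile of likes (PERCENTILEEST is approximate)."""
    return f"SELECT PERCENTILEEST(likes, {int(pct)}) AS likes_threshold FROM {table}"


def top_posts_sql(threshold, table="social_media_events", limit=20):
    """Query for posts whose like count is at or above the threshold from the first query."""
    return (
        f"SELECT post_id, likes FROM {table} "
        f"WHERE likes >= {int(threshold)} "
        f"ORDER BY likes DESC LIMIT {int(limit)}"
    )


print(percentile_threshold_sql())
# The threshold would come from the first query's result; 500 is a placeholder.
print(top_posts_sql(500))
```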

Looking to the Future: AI, ModelOps, and Apache Pinot

With these enhancements, our Apache Pinot project is ready for production-grade analytics. However, as the fields of AI and ModelOps continue to evolve, there are exciting possibilities for integrating machine learning and predictive analytics directly into Pinot.

Some potential future directions include:

- Real-Time ML Model Scoring: Integrate machine learning models into Pinot’s data pipeline to score events as they arrive, enabling predictive analytics on live data.

- Automated Anomaly Detection: With AI-driven anomaly detection, Pinot could flag unusual patterns in data as they occur.

- Integration with ModelOps Platforms: As ModelOps tools and pipelines mature, Pinot could serve as the foundational layer for deploying and serving machine learning models in analytics environments.

While this series concludes here, we may revisit it in the future as advancements in AI and ModelOps unfold, bringing new capabilities to real-time analytics with Apache Pinot.

Conclusion

In this series, we’ve journeyed from the fundamentals of Apache Pinot to advanced configurations, custom aggregations, real-time data transformations, and production deployments. As data analytics demands evolve, Apache Pinot stands ready to handle scalable, low-latency querying for both traditional and advanced analytics.

Thank you for following along, and stay tuned for future updates as we explore emerging opportunities in AI-powered real-time analytics with Apache Pinot!