Advanced Apache Druid: Sample Project, Industry Scenarios, and Real-Life Case Studies

Introduction

Following our introductory post on Apache Druid basics, this guide moves to more advanced configuration through a hands-on sample project. Druid’s speed and scalability make it a go-to choice for real-time analytics across many industries, so we also look at how several companies use it and the business benefits they report.

Sample Project: E-commerce Sales Analytics Dashboard

In this project, we’ll set up an analytics dashboard for an e-commerce platform. The dashboard will use Apache Druid to track, analyze, and visualize sales, customer behavior, and product interactions in real time.

Project Structure

The project keeps ingestion, configuration, and visualization separate; for example, the ingestion spec lives in the druid_configs/ directory used in Step 3.

Step-by-Step Guide

Step 1: Set Up Apache Druid

Follow the setup steps from our previous Druid blog if you haven’t already. Ensure Druid is running on your machine.
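To confirm Druid is up before continuing, you can call the /status/health endpoint that every Druid service exposes; it returns the JSON value true when the service is healthy. The sketch below assumes the quickstart router at http://localhost:8888 (the same address as the web console); the function names are hypothetical helpers, not part of any Druid client library.

```python
import urllib.request

# Assumption: the quickstart router is listening on localhost:8888.
DRUID_URL = "http://localhost:8888"


def parse_health(body: str) -> bool:
    """/status/health returns the literal JSON value `true` when healthy."""
    return body.strip() == "true"


def druid_is_up(base_url: str = DRUID_URL, timeout: float = 5.0) -> bool:
    """Return True if the service at base_url reports itself healthy."""
    try:
        with urllib.request.urlopen(f"{base_url}/status/health", timeout=timeout) as resp:
            return parse_health(resp.read().decode("utf-8"))
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses:
        # treat any of them as "not running".
        return False


if __name__ == "__main__":
    print("Druid is running" if druid_is_up() else f"Druid is not reachable at {DRUID_URL}")
```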

Step 2: Prepare Sample Data

Prepare or download a sample CSV file of sales transactions with the following columns:

- timestamp: The time of the transaction

- order_id: A unique identifier for each order

- customer_id: A unique identifier for each customer

- product_id: A unique identifier for each product

- category: The product category

- amount: The order amount

- quantity: Quantity purchased
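If you don't have a dataset at hand, a small synthetic file with these columns is enough to follow along. This is a sketch only: the output path data/sales.csv, the ID formats, and the category names are hypothetical, and all values are random. Timestamps are written in ISO 8601 (UTC), which Druid can parse directly.

```python
import csv
import random
from datetime import datetime, timedelta, timezone
from pathlib import Path

# Column order matches the list above.
COLUMNS = ["timestamp", "order_id", "customer_id", "product_id", "category", "amount", "quantity"]
# Hypothetical category names, used only to produce synthetic rows.
CATEGORIES = ["electronics", "books", "clothing", "home", "toys"]


def generate_rows(n: int, start: datetime, seed: int = 42):
    """Yield n synthetic sales transactions, one minute apart, starting at `start`."""
    rng = random.Random(seed)  # fixed seed so the file is reproducible
    for i in range(n):
        ts = start + timedelta(minutes=i)
        quantity = rng.randint(1, 5)
        unit_price = round(rng.uniform(5.0, 200.0), 2)
        yield {
            "timestamp": ts.strftime("%Y-%m-%dT%H:%M:%SZ"),  # ISO 8601, UTC
            "order_id": f"ORD-{i + 1:06d}",
            "customer_id": f"CUST-{rng.randint(1, 500):04d}",
            "product_id": f"PROD-{rng.randint(1, 100):04d}",
            "category": rng.choice(CATEGORIES),
            "amount": round(unit_price * quantity, 2),
            "quantity": quantity,
        }


def write_csv(path: Path, n: int = 1000) -> Path:
    """Write n synthetic rows, with a header row, to `path`."""
    path.parent.mkdir(parents=True, exist_ok=True)
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    with path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS)
        writer.writeheader()
        writer.writerows(generate_rows(n, start))
    return path


if __name__ == "__main__":
    print(f"Wrote {write_csv(Path('data/sales.csv'))}")
```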

Step 3: Define the Ingestion Specification

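In the druid_configs/ingestion_spec.json file, define a batch ingestion spec that tells Druid where to read the CSV file, which column holds the timestamp, and which columns to store.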
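A minimal native batch spec for this data might look like the following, generated from Python so the CSV directory can be filled in as an absolute path. It is a sketch under assumptions: the datasource name ecommerce_sales, the data/ directory, daily segments, and disabling rollup are choices made here, not requirements. The overall shape (dataSchema, ioConfig, and tuningConfig for an index_parallel task reading through the local input source) follows Druid's native batch ingestion format.

```python
import json
from pathlib import Path


def build_ingestion_spec(base_dir: str, datasource: str = "ecommerce_sales") -> dict:
    """Return an index_parallel spec that loads the sales CSV files in base_dir."""
    return {
        "type": "index_parallel",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,  # hypothetical datasource name
                "timestampSpec": {"column": "timestamp", "format": "iso"},
                "dimensionsSpec": {
                    "dimensions": [
                        "order_id",
                        "customer_id",
                        "product_id",
                        "category",
                        # Numeric columns stored as typed columns, since rollup is off.
                        {"type": "double", "name": "amount"},
                        {"type": "long", "name": "quantity"},
                    ]
                },
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "day",
                    "queryGranularity": "none",
                    "rollup": False,  # keep one Druid row per order
                },
            },
            "ioConfig": {
                "type": "index_parallel",
                # The local input source reads files on the machine running the task.
                "inputSource": {"type": "local", "baseDir": base_dir, "filter": "*.csv"},
                "inputFormat": {"type": "csv", "findColumnsFromHeader": True},
            },
            "tuningConfig": {
                "type": "index_parallel",
                "partitionsSpec": {"type": "dynamic"},
            },
        },
    }


if __name__ == "__main__":
    out = Path("druid_configs/ingestion_spec.json")
    out.parent.mkdir(parents=True, exist_ok=True)
    # baseDir should be an absolute path the Druid process can read.
    spec = build_ingestion_spec(str(Path("data").resolve()))
    out.write_text(json.dumps(spec, indent=2))
    print(f"Wrote {out}")
```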

Step 4: Ingest Data into Druid

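Submit the Ingestion Task: Use the Druid web console, or submit the spec with curl: curl -X POST -H 'Content-Type: application/json' -d @druid_configs/ingestion_spec.json http://localhost:8888/druid/indexer/v1/task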

Monitor the Task: Check the task status on Druid’s console at http://localhost:8888.
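Both steps can also be scripted. The sketch below sends the same POST the curl command does to /druid/indexer/v1/task, which responds with {"task": "<id>"}, then polls /druid/indexer/v1/task/{taskId}/status until the task reports SUCCESS or FAILED. It assumes the quickstart router on localhost:8888 forwarding indexer requests to the Overlord; the polling interval, attempt limit, and helper names are hypothetical choices.

```python
import json
import time
import urllib.parse
import urllib.request
from pathlib import Path
from typing import Callable

# Assumption: the quickstart router on localhost:8888, which forwards
# /druid/indexer/* requests to the Overlord.
DRUID_URL = "http://localhost:8888"
TERMINAL_STATES = {"SUCCESS", "FAILED"}


def build_submit_request(spec: dict, base_url: str = DRUID_URL) -> urllib.request.Request:
    """Build the POST request that carries the ingestion spec as JSON."""
    return urllib.request.Request(
        f"{base_url}/druid/indexer/v1/task",
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_task(spec_path: str, base_url: str = DRUID_URL) -> str:
    """Submit the spec file and return the task ID from the {"task": "<id>"} response."""
    spec = json.loads(Path(spec_path).read_text())
    with urllib.request.urlopen(build_submit_request(spec, base_url), timeout=30) as resp:
        return json.loads(resp.read())["task"]


def fetch_status(task_id: str, base_url: str = DRUID_URL) -> dict:
    """GET /druid/indexer/v1/task/{taskId}/status and return the parsed body."""
    url = f"{base_url}/druid/indexer/v1/task/{urllib.parse.quote(task_id, safe='')}/status"
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.loads(resp.read())


def task_state(body: dict) -> str:
    """Pull the state (e.g. RUNNING, SUCCESS, FAILED) out of a status response."""
    return body["status"]["status"]


def wait_for_task(task_id: str, fetch: Callable[[str], dict] = fetch_status,
                  poll_seconds: float = 5.0, max_polls: int = 120) -> str:
    """Poll until the task reaches SUCCESS or FAILED and return that state."""
    for _ in range(max_polls):
        state = task_state(fetch(task_id))
        if state in TERMINAL_STATES:
            return state
        time.sleep(poll_seconds)
    raise TimeoutError(f"task {task_id} not finished after {max_polls} polls")


if __name__ == "__main__":
    task_id = submit_task("druid_configs/ingestion_spec.json")
    print("Submitted", task_id, "->", wait_for_task(task_id))
```

Passing the status fetcher in as a parameter keeps the polling loop testable without a running cluster.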

Industry Scenarios and Case Studies

1. Telecommunications: Real-Time Network Monitoring

- Example: Verizon uses Apache Druid to monitor millions of network events per second, ensuring real-time detection of faults and issues.

- Benefit: By using Druid, Verizon significantly improved its real-time monitoring capabilities, enabling proactive responses to network issues and improving customer satisfaction.

2. Media & Entertainment: Personalized Content Recommendations

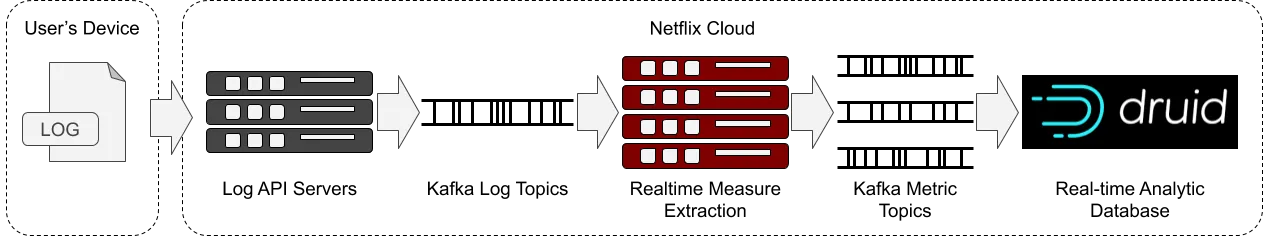

- Example: Netflix employs Apache Druid to provide real-time analytics on content consumption, user activity, and preferences.

- Benefit: Netflix has seen higher user engagement and retention by dynamically adapting content recommendations based on real-time data.

- Further reading: How Netflix uses Druid for Real-time Insights to Ensure a High-Quality Experience

3. Financial Services: Fraud Detection

- Example: Capital One utilizes Druid to analyze transaction data in real time, identifying fraudulent activity within seconds.

- Benefit: Capital One reduced fraud losses by over 40% by integrating Druid with its fraud detection systems, enhancing response times and improving customer trust.

4. Retail: Inventory Management and Customer Insights

- Example: Walmart uses Druid to track real-time inventory levels across stores, optimize restocking, and analyze customer purchase patterns.

- Benefit: By leveraging Druid, Walmart reduced out-of-stock incidents by 25%, improving inventory efficiency and customer satisfaction.

“After we switched to Druid, our query latencies dropped to near sub-second and in general, the project fulfilled most of our requirements. Today, our cluster ingests nearly 1B+ events per day (2TB of raw data), and Druid has scaled quite well for us.”

– Amaresh Nayak | Senior Distinguished Architect, Walmart Global Tech

Tips and Best Practices

- Use Aggregators Wisely: Every aggregator adds work at query time (and at ingestion time when rollup is enabled), so limit queries and ingestion specs to the aggregations your metrics actually need.

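- Implement Caching: Druid can cache per-segment results on Brokers and Historicals as well as whole-query results on Brokers; caching frequently repeated dashboard queries reduces server load and speeds up response times.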

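- Optimize Sharding: Druid shards each datasource into segments by time (segmentGranularity) and, optionally, by a secondary partitionsSpec such as hash or range partitioning. Choose these based on expected query patterns, for example partitioning on frequently filtered dimensions, to balance data distribution and performance.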

- Monitor Performance: Regularly check query times and resource usage, and consider scaling your Druid cluster if you notice bottlenecks.
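As an illustration of the first two tips, the sketch below sends a dashboard-style query to Druid's SQL endpoint (/druid/v2/sql) with only the two aggregations the panel needs, and sets the standard useCache and populateCache query context flags. Assumptions: the router on localhost:8888 and a datasource named ecommerce_sales (a hypothetical name for this project). The cache flags only have an effect if caching is enabled in the Broker/Historical configuration.

```python
import json
import urllib.request

# Assumptions: router on localhost:8888; "ecommerce_sales" is a hypothetical datasource.
SQL_ENDPOINT = "http://localhost:8888/druid/v2/sql"

# Only the two aggregations this dashboard panel needs.
REVENUE_BY_CATEGORY = """
SELECT category,
       SUM(amount)   AS revenue,
       SUM(quantity) AS units
FROM ecommerce_sales
WHERE __time >= CURRENT_TIMESTAMP - INTERVAL '1' DAY
GROUP BY category
ORDER BY revenue DESC
"""


def build_sql_payload(query: str, use_cache: bool = True) -> dict:
    """Build a Druid SQL API request body with cache-related query context flags."""
    return {
        "query": query.strip(),
        "context": {"useCache": use_cache, "populateCache": use_cache},
    }


def run_sql(query: str, endpoint: str = SQL_ENDPOINT) -> list:
    """POST the query; the default result format is a JSON array of row objects."""
    req = urllib.request.Request(
        endpoint,
        data=json.dumps(build_sql_payload(query)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    for row in run_sql(REVENUE_BY_CATEGORY):
        print(row["category"], row["revenue"], row["units"])
```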

Conclusion

In this advanced guide, we explored the power of Apache Druid for real-time analytics with a hands-on e-commerce analytics project. Apache Druid’s adaptability and performance make it a strong choice for companies needing high-speed data processing. As demonstrated by real-life case studies, Druid helps industries ranging from telecommunications to retail achieve competitive advantages through enhanced insights and rapid data analysis. Stay tuned for more on optimizing Druid, as we dive into Druid’s performance tuning, query optimization, and more advanced ingestion techniques!