Beyond Scale: Innovating to Build Smarter, Efficient, and Scalable AI Models

- AI in Today’s World: Machine Learning & Deep Learning Revolution

- Building Ethical AI: Lessons from Recent Missteps and How to Prevent Future Risks

- Generative AI: The $4 Billion Leap Forward and Beyond

- Beyond Scale: Innovating to Build Smarter, Efficient, and Scalable AI Models

- AI in the Workplace: How Enterprises Are Leveraging Generative AI

- RAG AI: Making Generative Models Smarter and More Reliable

- Concluding the AI Innovation Series: A Transformative Journey Through AI

Introduction: The Changing Landscape of AI Scalability

📌 Icon Insight: From foundational neural networks to revolutionary Large Language Models (LLMs) like GPT-4 and Google’s Gemini, AI’s journey has been driven by scaling. While expanding model sizes initially led to significant performance improvements, recent scaling attempts have faced mounting challenges in cost, energy, and complexity. Scaling is no longer about going bigger—it’s about going smarter.

🔍 Key Takeaway: The future of AI scalability lies in optimizing efficiency and adaptability through innovative approaches like Sparse AI and Modular AI.

Challenges in Scaling AI Models

As LLMs grow in size, several bottlenecks arise, limiting their scalability and practical deployment.

1. Technical Bottlenecks

- Computational Costs: Training models like GPT-4 requires thousands of GPUs running for weeks, consuming energy comparable to powering a small town.
  - Key Statistic: GPT-4 reportedly required 23,000 NVIDIA A100 GPUs, costing millions of dollars and consuming vast computational resources.
- Latency Issues: Larger models generally have slower inference, which makes them impractical for many real-time applications.
- Energy Consumption: Training GPT-3 emitted an estimated 552 metric tons of CO₂, roughly equivalent to driving a passenger car 1.2 million miles.

2. Financial Constraints

- High Training Costs: The cost of training large models concentrates innovation among a handful of tech giants.
  - Example: GPT-4's training cost is estimated to have exceeded $100 million.
- Cost of Deployment: Beyond training, hosting and inference impose ongoing costs.

3. Diminishing Returns

- Performance Saturation: Performance gains shrink as model size grows. For example, scaling from 100 billion to 1 trillion parameters might yield only a marginal increase in accuracy.

4. Environmental Impact

- Sustainability Concerns: Training large-scale models is environmentally taxing. Without improvements in energy efficiency, AI's carbon footprint will continue to grow.

📊 Performance Gains vs. Model Size

| Model Size (Billion Params) | Performance Improvement (%) |
|---|---|
| 1 | 40 |
| 10 | 70 |
| 100 | 85 |
| 1000 | 90 |

📉 Interpreting Results: The returns diminish significantly as models scale past a certain threshold, highlighting the inefficiency of brute-force growth.
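To see how quickly the returns shrink, the short Python sketch below computes the gain for each tenfold increase in model size. It uses only the figures from the table above; the function name marginal_gains is illustrative.

```python
# Figures copied from the table above: (parameters in billions, performance %).
table = [(1, 40), (10, 70), (100, 85), (1000, 90)]


def marginal_gains(rows):
    """Return (smaller size, larger size, gain in points) for each consecutive pair."""
    gains = []
    for (size_a, perf_a), (size_b, perf_b) in zip(rows, rows[1:]):
        gains.append((size_a, size_b, perf_b - perf_a))
    return gains


for small, large, gain in marginal_gains(table):
    print(f"{small}B -> {large}B params: +{gain} points")
```

Each 10x step costs roughly ten times more compute, yet the gain falls from 30 points to 15 and then to 5.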

Innovative Solutions: Building Smarter AI Models

To overcome these challenges, researchers and organizations are focusing on smarter techniques to improve efficiency, scalability, and flexibility without relying on sheer size.

1. Sparse AI: Efficiency Without Compromise

Sparse AI activates only a subset of model parameters during inference, significantly reducing computational and energy costs.

Techniques in Sparse AI

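- Dynamic Sparsity: Changes which connections are active during training, pruning weak connections and growing new ones so that only relevant connections are used.
- Mixture of Experts (MoE): A learned router sends each input token to a small number of specialized expert sub-networks, so only a fraction of the parameters runs per token, improving performance at reduced computational cost.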
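The routing idea behind Mixture of Experts can be sketched in a few lines of plain Python. This is a simplified, hypothetical illustration: real MoE layers compute the router logits from each token with a learned linear layer and use feed-forward networks as experts, whereas here the logits are fixed numbers and the experts are toy functions. Only the k selected experts are evaluated, which is where the compute savings come from.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Keep the k highest-probability experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, router_logits, k=2):
    """Evaluate only the selected experts and mix their outputs by gate weight."""
    gates = top_k_route(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Toy stand-ins: in a real model each expert is a feed-forward network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
router_logits = [0.1, 2.0, 1.5, -1.0]  # hypothetical router scores for one token

gates = top_k_route(router_logits, k=2)
print(sorted(gates))  # [1, 2]: only two of the four experts run
print(moe_forward(3.0, experts, router_logits, k=2))
```

Setting k=1 gives the single-expert routing used by the Switch Transformer; larger k trades more compute for a smoother mixture.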

Real-World Applications of Sparse AI

- Google's Switch Transformer: Routes each token to a single expert, which allowed the model to scale past a trillion parameters and deliver up to 7x faster pre-training than a dense T5 baseline with the same compute per token.
- OpenAI's Sparse Transformer: Uses factorized sparse attention to model long sequences of text, images, and audio more efficiently than full attention.
- Healthcare: Pruned (sparse) convolutional neural networks (CNNs) can analyze X-rays or CT scans on edge devices, enabling near-real-time diagnostics.

Industries That Benefit Most from Modular AI

Modular AI’s flexibility, scalability, and adaptability make it a powerful tool across various industries. Here are the industries that benefit most from Modular AI, with real-world applications and specific advantages:

1. Healthcare

Why Modular AI is Beneficial:

- Modular AI allows healthcare providers to customize solutions for specific needs, such as diagnostics, patient monitoring, and treatment planning.
- Different AI modules can address different parts of healthcare workflows, from imaging to predictive analytics.

Applications:

- Medical Imaging Analysis:
  - A dedicated imaging module processes X-rays, MRIs, and CT scans to detect anomalies.
  - Example: IBM Watson Health (now Merative) applied modular AI to medical imaging and oncology treatment planning.
- Patient Monitoring:
  - Monitoring modules analyze real-time patient data to detect irregularities such as arrhythmias.
  - Example: Wearables like Fitbit integrate AI modules for real-time health tracking.

Benefits:

- Flexibility to deploy only the required modules.
- Improved patient outcomes through task-specific AI.

2. E-Commerce and Retail

Why Modular AI is Beneficial:

- Modular AI enables retailers to integrate customer-centric functions such as recommendation engines, inventory management, and dynamic pricing as separate modules.
- Modules can be updated independently to meet changing market demands.

Applications:

- Product Recommendation Systems:
  - Tailored modules suggest products based on customers' browsing and purchase history.
  - Example: Shopify uses modular AI to personalize customer experiences.
- Inventory and Demand Forecasting:
  - AI modules optimize stock levels and predict future demand.
  - Example: Amazon uses modular AI to optimize its supply chain with real-time inventory updates.

Benefits:

- Faster rollout of features during seasonal sales or promotions.
- Higher customer satisfaction through real-time personalization.

3. Autonomous Vehicles

Why Modular AI is Beneficial:

- Autonomous vehicles rely on modular AI to manage multiple subsystems, such as perception, navigation, and decision-making.
- Each module can be improved or scaled independently, enabling continuous innovation.

Applications:

- Object Detection and Lane Tracking:
  - Perception modules analyze road conditions and identify obstacles.
  - Example: Tesla's Autopilot system uses modular AI for vision processing and path planning.
- Navigation and Route Planning:
  - AI modules optimize routes based on real-time traffic data.

Benefits:

- Faster updates for individual components, such as integrating new sensor data.
- Reduced development time when scaling autonomous driving capabilities.

4. Financial Services

Why Modular AI is Beneficial:

- Financial institutions can modularize their AI systems to handle fraud detection, risk assessment, and customer service separately.
- Independent modules let specific tasks, such as fraud detection, scale quickly during high transaction volumes.

Applications:

- Fraud Detection:
  - AI modules analyze transaction patterns for anomalies in real time.
  - Example: JPMorgan Chase uses modular AI for fraud detection and financial forecasting.
- Customer Sentiment Analysis:
  - Sentiment modules gauge customer feedback to improve services.

Benefits:

- Improved response times during peak transaction periods.
- Enhanced security with independently scalable fraud modules.

5. Manufacturing and Industry 4.0

Why Modular AI is Beneficial:

- Modular AI supports the automation of industrial processes, predictive maintenance, and quality control.
- Modules can be tailored to specific manufacturing lines or processes.

Applications:

- Predictive Maintenance:
  - AI modules analyze machine data to predict failures.
  - Example: Siemens uses modular AI for maintenance and efficiency optimization.
- Quality Control:
  - Vision-based modules inspect products for defects.

Benefits:

- Reduced downtime through targeted scaling of predictive maintenance systems.
- Cost-effective scaling across multiple factories.

6. Telecommunications

Why Modular AI is Beneficial:

- Telecommunication providers benefit from modular AI for network optimization, customer service, and predictive analytics.
- Independent modules provide flexibility in managing complex, high-traffic networks.

Applications:

- Network Optimization:
  - AI modules identify bottlenecks and optimize bandwidth usage.
  - Example: AT&T uses modular AI for 5G traffic management.
- Virtual Assistants:
  - Customer service modules respond to queries in real time.

Benefits:

- Cost-efficient scaling for high-demand services like 5G deployment.
- Improved customer satisfaction through intelligent virtual assistants.

7. Education and EdTech

Why Modular AI is Beneficial:

- Modular AI enables personalized learning experiences through adaptive learning modules and content recommendation systems.
- Modules can be deployed to address specific learning needs or topics.

Applications:

- Intelligent Tutoring Systems:
  - AI modules provide real-time feedback and custom lesson plans.
  - Example: Khan Academy's AI-powered tutor, Khanmigo, uses modular AI to adapt to individual student needs.
- Automated Grading Systems:
  - AI modules assess student assignments with high accuracy.

Benefits:

- Cost-effective deployment of educational tools in diverse settings.
- Scalable modules ensure consistent learning experiences for large student populations.

8. Smart Homes and IoT

Why Modular AI is Beneficial:

- Modular AI enables integration of various smart home functions, such as lighting control, security, and energy management.
- Modules operate independently, allowing easy customization.

Applications:

- Home Security:
  - AI modules analyze camera feeds for suspicious activities.
  - Example: Ring's modular AI system provides security alerts based on motion detection.
- Energy Optimization:
  - AI modules adjust heating and cooling based on user behavior.
  - Example: Nest Thermostat optimizes energy usage using modular AI.

Benefits:

- Flexible expansion of smart home features as user needs evolve.
- Improved efficiency through energy-saving modules.

9. Agriculture

Why Modular AI is Beneficial:

- Modular AI systems optimize crop management, irrigation, and pest control with specialized modules.
- Independent modules allow targeted applications in different environments.

Applications:

- Crop Health Monitoring:
  - AI modules analyze drone or satellite data to identify stressed areas.
  - Example: John Deere's smart tractors use modular AI for real-time field analysis.
- Irrigation Optimization:
  - AI modules automate water distribution based on soil and weather data.

Benefits:

- Increased productivity through efficient resource allocation.
- Scalable modules enable cost-effective adoption across farms of varying sizes.

10. Entertainment and Media

Why Modular AI is Beneficial:

- Modular AI supports content recommendation, personalization, and media analytics.
- Independent modules allow quick adaptation to audience preferences.

Applications:

- Recommendation Engines:
  - AI modules tailor content recommendations for users.
  - Example: Netflix uses modular AI for personalized movie suggestions.
- Content Generation:
  - AI modules create personalized media, such as automated video summaries.

Benefits:

- Enhanced viewer engagement through real-time personalization.
- Flexible scaling during high-traffic events like premieres or live streams.

Summary of Benefits by Industry

| Industry | Key Benefit of Modular AI |
|---|---|
| Healthcare | Customizable deployment for specific medical tasks like imaging or diagnostics. |
| E-Commerce | Personalized shopping experiences with independently scalable modules. |
| Autonomous Vehicles | Flexible updates for vision, navigation, and decision-making modules. |
| Financial Services | Improved fraud detection and scalability during peak transaction periods. |
| Manufacturing | Cost-effective automation and predictive maintenance for Industry 4.0. |
| Telecommunications | Scalable network optimization and real-time customer service. |
| Education | Personalized learning and adaptive tutoring for diverse student needs. |
| Smart Homes | Seamless integration of new smart devices and features. |
| Agriculture | Efficient crop management and irrigation tailored to specific environments. |
| Entertainment | Real-time content personalization and scalable recommendation engines. |

Limitations of Sparse AI

Sparse AI, while highly efficient and scalable, faces certain challenges that can limit its effectiveness in some applications. Here’s an overview of the primary limitations:

1. Training Complexity

Challenge:

Training sparse models requires specialized techniques, such as pruning, dynamic sparsity, or sparse tensor computations. These methods add complexity to the training process compared to dense models.

Impact:

- Increases the time and computational resources needed during training.
- Requires expertise in sparse architectures, limiting accessibility for teams without specialized knowledge.

Example:

Dynamic Sparse Training (DST) adds overhead for repeatedly identifying which connections to prune and which to regrow.
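To show where that overhead comes from, here is a minimal sketch of one prune-and-regrow update in the style of Sparse Evolutionary Training (SET): the weakest active weights are removed and the same number of inactive connections are regrown at random. The function name, flat-list representation, and initialization scale are assumptions for illustration; methods such as RigL instead pick regrown connections by gradient magnitude. Every update requires ranking the active weights and sampling new connections, work that dense training never does.

```python
import random


def prune_and_regrow(weights, mask, prune_fraction, rng):
    """One SET-style update on a flat weight list (modified in place).

    mask[i] is True when connection i is active. The weakest active weights are
    pruned and the same number of previously inactive connections are regrown,
    so the number of active connections stays constant.
    """
    active = [i for i, m in enumerate(mask) if m]
    inactive = [i for i, m in enumerate(mask) if not m]  # computed before pruning
    n_update = min(round(len(active) * prune_fraction), len(inactive))

    # 1) Prune: deactivate the n_update active weights with the smallest magnitude.
    for i in sorted(active, key=lambda j: abs(weights[j]))[:n_update]:
        mask[i] = False
        weights[i] = 0.0

    # 2) Regrow: activate n_update random connections that were inactive before
    #    this step, with a small random initial value (the scale is an assumption).
    for i in rng.sample(inactive, n_update):
        mask[i] = True
        weights[i] = rng.gauss(0.0, 0.01)
    return weights, mask


rng = random.Random(0)
mask = [i % 2 == 0 for i in range(20)]  # start with half the connections active
weights = [rng.gauss(0.0, 1.0) if m else 0.0 for m in mask]
before = sum(mask)
prune_and_regrow(weights, mask, prune_fraction=0.3, rng=rng)
print(before, sum(mask))  # 10 10: same budget of active connections
```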

2. Hardware Inefficiencies

Challenge:

Many existing hardware systems (e.g., GPUs, TPUs) are optimized for dense matrix operations. Sparse computations, due to irregular memory access patterns, may not utilize hardware efficiently.

Impact:

- Reduces the expected performance gains from sparsity.
- May lead to slower processing despite the theoretical reduction in computational requirements.

Example:

Sparse tensor operations often suffer from cache misses, which slow down computations on GPUs optimized for dense data.
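The irregular access pattern behind these cache misses is visible in the compressed sparse row (CSR) format commonly used for sparse matrices. In this plain-Python sketch (the helper names are hypothetical), each multiply reads x[col_idx[k]], an address that depends on the data, instead of streaming through memory in order. Python itself will not reproduce the cache behavior; the point is the data-dependent gather.

```python
def dense_to_csr(matrix):
    """Convert a dense row-major matrix (list of lists) to CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # end offset of this row's non-zeros
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A @ x from CSR arrays."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]  # gather: address depends on the data
        y.append(acc)
    return y


A = [[0, 2, 0, 0],
     [1, 0, 0, 3],
     [0, 0, 0, 0],
     [0, 4, 5, 0]]
x = [1.0, 2.0, 3.0, 4.0]
values, col_idx, row_ptr = dense_to_csr(A)
print(csr_matvec(values, col_idx, row_ptr, x))  # [4.0, 13.0, 0.0, 23.0]
print(f"multiplies: dense={len(A) * len(A[0])}, sparse={len(values)}")
```

The sparse version performs 5 multiplies instead of 16, but on a GPU tuned for contiguous dense tiles those 5 scattered reads can still be slower.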

3. Limited Support in AI Frameworks

Challenge:

AI frameworks like TensorFlow and PyTorch support sparse tensors, but their tools for creating and managing sparse architectures are less mature than those for dense models.

Impact:

- Developers face challenges in implementing, debugging, and deploying sparse AI models.
- Limited interoperability with other optimization techniques, such as quantization or mixed-precision training.

Example:

Sparse tensor support in TensorFlow's tf.sparse module and PyTorch's torch.sparse module covers fewer operations than the dense APIs, so sparse models often require additional development effort.

4. Accuracy Trade-Offs

Challenge:

Reducing the number of active parameters can lead to a loss in the model’s expressive power, which may reduce accuracy for complex tasks.

- Overly aggressive pruning can remove critical connections.
- Sparse models may struggle with tasks that require high-dimensional representations.

Impact:

- Sparse models may underperform dense models on some tasks, especially those involving complex relationships in the data.

Example:

In image recognition, overly sparse convolutional layers can miss subtle features, reducing classification accuracy.

5. Generalization Issues

Challenge:

Sparse models are sensitive to the quality of training data. If the data used to determine sparsity patterns is not representative, the resulting model may generalize poorly to new inputs.

Impact:

- Limited adaptability to unseen data or tasks.
- Increased risk of overfitting to the training dataset.

Example:

A sparse NLP model trained on a narrow dataset may fail to generalize to diverse language inputs.

6. Real-Time Inference Latency

Challenge:

While sparsity reduces the number of computations, the irregular memory access and dynamic activation patterns can increase latency during real-time inference.

Impact:

- Sparse models may not achieve the expected speedups in applications that require low-latency responses, such as chatbots or autonomous driving.

Example:

Sparse attention mechanisms such as Reformer's locality-sensitive hashing (LSH) attention add overhead for hashing tokens into buckets and sorting them, which can slow inference in real-time applications.
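The extra work in LSH attention happens before any attention is computed. The sketch below follows the angular hashing scheme described in the Reformer paper, h(x) = argmax([xR; -xR]) for a random matrix R, and then sorts tokens by bucket. It is a simplified single-hash illustration in plain Python (the function name and sizes are assumptions); production implementations use several hash rounds and batched tensor operations.

```python
import random


def lsh_buckets(vectors, n_buckets, rng):
    """Angular LSH: project with a random matrix R (dim x n_buckets/2)
    and take the argmax over the concatenation [xR, -xR]."""
    if n_buckets % 2 != 0:
        raise ValueError("n_buckets must be even")
    dim = len(vectors[0])
    half = n_buckets // 2
    R = [[rng.gauss(0.0, 1.0) for _ in range(half)] for _ in range(dim)]
    buckets = []
    for v in vectors:
        proj = [sum(v[i] * R[i][j] for i in range(dim)) for j in range(half)]
        scores = proj + [-p for p in proj]
        buckets.append(max(range(n_buckets), key=scores.__getitem__))
    return buckets


rng = random.Random(42)
tokens = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(6)]  # toy query/key vectors
buckets = lsh_buckets(tokens, n_buckets=4, rng=rng)

# Extra steps compared with full attention: sort tokens by bucket,
# then attend only within each bucket's chunk.
order = sorted(range(len(tokens)), key=lambda t: buckets[t])
print(buckets, order)
```

For long sequences this bookkeeping is far cheaper than full attention; for short, latency-sensitive requests it can cost more than it saves.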

7. Data Dependency

Challenge:

Sparse models often rely heavily on the structure of the data. If the input data changes or is highly variable, the sparsity patterns determined during training may no longer be optimal.

Impact:

- Requires frequent retraining or fine-tuning to adapt to new data distributions.
- Reduces the efficiency and effectiveness of sparsity over time.

Example:

A sparse recommendation system optimized for one product catalog may perform poorly if the catalog changes significantly.

8. Scalability of Training

Challenge:

While sparse models excel in inference efficiency, their training may require distributed systems to handle dynamic sparsity patterns and gradient updates.

Impact:

- Adds complexity to scaling sparse model training in distributed environments.
- May offset the efficiency gains achieved during inference.

Example:

Sparse models with Mixture of Experts (MoE) architectures require advanced load-balancing techniques to manage distributed training.
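One widely used load-balancing technique is the auxiliary loss from the Switch Transformer paper, alpha * N * sum_i(f_i * P_i), where N is the number of experts, f_i is the fraction of tokens routed to expert i, and P_i is the average router probability assigned to expert i. Adding it to the training loss nudges the router toward spreading tokens evenly. The sketch below computes it for top-1 routing in plain Python; the function names, the default alpha of 0.01, and the toy inputs are illustrative choices.

```python
def load_balancing_loss(router_probs, expert_choice, n_experts, alpha=0.01):
    """Auxiliary balancing loss: alpha * N * sum_i f_i * P_i.

    router_probs:  one probability vector (length n_experts) per token
    expert_choice: index of the expert each token was dispatched to (top-1)
    """
    n_tokens = len(router_probs)
    # f_i: fraction of tokens dispatched to expert i
    f = [0.0] * n_experts
    for e in expert_choice:
        f[e] += 1.0 / n_tokens
    # P_i: mean router probability assigned to expert i
    P = [sum(p[i] for p in router_probs) / n_tokens for i in range(n_experts)]
    return alpha * n_experts * sum(fi * pi for fi, pi in zip(f, P))


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


# Toy batches of 4 tokens routed across 2 experts (hypothetical numbers).
balanced_probs = [[0.6, 0.4], [0.4, 0.6], [0.6, 0.4], [0.4, 0.6]]
skewed_probs = [[0.9, 0.1]] * 4

balanced = load_balancing_loss(balanced_probs, [argmax(p) for p in balanced_probs], 2)
skewed = load_balancing_loss(skewed_probs, [argmax(p) for p in skewed_probs], 2)
print(round(balanced, 4), round(skewed, 4))  # 0.01 0.018
```

Under perfectly uniform routing the loss equals alpha, and it grows as traffic concentrates on fewer experts.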

9. Evaluation Challenges

Challenge:

Sparse models often require new evaluation metrics to measure the trade-offs between sparsity, accuracy, and efficiency. Standard metrics like FLOPs or latency may not fully capture these aspects.

Impact:

- Makes it difficult to benchmark sparse models against dense models.
- Leads to inconsistent performance reporting across different implementations.

Example:

Sparse models may show reduced FLOPs but higher latency on certain hardware, complicating comparisons.

10. Interpretability Issues

Challenge:

Sparse models, especially those with dynamic sparsity, can be harder to interpret than dense models. The reasoning behind which parameters are active may not be immediately clear.

Impact:

- Reduces trust and transparency, especially in high-stakes applications like healthcare or finance.
- Increases debugging complexity.

Example:

In a sparse fraud detection model, understanding why specific weights were pruned or activated may be challenging.

11. Cost of Maintaining Sparsity

Challenge:

Maintaining sparsity patterns through dynamic updates or periodic pruning adds computational overhead during training and fine-tuning.

Impact:

- Makes sparse models more complex and costly to maintain than static dense models.
- Can negate some of the efficiency benefits of sparsity.

Example:

Iterative magnitude pruning, the procedure used to find “winning tickets” under the lottery ticket hypothesis, requires multiple rounds of training and pruning to reach high sparsity.
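The cost of iterative pruning follows from simple arithmetic: if each round removes a fixed fraction p of the surviving weights, the fraction left after n rounds is (1 - p)^n, and every round involves another training run. The sketch below assumes a hypothetical 20% pruning rate and a target of keeping about 10% of the weights; the function names are illustrative.

```python
def remaining_after_rounds(prune_rate, rounds):
    """Fraction of weights left when each round prunes prune_rate of the survivors."""
    return (1.0 - prune_rate) ** rounds


def rounds_needed(prune_rate, target_remaining):
    """Smallest number of rounds that brings the remaining fraction to the target or below."""
    rounds = 0
    remaining = 1.0
    while remaining > target_remaining:
        remaining *= 1.0 - prune_rate
        rounds += 1
    return rounds


# Hypothetical schedule: prune 20% of surviving weights per round, keep about 10%.
n = rounds_needed(0.2, 0.10)
print(n, round(remaining_after_rounds(0.2, n), 4))  # 11 0.0859
```

At that rate, reaching roughly 91% sparsity takes 11 prune-and-retrain cycles, which is why this approach is expensive compared with training a single dense model.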

12. Limited Use Cases

Challenge:

Sparse models are most effective for tasks with naturally sparse data or high-dimensional inputs. Tasks requiring dense representations may not benefit from sparsity.

Impact:

- Reduces the applicability of sparse models across diverse domains.
- Dense models may remain the better choice for applications like image synthesis or dense semantic search.

Example:

Dense language models may outperform sparse ones on tasks that require nuanced understanding of context across long sequences.

Comparison: Sparse vs. Dense AI

| Aspect | Sparse AI | Dense AI |
|---|---|---|
| Efficiency | High for inference | High computational cost |
| Training Complexity | High due to sparsity techniques | Lower, with well-established methods |
| Accuracy | Slightly lower for complex tasks | Generally higher |
| Hardware Compatibility | Limited support | Optimized for dense operations |
| Scalability | Better for inference scaling | Better for training scalability |

Strategies to Overcome Limitations

- Hardware Innovation:
  - Develop hardware optimized for sparse tensor operations, such as NVIDIA's Ampere-generation GPUs, which accelerate 2:4 structured sparsity.
- Improved Frameworks:
  - Enhance support for sparse computations in AI frameworks like TensorFlow and PyTorch.
- Balanced Sparsity:
  - Use hybrid models that combine sparsity with dense components for critical tasks.
- Regular Fine-Tuning:
  - Periodically retrain sparse models to adapt to changing data distributions.
- Advanced Algorithms:
  - Use techniques like dynamic sparsity and Mixture of Experts to balance performance and efficiency.
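Hardware-friendly sparsity can be illustrated with the 2:4 structured pattern that NVIDIA's Ampere-generation GPUs accelerate: in every group of four consecutive weights, at most two are non-zero. The sketch below only applies that pruning pattern in plain Python (the function name is hypothetical); an actual speedup also requires storing the weights in the compressed format the hardware expects and running them through vendor libraries.

```python
def apply_2_4_sparsity(row):
    """Zero the two smallest-magnitude values in every group of four weights."""
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    out = list(row)
    for start in range(0, len(row), 4):
        group = range(start, start + 4)
        # Indices of the two smallest |w| in this group of four.
        for i in sorted(group, key=lambda j: abs(row[j]))[:2]:
            out[i] = 0.0
    return out


weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(apply_2_4_sparsity(weights))
# [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Because the pattern is regular (exactly 50% per group), hardware can skip the zeros without the irregular memory access that unstructured sparsity causes.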

Key Takeaway

Sparse AI offers significant advantages in efficiency and scalability but comes with challenges in training complexity, hardware compatibility, and generalization. Addressing these limitations requires advancements in algorithms, hardware, and frameworks to unlock its full potential.

2. Modular AI: Flexibility and Scalability

Modular AI breaks down complex systems into independent components, or “modules,” each optimized for specific tasks. This architecture allows for easier updates, scalability, and fault isolation.

Applications of Modular AI

- Autonomous Vehicles: Independent modules for vision processing, navigation, and decision-making.
- E-Commerce: Separate modules for recommendation engines, inventory management, and pricing optimization.
- Healthcare: Modular components for patient monitoring, imaging analysis, and predictive diagnostics.

Key Benefits of Modular AI

- Fault Isolation: Errors in one module don't affect the entire system.
- Scalability: Modules can be updated or added independently.
- Flexibility: Organizations can deploy task-specific modules without redesigning the entire system.

Key Features of Modular AI

- Task-Specific Modules
  - Each module is optimized for a particular task, such as text generation, image recognition, or recommendation.
- Reusability
  - Modules can be reused across different projects, reducing development time and effort.
- Scalability
  - Modular AI systems can scale easily by adding or updating individual components without affecting the entire system.
- Interoperability
  - Modules communicate through well-defined interfaces, enabling seamless integration of components developed by different teams or vendors.
- Customizability
  - Users can mix and match modules to create AI solutions tailored to specific needs.

How Modular AI Works

- Decomposition of Functions
  - Break the AI system into smaller modules, each responsible for a specific function or task.
- Defined Interfaces
  - Establish communication protocols or APIs to ensure interoperability between modules.
- Central Orchestration
  - Use an orchestrator or controller to coordinate the modules and manage the flow of data.
- Dynamic Assembly
  - Combine modules dynamically based on task requirements, enabling flexible and adaptive AI systems.
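These four steps can be sketched as a small Python program: a typing.Protocol plays the role of the defined interface, an orchestrator keeps a registry of modules, and the list of steps passed at run time decides which modules are assembled. All class names are hypothetical, and the toy modules stand in for real models.

```python
from typing import Dict, List, Protocol


class Module(Protocol):
    """Defined interface: a module has a name and maps a dict of fields to a new dict."""

    name: str

    def run(self, data: Dict[str, object]) -> Dict[str, object]: ...


class Tokenizer:
    """Hypothetical module: splits text into lowercase tokens."""

    name = "tokenizer"

    def run(self, data):
        return {**data, "tokens": str(data["text"]).lower().split()}


class KeywordSentiment:
    """Hypothetical module: a keyword rule standing in for a trained sentiment model."""

    name = "sentiment"
    POSITIVE = {"good", "great", "love"}

    def run(self, data):
        hits = sum(token in self.POSITIVE for token in data["tokens"])
        return {**data, "sentiment": "positive" if hits else "neutral"}


class Orchestrator:
    """Central orchestration: a registry of modules plus on-demand pipeline assembly."""

    def __init__(self) -> None:
        self.registry: Dict[str, Module] = {}

    def register(self, module: Module) -> None:
        self.registry[module.name] = module

    def run(self, steps: List[str], data: Dict[str, object]) -> Dict[str, object]:
        # Dynamic assembly: the caller's list of steps decides which modules run.
        for step in steps:
            data = self.registry[step].run(data)
        return data


orch = Orchestrator()
orch.register(Tokenizer())
orch.register(KeywordSentiment())
result = orch.run(["tokenizer", "sentiment"], {"text": "I love this product"})
print(result["sentiment"])  # positive
```

Swapping KeywordSentiment for a model-backed class with the same name and run method would require no change to the orchestrator, which is the practical payoff of the defined interface.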

How Modular AI Improves Scalability

Modular AI fundamentally enhances scalability by breaking down complex AI systems into smaller, independent, and reusable components (modules). This design approach allows businesses to efficiently scale systems in response to increasing demands or changing requirements while minimizing costs and complexity.

Key Ways Modular AI Improves Scalability

1. Independent Module Scaling

- How It Works: Each module can be scaled independently based on its workload. For example, if a recommendation module experiences high traffic, only that module needs to be scaled, leaving other components untouched.
- Benefit: Reduces resource waste by scaling only the parts of the system that need it, leading to more cost-efficient operations.

Example:

- E-Commerce Platforms: During peak shopping seasons, the recommendation engine module in an e-commerce system can be scaled independently without affecting the inventory or pricing modules.
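Independent scaling often comes down to computing a replica count per module from that module's own load. The sketch below uses a rule similar in spirit to the Kubernetes Horizontal Pod Autoscaler, replicas = ceil(load / capacity per replica) clamped to a minimum and maximum; the module names, loads, and capacities are hypothetical.

```python
import math


def replicas_needed(requests_per_sec, capacity_per_replica, min_replicas=1, max_replicas=50):
    """Replicas for one module: ceil(load / per-replica capacity), clamped to bounds."""
    wanted = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical per-module load and capacity, both in requests per second.
modules = {
    "recommendation": (1800, 100),  # peak-season surge
    "inventory": (120, 100),
    "pricing": (40, 100),
}
plan = {name: replicas_needed(load, cap) for name, (load, cap) in modules.items()}
print(plan)  # {'recommendation': 18, 'inventory': 2, 'pricing': 1}
```

Only the recommendation module grows during the surge; a monolithic deployment would have to replicate all three functions together.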

2. Parallel Development and Deployment

- How It Works: Teams can develop and deploy individual modules in parallel, enabling faster updates and reducing bottlenecks in the development cycle.
- Benefit: Enables continuous improvement and quicker scaling of specific functions without waiting for changes to the entire system.

Example:

- Autonomous Vehicles: A vision processing module can be upgraded or scaled separately to handle new sensor data without requiring updates to navigation or control modules.

3. Reusability Across Applications

- How It Works: Modular AI components are reusable across multiple systems and applications. Instead of building a new AI system from scratch, organizations can reuse existing modules in different deployments.
- Benefit: Reduces development costs and accelerates scaling for new projects.

Example:

- Healthcare Systems: A medical imaging analysis module built for X-rays can be adapted for MRI scans, typically after retraining on MRI data, extending its utility across departments or hospitals.

4. Dynamic Resource Allocation

- How It Works: Modular AI systems can dynamically allocate resources to different modules based on real-time demand or priorities. This ensures that high-demand modules receive adequate resources while idle modules consume minimal resources.
- Benefit: Optimizes resource utilization, making the system more responsive and cost-effective.

Example:

- Cloud AI Services: In a chatbot system, the NLP module can scale up during high query volumes, while sentiment analysis modules scale down when not in use.
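When the total resource pool is fixed, dynamic allocation can be as simple as dividing it in proportion to current demand. This sketch assumes a hypothetical budget of 10 GPU slots and per-module query rates; it uses largest-remainder rounding so the integer shares always add up to the budget, and idle modules receive nothing.

```python
def allocate(budget, demand):
    """Split an integer resource budget across modules in proportion to demand.

    Largest-remainder rounding keeps the total equal to the budget;
    modules with zero demand get zero.
    """
    total = sum(demand.values())
    if total == 0:
        return {name: 0 for name in demand}
    exact = {name: budget * d / total for name, d in demand.items()}
    shares = {name: int(x) for name, x in exact.items()}
    leftover = budget - sum(shares.values())
    # Give the remaining units to the modules with the largest fractional remainders.
    by_remainder = sorted(demand, key=lambda n: exact[n] - shares[n], reverse=True)
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


# Hypothetical chatbot load: queries per second hitting each module right now.
print(allocate(10, {"nlp": 900, "sentiment": 0, "summarizer": 100}))
# {'nlp': 9, 'sentiment': 0, 'summarizer': 1}
```

Re-running the allocation as demand changes moves capacity from idle modules to busy ones without resizing the overall pool.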

5. Plug-and-Play Expansion

- How It Works: New modules can be added to the system without affecting existing ones, enabling seamless expansion of capabilities.
- Benefit: Businesses can scale their systems incrementally, avoiding the need for a complete overhaul.

Example:

- Smart Home Systems: A smart assistant like Alexa can integrate a new home security module without disrupting other functions like music playback or smart lighting.

6. Improved Fault Isolation

- How It Works: Issues in one module are isolated, ensuring that the rest of the system remains functional. This minimizes downtime and improves overall reliability.
- Benefit: Makes scaling safer by reducing the risk of widespread system failures during expansion.

Example:

- Finance Systems: If a fraud detection module encounters an issue, payment processing and transaction modules remain unaffected, ensuring business continuity.
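At the code level, fault isolation often means the orchestrator wraps each module call so that an exception is contained and recorded instead of crashing the whole pipeline. The sketch below is a hypothetical illustration with two stand-in functions; what to do with a failed module's result (retry, fall back, or hold the transaction for review) is a policy decision the sketch leaves to the caller.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("orchestrator")


def run_isolated(modules, transaction):
    """Call every module independently; a failure is logged and recorded as None
    instead of aborting the remaining modules."""
    results = {}
    for name, fn in modules.items():
        try:
            results[name] = fn(transaction)
        except Exception as exc:  # contain any error raised inside the module
            log.warning("module %s failed: %s", name, exc)
            results[name] = None  # the caller decides on a fallback policy
    return results


def fraud_check(transaction):
    """Hypothetical fraud module simulating an outage."""
    raise RuntimeError("model endpoint unavailable")


def process_payment(transaction):
    """Hypothetical payment module."""
    return f"charged {transaction['amount']:.2f}"


out = run_isolated({"fraud": fraud_check, "payment": process_payment}, {"amount": 42.5})
print(out)  # {'fraud': None, 'payment': 'charged 42.50'}
```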

7. Cost-Efficient Scaling

- How It Works: By scaling only the modules needed, businesses save on computational resources, hardware costs, and energy consumption compared to scaling monolithic systems.
- Benefit: Enables organizations to achieve high scalability within budget constraints.

Example:

- Streaming Platforms: Scaling only the recommendation engine during a surge in viewers, rather than the entire system, reduces costs while maintaining the user experience.

8. Central Orchestration for Large Systems

- How It Works: A central orchestrator manages the interactions between modules, ensuring efficient communication and workload distribution.
- Benefit: Simplifies the scaling of complex, multi-module systems while maintaining performance.

Example:

- Autonomous Factories: A factory's central AI orchestrator dynamically adjusts the scaling of modules for robotic arms, quality control, and inventory tracking.

Visualizing Scalability in Modular AI

| Aspect | Monolithic AI Systems | Modular AI Systems | Scalability Advantage |
|---|---|---|---|
| Scaling Focus | Entire system | Specific modules | Reduced costs by scaling only high-demand components. |
| Fault Isolation | Difficult | Easy | Issues in one module do not affect the entire system. |
| Resource Allocation | Inefficient | Dynamic | Optimizes resource utilization, ensuring critical modules are prioritized. |
| Development Speed | Slower due to tight coupling | Faster due to parallel development | Faster updates and scaling through independent module upgrades. |
| Cost | High for system-wide scaling | Lower due to modular adjustments | Significant cost savings, especially for large-scale, cloud-based deployments. |

Real-World Examples of Modular AI

Modular AI is transforming industries by enabling scalable, flexible, and task-specific AI solutions. Below are prominent real-world examples of Modular AI across various domains:

1. Google’s Modular AI for Multimodal Tasks

Use Case: Multitasking Systems

- Google employs a modular architecture that combines language, vision, and reasoning AI modules, integrating NLP, image processing, and decision-making capabilities.
- Example Application:
  - Google Search integrates multiple modules for tasks like visual search, summarization of webpage content, and interactive Q&A.
  - Modules like Google Lens handle visual input, while BERT processes text.

Benefits:

- Task-specific modules improve overall system accuracy.
- Modular updates allow rapid improvements without overhauling the entire system.

2. IBM Watson Health

Use Case: Personalized Healthcare

- IBM Watson Health (now Merative) uses modular AI to deliver tailored healthcare solutions such as diagnosis, imaging analysis, and treatment planning.
- Example Application:
  - Hospitals use Watson's imaging analysis module for X-rays, while the decision-support module assists doctors with personalized treatment recommendations.

Benefits:

- Each hospital can deploy only the modules it needs.
- Modular updates improve accuracy for specific applications like cancer diagnosis.

3. OpenAI Codex

Use Case: Modular Programming Assistance

- OpenAI's Codex is a specialized module derived from GPT-3 and fine-tuned for programming and code generation.
- Example Application:
  - Codex powered the original release of GitHub Copilot, where it suggested code snippets, helped with debugging, and provided language-specific insights.

Benefits:

- Modular integration allows Codex to work within broader AI systems, combining programming with other functions like document analysis.

4. Tesla’s Autopilot

Use Case: Autonomous Driving

- Tesla's Autopilot system uses a modular design, with separate AI modules handling vision processing, sensor fusion, path planning, and vehicle control.
- Example Application:
  - Vision modules detect road signs and obstacles, while decision-making modules determine optimal driving routes.

Benefits:

- Modules can be updated or replaced independently (e.g., upgrading the vision module to handle new sensor data).
- Real-time adaptability supports safer navigation.

5. Amazon Alexa Skills

Use Case: Smart Home and Voice Assistance

-

-

- Amazon’s Alexa platform operates using a modular skill architecture, where individual modules handle specific tasks like playing music, setting reminders, or controlling smart devices.

- Example Application:

- Users can enable new skills (modules) to expand Alexa’s functionality, such as integrating third-party apps like Spotify or Philips Hue.

-

Benefits:

-

-

- Modular updates let developers add new functionalities without modifying the core system.

- Encourages third-party innovation by allowing independent developers to create specialized skills.

6. Modular AI in E-Commerce (Shopify)

Use Case: Personalized Recommendations

- Shopify uses modular AI to deliver tailored shopping experiences. Modules for recommendation systems, inventory management, and dynamic pricing work together to optimize the e-commerce pipeline.

- Example Application:

- A recommendation module suggests products based on browsing history, while the dynamic pricing module adjusts prices in real-time based on demand.

Benefits:

- Independent modules enable easy scaling for seasonal sales or customer-specific campaigns.

- Retailers can integrate only the features they need.

7. Microsoft Azure Cognitive Services

Use Case: AI-as-a-Service (AIaaS)

- Microsoft Azure offers modular AI services such as speech recognition, language understanding, and computer vision, allowing businesses to build custom AI systems.

- Example Application:

- A retailer integrates Azure’s vision module for inventory tracking and the sentiment analysis module to monitor customer feedback.

Benefits:

- Businesses can pay only for the modules they use.

- Flexible APIs make it easy to integrate modules into existing systems.

8. Nvidia Clara for Healthcare

Use Case: AI for Medical Imaging

- Nvidia’s Clara platform uses modular AI to process medical imaging data, develop drug simulations, and provide real-time analytics.

- Example Application:

- Hospitals use the imaging module for MRI scans and the analytics module for monitoring patient vitals.

Benefits:

- Modular design allows healthcare providers to add or upgrade capabilities incrementally.

- Task-specific optimization reduces computational overhead.

9. Modular AI in Gaming (Unity ML-Agents)

Use Case: Game Development

- Unity’s ML-Agents framework enables modular AI to control NPC behavior, optimize game environments, and enhance player experience.

- Example Application:

- Developers use separate modules for NPC decision-making and adaptive difficulty scaling.

Benefits:

- Developers can test and refine individual modules without impacting other game components.

- Modular AI enables rapid prototyping and deployment of new game features.

10. Modular AI in Finance (JP Morgan Chase)

Use Case: Fraud Detection and Financial Forecasting

- JP Morgan Chase employs modular AI to analyze transaction patterns, predict market trends, and detect fraudulent activities.

- Example Application:

- A fraud detection module monitors real-time transactions, while a forecasting module provides investment insights.

Benefits:

- Independent modules ensure scalability as transaction volumes grow.

- Task-specific optimization reduces latency in real-time fraud detection.
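A minimal sketch of a real-time fraud-detection module, assuming a simple statistical rule (flag a transaction more than three standard deviations from that customer's running average) instead of any bank's production model. Welford's online algorithm keeps constant-size state per customer, which is what lets a module like this keep pace as transaction volume grows. The threshold and warm-up length are illustrative.

```python
import math
from collections import defaultdict


class RunningStats:
    """Welford's online algorithm for a running mean and sample variance."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class FraudDetector:
    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold  # assumed z-score cutoff
        self.warmup = warmup        # transactions observed before any flagging
        self.stats: dict[str, RunningStats] = defaultdict(RunningStats)

    def check(self, customer: str, amount: float) -> bool:
        """Return True if the transaction looks anomalous, then learn from it."""
        s = self.stats[customer]
        suspicious = (
            s.n >= self.warmup
            and s.std > 0
            and abs(amount - s.mean) / s.std > self.threshold
        )
        s.update(amount)
        return suspicious


detector = FraudDetector()
for amount in [20, 25, 22, 30, 18, 24]:
    detector.check("alice", amount)
print(detector.check("alice", 26))    # False
print(detector.check("alice", 5000))  # True
```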

Visual Summary: Real-World Examples of Modular AI

| Industry | Use Case | Example System | Modules |
|---|---|---|---|
| Autonomous Driving | Navigation and Safety | Tesla Autopilot | Vision, path planning, sensor fusion, vehicle control |
| Healthcare | Imaging and Diagnosis | IBM Watson Health | Imaging analysis, decision support, personalized treatment |
| Voice Assistance | Smart Home Integration | Amazon Alexa | Music, reminders, third-party integrations (e.g., Spotify, smart lights) |
| E-Commerce | Personalized Shopping | Shopify | Recommendations, inventory management, dynamic pricing |
| Finance | Fraud Detection and Insights | JP Morgan Chase | Fraud detection, financial forecasting, sentiment analysis |
| AI-as-a-Service | Custom AI Systems | Microsoft Azure Cognitive Services | Speech recognition, sentiment analysis, computer vision |

Challenges of Scaling Modular AI

- Integration Complexity: Managing communication and dependencies between modules becomes harder as the system scales.

- Orchestration Overheads: Scaling multiple modules may require advanced orchestration, increasing system management costs.

- Security and Compatibility: Scaling modules independently may create vulnerabilities if security protocols or compatibility checks are overlooked.

Future Trends in Modular AI Scalability

- Dynamic Scaling with AI Orchestration: AI-driven orchestrators will predict demand spikes and scale modules proactively.

- Composable AI Marketplaces: Businesses will purchase and scale pre-built AI modules from marketplaces, reducing development overhead.

- Edge AI Integration: Modular AI systems will scale across edge devices, enabling decentralized and efficient processing in IoT networks.

- Auto-Healing Systems: Modular systems will automatically detect and replace underperforming modules to ensure consistent scalability.
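A minimal sketch of proactive scaling, assuming a module whose per-replica capacity is known. A least-squares trend over recent load stands in for the "AI-driven" forecaster (a production orchestrator might use a learned model), and the replica count is chosen for the predicted load rather than the current one. `PredictiveScaler` and its parameters are hypothetical, not part of Kubernetes or any orchestrator's API.

```python
import math
from collections import deque


class PredictiveScaler:
    """Choose replicas for the next interval from a linear-trend load forecast."""

    def __init__(self, capacity_per_replica: float, window: int = 5,
                 min_replicas: int = 1, max_replicas: int = 20) -> None:
        self.capacity = capacity_per_replica  # requests/s one replica serves (assumed)
        self.history: deque[float] = deque(maxlen=window)
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas

    def forecast(self) -> float:
        """Fit a least-squares line to recent samples and extrapolate one step."""
        n = len(self.history)
        if n < 2:
            return self.history[-1] if n else 0.0
        xs = range(n)
        x_mean = (n - 1) / 2
        y_mean = sum(self.history) / n
        num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, self.history))
        den = sum((x - x_mean) ** 2 for x in xs)
        return max(0.0, y_mean + (num / den) * (n - x_mean))

    def observe(self, requests_per_s: float) -> int:
        """Record the latest load and return the replica count to provision next."""
        self.history.append(requests_per_s)
        needed = math.ceil(self.forecast() / self.capacity)
        return min(self.max_replicas, max(self.min_replicas, needed))


scaler = PredictiveScaler(capacity_per_replica=100)
print([scaler.observe(load) for load in (100, 200, 300, 400)])  # [1, 3, 4, 5]
```

With steadily rising load, the final step provisions 5 replicas for the forecast 500 requests/s, where a purely reactive rule sized to the current 400 requests/s would provision 4.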

Limitations of Modular AI

While Modular AI offers significant advantages in flexibility, scalability, and efficiency, it also presents challenges that organizations must address to fully leverage its potential. Below are the key limitations of Modular AI:

1. Integration Complexity

- Challenge: Modules developed by different teams or vendors may not communicate seamlessly. Incompatibilities in APIs, data formats, or communication protocols can lead to integration issues.

- Impact: Increased development time and potential for system errors during deployment.

Example:

In a healthcare system, integrating a patient monitoring module with an imaging analysis module may require significant effort to standardize data formats and interfaces.

2. Orchestration Overhead

- Challenge: Managing the interactions between multiple modules adds an orchestration layer, which can introduce additional computational and operational complexity.

- Impact: Increased latency and resource usage in systems with high module interdependence.

Example:

A modular AI system in e-commerce that orchestrates inventory management, recommendation engines, and pricing modules might experience delays in real-time decision-making due to coordination overhead.

3. Dependency Management

- Challenge: Modules often depend on shared resources, such as databases or hardware. Managing these dependencies becomes more complex as the number of modules increases.

- Impact: Risk of resource contention, bottlenecks, or failure propagation across modules.

Example:

In an autonomous vehicle system, simultaneous demands on the vision module and decision-making module could lead to resource contention, slowing the system.

4. Security Risks

- Challenge: Modular systems increase the number of interfaces and communication points, each of which is a potential vulnerability. Ensuring the security of these interactions can be complex.

- Impact: Greater exposure to cyberattacks, such as data interception or unauthorized access to individual modules.

Example:

A financial system with separate fraud detection and transaction processing modules could be at risk if the communication channel between the two is not adequately secured.
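A minimal sketch of authenticating messages between two modules with Python's standard `hmac` module. The shared key and message layout are assumptions; an HMAC protects integrity and authenticity but not confidentiality, so a real deployment would also encrypt the channel (for example with TLS) and keep keys in a secret store.

```python
import hashlib
import hmac
import json

SHARED_KEY = b"demo-key-rotate-me"  # hypothetical; load from a secret manager in practice


def sign(payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Attach an HMAC-SHA256 tag computed over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": payload, "tag": tag}


def verify(message: dict, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag and compare in constant time to resist timing attacks."""
    body = json.dumps(message["body"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


# Transaction-processing module -> fraud-detection module
msg = sign({"txn_id": 42, "amount": 120.5})
print(verify(msg))               # True
msg["body"]["amount"] = 99999.0  # tampered in transit
print(verify(msg))               # False
```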

5. Redundancy and Inefficiency

- Challenge: Modular systems may duplicate functionalities across modules, leading to inefficiencies. This is particularly true when modules are developed independently without proper coordination.

- Impact: Higher resource usage and potential performance degradation.

Example:

In a modular AI healthcare system, both patient monitoring and diagnostic modules might independently process the same vital signs data, wasting computational resources.
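A minimal sketch of removing that duplication: both modules call one shared feature-extraction function memoized with `functools.lru_cache`, so each reading is processed once. The feature choices, thresholds, and call counter are illustrative; across separate services the same idea usually becomes a shared feature store or cache.

```python
from functools import lru_cache

CALLS = {"extract": 0}  # counts how often the expensive step actually runs


@lru_cache(maxsize=1024)
def vital_features(reading: tuple[float, ...]) -> tuple[float, float]:
    """Shared, cached feature extraction: mean and peak heart rate."""
    CALLS["extract"] += 1
    return sum(reading) / len(reading), max(reading)


def monitoring_module(reading: tuple[float, ...]) -> bool:
    mean_hr, _ = vital_features(reading)
    return mean_hr > 100  # illustrative alert rule


def diagnostic_module(reading: tuple[float, ...]) -> str:
    _, peak_hr = vital_features(reading)
    return "review" if peak_hr > 140 else "normal"  # illustrative rule


reading = (80.0, 85.0, 150.0, 81.0)  # tuples are hashable, so they work as cache keys
print(monitoring_module(reading), diagnostic_module(reading))  # False review
print(CALLS["extract"])  # 1: both modules shared a single computation
```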

6. Cost of Maintenance

- Challenge: Maintaining a modular system involves managing updates, compatibility, and performance tuning for each module.

- Impact: Increased maintenance costs and risk of system downtime during module upgrades.

Example:

Regular updates to an AI-powered recommendation engine may require retesting its integration with inventory and pricing modules, increasing operational overhead.

7. Limited Cross-Module Learning

- Challenge: Modular AI systems may limit the ability of modules to learn from each other, as they often operate in isolation. This could reduce the overall system’s ability to optimize across tasks.

- Impact: Suboptimal performance in tasks requiring holistic understanding or interdependence between components.

Example:

In a natural language processing (NLP) system, separate sentiment analysis and language translation modules might miss nuances that could be captured in a unified architecture.

8. Latency in Real-Time Applications

- Challenge: Modular AI systems often introduce latency due to the need for inter-module communication and orchestration. This can be problematic for real-time applications requiring low response times.

- Impact: Reduced system responsiveness in time-critical scenarios.

Example:

An autonomous drone system might experience delays in obstacle avoidance due to the time taken for vision and decision-making modules to coordinate.

9. Lack of Standardization

- Challenge: The lack of standardized frameworks for building and integrating modular AI systems can lead to inconsistencies and reduced interoperability.

- Impact: Organizations may face vendor lock-in or compatibility issues when adopting third-party modules.

Example:

Different AI vendors providing modules for a smart home system may use incompatible protocols, making integration difficult.

10. Suboptimal Performance for Specialized Tasks

- Challenge: Modular systems designed for general-purpose use may not achieve optimal performance for highly specialized tasks.

- Impact: Reduced effectiveness in niche applications compared to monolithic systems fine-tuned for specific use cases.

Example:

In a financial AI system, a modular fraud detection module might not perform as well as a monolithic system designed specifically for fraud analysis.

11. Difficulty in Debugging

- Challenge: Identifying the source of errors in a modular AI system can be complex, as issues may arise from individual modules or their interactions.

- Impact: Increased debugging time and difficulty isolating faults.

Example:

In a modular AI-powered chatbot, determining whether a delay is caused by the NLP module, the sentiment analysis module, or their orchestration can be challenging.
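A minimal sketch of per-module tracing with a timing decorator, so a slow response can be attributed to a specific module or to the orchestration layer between them. Module names, the sleep-based delays, and the toy sentiment rule are placeholders; production systems typically use a distributed tracing tool for the same purpose.

```python
import time
from collections import defaultdict
from functools import wraps

TIMINGS: dict[str, list[float]] = defaultdict(list)


def traced(name: str):
    """Record wall-clock seconds spent inside a function under `name`."""
    def deco(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                TIMINGS[name].append(time.perf_counter() - start)
        return wrapper
    return deco


@traced("nlp")
def parse(text: str) -> list[str]:
    time.sleep(0.01)  # placeholder for model inference
    return text.lower().split()


@traced("sentiment")
def score(tokens: list[str]) -> float:
    time.sleep(0.05)  # deliberately the slow module in this demo
    return 1.0 if "great" in tokens else 0.0


@traced("orchestration")
def handle(text: str) -> float:
    return score(parse(text))


handle("This is great")
module_time = sum(TIMINGS["nlp"]) + sum(TIMINGS["sentiment"])
overhead = sum(TIMINGS["orchestration"]) - module_time
print(max(("nlp", "sentiment"), key=lambda m: sum(TIMINGS[m])))  # sentiment
print(f"orchestration overhead: {overhead * 1000:.2f} ms")
```

Because the orchestration timer wraps the module calls, subtracting the module totals isolates the time spent in the glue code itself.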

12. Resource Overheads

- Challenge: While modular AI can save resources in some areas, it can also introduce overheads, such as additional storage for modular dependencies or computational costs for orchestration.

- Impact: Higher resource consumption in certain scenarios compared to a monolithic system.

Example:

A modular recommendation engine in a video streaming platform may consume more resources when handling frequent data exchanges between modules.

Strategies to Mitigate Limitations

- Adopt Standardized Protocols: Use interoperability standards such as ONNX (a common model format across frameworks) together with well-defined, versioned APIs between modules.

- Efficient Orchestration Tools: Leverage container orchestration platforms like Kubernetes to manage deployment, scaling, and communication between modules.

- Secure Interfaces: Implement robust encryption and authentication mechanisms for module communication.

- Regular Dependency Audits: Monitor and manage dependencies to reduce redundancy and conflicts.

- Performance Optimization: Streamline inter-module communication to minimize latency and inefficiencies.

- Invest in Observability: Develop tools to trace errors and speed up debugging across modular components.

Key Takeaway

While Modular AI offers significant benefits in flexibility, scalability, and reusability, it requires careful design, management, and standardization to address its inherent limitations. By proactively mitigating these challenges, organizations can maximize the potential of Modular AI while minimizing its downsides.

💡 Pro Tip: Start with a well-defined architecture and adopt tools like Docker and TensorFlow Serving to streamline modular integration and management.

Sparse AI vs. Modular AI: A Comparative Analysis

Sparse AI and Modular AI address different aspects of AI development and deployment, each with its strengths and limitations. Sparse AI focuses on computational efficiency and resource optimization by activating only a subset of parameters, while Modular AI emphasizes breaking systems into independent, task-specific components for scalability and flexibility. Here’s a detailed comparison:

1. Conceptual Focus

| Aspect | Sparse AI | Modular AI |
|---|---|---|
| Definition | Reduces computational load by selectively activating parameters or weights. | Decomposes AI systems into independent, task-specific modules. |
| Objective | Optimize resource usage and improve efficiency. | Enhance flexibility, scalability, and adaptability. |
| Core Approach | Dynamically or statically sparsify models to reduce complexity. | Design modular components that can operate independently. |
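A minimal sketch of selective activation via mixture-of-experts (MoE) routing, one common way Sparse AI is implemented: a router scores every expert, but each token is processed only by its top-k experts. Sizes, random weights, and the gate renormalized over the chosen experts are toy assumptions; real MoE layers batch tokens per expert instead of looping.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, N_EXPERTS, TOP_K = 8, 4, 1  # toy sizes (assumed)

# Each expert is a small linear layer; the router scores every expert for every token.
experts = rng.standard_normal((N_EXPERTS, D_MODEL, D_MODEL)) / np.sqrt(D_MODEL)
router = rng.standard_normal((D_MODEL, N_EXPERTS))


def moe_forward(x: np.ndarray, top_k: int = TOP_K) -> tuple[np.ndarray, int]:
    """Send each token only to its top-k experts; return outputs and expert calls made."""
    logits = x @ router                              # (tokens, N_EXPERTS)
    chosen = np.argsort(logits, axis=1)[:, -top_k:]  # best k experts per token
    out = np.zeros_like(x)
    calls = 0
    for t in range(x.shape[0]):
        scores = logits[t, chosen[t]]
        gates = np.exp(scores - scores.max())
        gates /= gates.sum()                         # softmax over the chosen experts only
        for gate, e in zip(gates, chosen[t]):
            out[t] += gate * (x[t] @ experts[e])     # unchosen experts are never evaluated
            calls += 1
    return out, calls


tokens = rng.standard_normal((6, D_MODEL))
y, calls = moe_forward(tokens)
print(y.shape, f"{calls} expert calls instead of {tokens.shape[0] * N_EXPERTS}")
# (6, 8) 6 expert calls instead of 24
```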

2. Key Features

| Feature | Sparse AI | Modular AI |
|---|---|---|
| Efficiency | Achieves efficiency by activating only a fraction of model parameters. | Focuses on efficiency by reusing and independently scaling modules. |
| Scalability | Scales well for inference tasks by reducing active computation. | Scales through independent module updates and additions. |
| Reusability | Limited; sparsity patterns are specific to a task or dataset. | High; modules can be reused across systems or applications. |
| Adaptability | Adaptable to new tasks with retraining for new sparsity patterns. | Highly adaptable; new modules can be added without affecting existing ones. |
| Fault Tolerance | Errors in sparsity patterns can degrade the entire model. | Errors are isolated to specific modules, reducing system-wide impact. |

3. Use Cases

Sparse AI:

- Ideal for: Tasks with high-dimensional data or natural sparsity, where computational efficiency is critical.

- Applications:

- Language Models: Sparse transformers like Reformer and BigBird for long-sequence NLP tasks.

- Image Recognition: Sparse convolutional networks for resource-constrained devices.

- Recommendation Systems: Efficient sparse matrix factorization for real-time recommendations.
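A minimal sketch of the sparse attention pattern behind models like BigBird, which combines sliding-window, global, and random attention; this version keeps only the window and global parts. For clarity it still builds the full n × n mask, whereas efficient implementations compute only the allowed blocks. Window size and the number of global tokens are assumptions.

```python
import numpy as np


def sparse_attention_mask(n: int, window: int, n_global: int) -> np.ndarray:
    """Boolean (n, n) mask: True where query i may attend to key j."""
    idx = np.arange(n)
    mask = np.abs(idx[:, None] - idx[None, :]) <= window  # local sliding window
    mask[:n_global, :] = True  # global tokens attend to every position
    mask[:, :n_global] = True  # and every position attends to them
    return mask


def masked_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray,
                     mask: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention restricted to positions allowed by `mask`."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)  # blocked pairs receive zero weight
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v


mask = sparse_attention_mask(1024, window=3, n_global=2)
print(f"{mask.sum() / mask.size:.1%} of the dense score matrix is kept")
# 1.1% of the dense score matrix is kept

rng = np.random.default_rng(0)
q = k = v = rng.standard_normal((16, 4))
out = masked_attention(q, k, v, sparse_attention_mask(16, window=2, n_global=1))
print(out.shape)  # (16, 4)
```

The number of allowed pairs grows roughly linearly with sequence length (about window size plus global tokens per row), which is why these models can handle much longer inputs than dense attention.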

Modular AI:

- Ideal for: Complex, multi-task AI systems requiring flexibility and task-specific optimization.

- Applications:

- Healthcare: Modular AI for imaging, diagnostics, and patient monitoring.

- E-Commerce: Independent modules for recommendation engines, inventory management, and pricing.

- Autonomous Vehicles: Separate modules for vision, navigation, and decision-making.

4. Advantages

| Aspect | Sparse AI | Modular AI |
|---|---|---|
| Computational Efficiency | Significantly reduces computational and memory overhead. | Efficient resource allocation for specific tasks or workflows. |
| Energy Efficiency | Lower energy consumption during inference. | Reduces redundant processing by isolating functionality. |
| Task Optimization | High performance for narrowly defined tasks. | Flexibility to optimize for multiple, diverse tasks. |
| Scalability | Scales well for resource-constrained environments like edge AI. | Easily scales by adding or updating modules. |

5. Limitations

Sparse AI:

- Hardware Support: Sparse models often underutilize traditional hardware, reducing efficiency gains.

- Training Complexity: Requires specialized techniques like pruning or dynamic sparsity.

- Generalization Challenges: Sparse patterns may not adapt well to new tasks or datasets.
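A minimal sketch of one-shot magnitude pruning, the simplest form of the pruning mentioned above: the smallest-magnitude weights are zeroed until a target sparsity is reached. The 90% target and random weights are illustrative. In practice the pruned model is usually fine-tuned afterwards to recover accuracy, and this unstructured pattern is exactly the kind that dense-oriented hardware struggles to accelerate.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero the `sparsity` fraction of weights with the smallest absolute value.

    Ties at the threshold are pruned too, so the result can be slightly sparser.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # how many weights to remove
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights).ravel()
    threshold = np.partition(magnitudes, k - 1)[k - 1]  # k-th smallest magnitude
    return np.where(np.abs(weights) > threshold, weights, 0.0)


rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256))
pruned = magnitude_prune(w, sparsity=0.9)
print(f"zeros: {(pruned == 0).mean():.2f}")  # zeros: 0.90
```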

Modular AI:

- Integration Complexity: Inter-module communication and dependencies can increase system complexity.

- Latency: Orchestration overhead may introduce latency in real-time applications.

- Maintenance Costs: Requires managing and updating multiple modules independently.

6. Complementary Use

Sparse AI and Modular AI are not mutually exclusive and can be combined to leverage their strengths. For example:

- Hybrid Approach:

- A modular AI system for autonomous vehicles can use sparse models within its vision module to optimize image recognition tasks while maintaining flexibility across other modules like navigation or control.

Example Use Case:

- Healthcare:

- A modular system for patient monitoring could use sparse AI within its diagnostic module to efficiently analyze ECG data, while other modules handle imaging or treatment recommendations.

7. Economic Impact

| Aspect | Sparse AI | Modular AI |
|---|---|---|
| Cost Efficiency | Reduces computation and energy costs during inference. | Reduces development and maintenance costs by reusing modules. |
| Development Time | Longer due to sparsity optimization and fine-tuning. | Shorter as modules can be built and deployed independently. |
| Hardware Requirements | Requires less hardware during inference but may face inefficiencies in training. | Compatible with existing hardware but may need orchestration tools. |

8. Best Fit Scenarios

| Scenario | Sparse AI | Modular AI |
|---|---|---|
| Edge AI and IoT | Sparse AI is ideal for resource-constrained devices like smartphones or sensors. | Modular AI is beneficial for integrating diverse IoT functionalities. |
| Enterprise AI Systems | Suitable for optimizing individual components, like recommendation engines. | Ideal for systems requiring flexible, multi-task solutions. |
| Real-Time Applications | Works well for low-latency, single-task scenarios. | May introduce latency due to module orchestration. |
| Research and Prototyping | Effective for experimenting with computational efficiency. | Excellent for rapidly building and testing new functionalities. |

Key Takeaways

| Aspect | Sparse AI | Modular AI |
|---|---|---|
| Focus | Optimizing computational and memory efficiency. | Enhancing scalability, flexibility, and adaptability. |
| Strengths | Energy efficiency and performance for narrowly defined tasks. | Scalability and modularity for complex systems. |
| Limitations | Training complexity, hardware inefficiencies. | Integration complexity, orchestration overhead. |
| Complementarity | Can be embedded within modular AI systems for optimized components. | Provides a framework for deploying sparse AI in multi-task environments. |

Pro Tip:

For organizations building scalable AI systems, consider combining Sparse AI for efficiency in computationally intensive tasks and Modular AI for flexibility in system design. This hybrid approach can maximize performance while minimizing resource consumption.

Side-by-Side Comparison: Sparse AI vs. Modular AI

| Aspect | Sparse AI | Modular AI |
|---|---|---|
| Definition | Reduces computational load by selectively activating model parameters. | Breaks AI systems into independent, reusable components designed for specific tasks. |
| Primary Goal | Computational and energy efficiency. | Scalability, flexibility, and adaptability across multiple tasks. |
| Core Technique | Pruning, sparse attention mechanisms, and sparse matrix optimizations. | Decomposition of AI systems into task-specific modules with defined interfaces for integration. |
| Hardware Utilization | May underutilize traditional hardware optimized for dense computations, requiring tailored sparse-friendly accelerators. | Compatible with traditional hardware but needs orchestration tools for module communication and scaling. |
| Training Complexity | High due to sparsity pattern optimization and fine-tuning. | Moderate, as modules are developed independently but require integration. |
| Reusability | Limited; sparsity patterns are task-specific and often tied to a dataset or domain. | High; modules can be used across applications and adapted to new tasks. |
| Scalability | Scales inference efficiently, especially in resource-constrained environments like edge AI. | Scales by adding or updating modules independently, suitable for complex multi-task systems. |
| Performance | Excels in narrowly defined, high-dimensional data tasks but may lose accuracy for generalized or complex systems. | Optimized for diverse, multi-task systems but may introduce latency and orchestration overhead. |

Hybrid Approach: Combining Sparse AI and Modular AI

For industries like healthcare, hybrid systems can combine the efficiency of sparse models with the flexibility of modular AI.

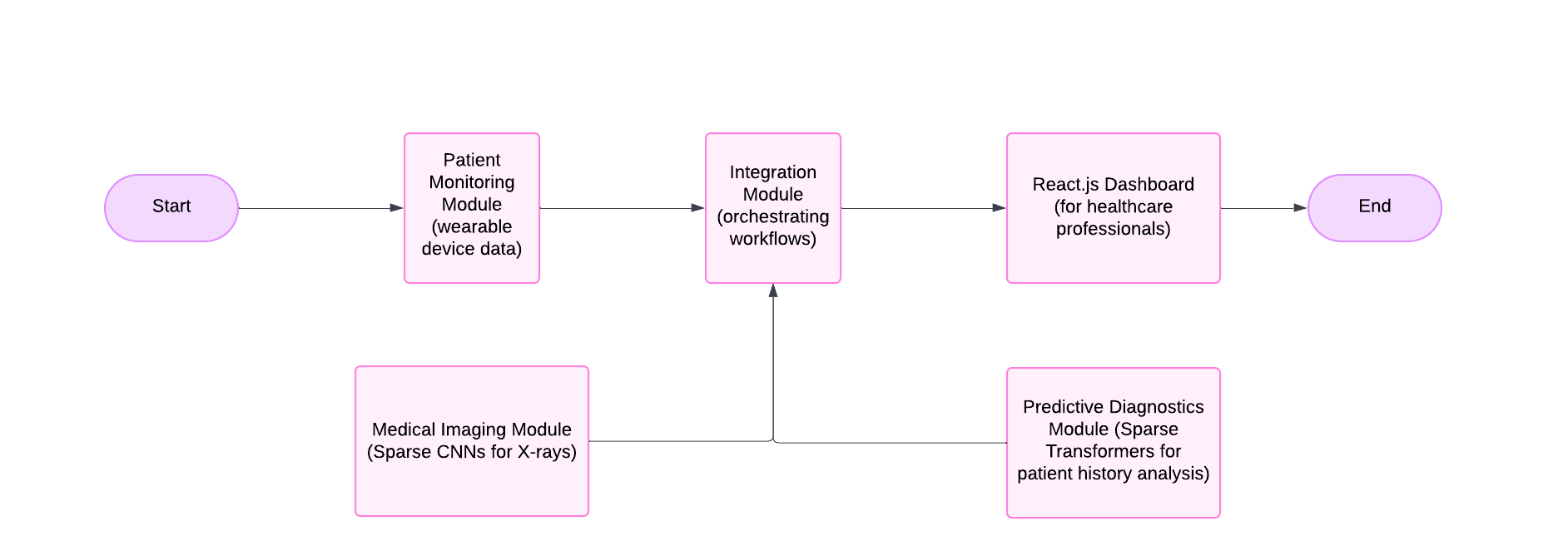

📋 Case Study: Healthcare

Scenario

A hospital needs to integrate patient monitoring, medical imaging, and predictive diagnostics while maintaining efficiency and scalability.

Hybrid AI System Design

1. Sparse AI for Efficiency:

- Medical Imaging Module: Sparse CNNs process X-rays, MRIs, or CT scans, reducing computational overhead.

- Predictive Diagnostics Module: Sparse transformers analyze patient records, optimizing long-sequence data processing.

2. Modular AI for Scalability:

- Patient Monitoring Module: Tracks vitals via wearables, scalable to include additional devices.

- Integration Module: Orchestrates workflows, connecting imaging and diagnostics modules.

Workflow Example

- Patient Visit: Wearable devices stream vitals to the Patient Monitoring Module.

- Medical Imaging: Sparse CNNs analyze imaging data on edge devices.

- Data Integration: Kafka streams imaging results to the Predictive Diagnostics Module.

- Diagnostics: Sparse transformers evaluate combined data for risk predictions.

- Output Delivery: Results are displayed on a React.js dashboard, enabling actionable insights for doctors.
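A minimal, single-process sketch of the workflow above, assuming an in-memory `queue.Queue` as a stand-in for the Kafka topic and simple arithmetic stubs in place of the sparse CNN and sparse transformer. The function names, thresholds, and risk formula are hypothetical; the point is the data flow between independent modules, not the models.

```python
import queue
from dataclasses import dataclass


@dataclass
class Vitals:
    patient_id: str
    heart_rate: float
    spo2: float


# In-process queue standing in for a Kafka topic (assumption for this sketch).
imaging_topic: queue.Queue = queue.Queue()


def monitoring_module(v: Vitals) -> dict:
    """Patient Monitoring Module: flag abnormal wearable readings (illustrative thresholds)."""
    return {"patient_id": v.patient_id,
            "vitals_alert": v.heart_rate > 120 or v.spo2 < 92}


def imaging_module(patient_id: str, scan: list[float]) -> None:
    """Medical Imaging Module: stub for a sparse CNN; publishes its result to the topic."""
    score = sum(scan) / len(scan)  # placeholder for model inference
    imaging_topic.put({"patient_id": patient_id, "imaging_score": score})


def diagnostics_module(monitoring: dict) -> dict:
    """Predictive Diagnostics Module: stub for a sparse transformer combining both signals."""
    imaging = imaging_topic.get(timeout=1)
    if imaging["patient_id"] != monitoring["patient_id"]:
        raise ValueError("imaging and monitoring results belong to different patients")
    risk = 0.5 * imaging["imaging_score"] + (0.5 if monitoring["vitals_alert"] else 0.0)
    return {"patient_id": monitoring["patient_id"], "risk": round(risk, 2)}


# Patient visit -> monitoring; scan -> imaging -> topic -> diagnostics -> dashboard payload
mon = monitoring_module(Vitals("p-001", heart_rate=130, spo2=95))
imaging_module("p-001", scan=[0.2, 0.4, 0.6])
print(diagnostics_module(mon))  # {'patient_id': 'p-001', 'risk': 0.7}
```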

Technologies for Implementation

Sparse AI Tools

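- TensorFlow tf.sparse module: Efficient sparse tensor operations on tf.SparseTensor.

- Hugging Face Transformers: Pre-built sparse transformer models like BigBird and Reformer.

- NVIDIA A100 GPUs: Tensor Cores accelerate 2:4 fine-grained structured sparsity (two nonzero values in every group of four weights).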
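A minimal sketch of producing the 2:4 pattern: in every group of four consecutive weights, the two smallest magnitudes are zeroed. It only creates the pattern; the speedup requires sparse Tensor Cores and kernels that exploit it (NVIDIA exposes these through libraries such as cuSPARSELt and TensorRT), and the network is normally fine-tuned after pruning. The example weights are arbitrary.

```python
import numpy as np


def prune_2_of_4(weights: np.ndarray) -> np.ndarray:
    """Keep the two largest-magnitude values in each group of four along the last axis."""
    if weights.shape[-1] % 4:
        raise ValueError("last dimension must be a multiple of 4")
    groups = weights.reshape(-1, 4).copy()  # copy so the caller's array is untouched
    smallest_two = np.argsort(np.abs(groups), axis=1)[:, :2]
    np.put_along_axis(groups, smallest_two, 0.0, axis=1)  # zero the two smallest per group
    return groups.reshape(weights.shape)


w = np.array([[0.9, -0.1, 0.3, -0.7, 0.05, 0.6, -0.2, 0.4]])
print(prune_2_of_4(w).tolist())
# [[0.9, 0.0, 0.0, -0.7, 0.0, 0.6, 0.0, 0.4]]
```

Unlike unstructured pruning, the fixed "two of every four" layout lets the hardware skip zeros predictably, which is why this particular pattern can be accelerated.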

Modular AI Tools

- Docker & Kubernetes: Containerize and orchestrate modular components.

- Apache Kafka: Stream data between modules.

- React.js & Material-UI: Build modular, healthcare-focused dashboards.

Modular AI Technologies

- Microservices: Independent services communicate over APIs.

- Kubernetes: Orchestrates scalable module deployment.

- GraphQL: Provides flexible data queries across modules.

Addressing Limitations

Sparse AI Challenges

- Training Complexity: Use advanced tools like DeepSpeed for sparsity optimization.

- Hardware Inefficiencies: Transition to hardware with sparse acceleration, such as NVIDIA’s A100 GPUs, which speed up 2:4 structured sparsity.

Modular AI Challenges

- Integration Complexity: Standardize model formats with ONNX and define explicit, versioned interfaces between modules.

- Latency: Optimize inter-module communication to reduce orchestration overhead.

Industries Benefiting from Hybrid Approaches

| Industry | Use Case | Hybrid Advantage |
|---|---|---|
| Healthcare | Medical imaging and diagnostics. | Efficient imaging with scalable monitoring systems. |
| Finance | Fraud detection and forecasting. | Sparse models for anomaly detection; modular risk analytics. |
| Retail | Personalized shopping experiences. | Sparse recommendations integrated with modular inventory tools. |
| Autonomous Tech | Real-time navigation and control. | Sparse vision systems in modular autonomous frameworks. |

Real-World Case Studies

1. OpenAI and GPT-4 Turbo

- Problem: Scaling GPT-4 required optimizing inference for enterprise use.

- Solution: Reportedly introduced sparse models and memory-efficient algorithms, reducing latency by about 40%.

- Impact: Enabled real-time applications for businesses like Microsoft’s Azure OpenAI Services.

2. Cohere’s Fine-Tuned Language Models

- Problem: Industry-specific applications needed efficiency without compromising performance.

- Solution: Fine-tuned smaller models using transfer learning and sparse architectures.

- Impact: Reportedly reduced training costs by 60% for industries like legal and finance.

Real-World Applications by Industry

| Industry | Sparse AI Application | Modular AI Application |
|---|---|---|
| Healthcare | Sparse CNNs for imaging diagnostics. | Patient monitoring and predictive diagnostics. |
| E-Commerce | Sparse recommendation engines for efficiency. | Modular inventory and pricing systems. |
| Autonomous Vehicles | Sparse perception for real-time object detection. | Modular navigation and decision-making systems. |
| Finance | Sparse fraud detection systems. | Modular risk management and sentiment analysis. |

Common Pitfalls and Solutions

1. Sparse AI Challenges

- Hardware Inefficiencies: Sparse models often underutilize GPUs.

- Solution: Use hardware with sparse support, such as NVIDIA A100 GPUs, and prune to the structured pattern it accelerates (2:4 sparsity).

- Accuracy Trade-Offs: Over-pruning can degrade performance.

- Solution: Balance sparsity with hybrid dense-sparse models.

2. Modular AI Challenges

- Integration Complexity: Inter-module communication can introduce latency.

- Solution: Use tools like Docker and Kubernetes for orchestration.

- Maintenance Overheads: Regular updates for independent modules.

- Solution: Automate updates with CI/CD pipelines.

🚀 Future Trends in AI Scaling

1. 🔄 Dynamic Assembly Systems

- Real-Time Modular Adaptation: AI systems dynamically reconfigure modules in real time based on task demands.

- Context-Aware Assembly: Modules adapt to the context of the task, prioritizing relevant workflows like diagnostics in healthcare or navigation in autonomous vehicles.

- Self-Learning Systems: Systems learn from past usage patterns to predict and preconfigure modules before tasks begin.

2. 🛒 Composable AI Marketplaces

- Plug-and-Play AI Solutions: Businesses will access modular AI services tailored to specific industries.

- Open Ecosystems: AI vendors collaborate to create interoperable modules adhering to standards like ONNX.

- Domain-Specific Modules: Pre-trained modules optimized for niche domains such as finance, retail, and education will dominate.

3. 🌱 Green AI

- Energy-Efficient Architectures: Sparse and modular AI models reduce energy consumption during training and inference.

- Carbon-Neutral AI Systems: Investments in renewable energy sources and carbon offset programs will drive AI development.

- Resource-Aware AI Development: Models will prioritize minimal compute resource usage, especially in edge AI deployments.

4. 🔒 Federated and Decentralized Learning

- Privacy-Preserving AI: AI models train on decentralized data (e.g., patient records) while ensuring privacy.

- Edge AI Collaboration: Devices collaborate to train shared models without centralizing data.

- Decentralized AI Networks: Distributed AI systems operate without a central server, increasing resilience and scalability.
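A minimal sketch of Federated Averaging (FedAvg), the canonical algorithm behind this kind of privacy-preserving training: each client trains locally and sends only model weights, which the server averages in proportion to each client's data size. The three "hospitals", the linear model, the learning rate, and the round counts are illustrative assumptions.

```python
def local_update(weights: list[float], data: list[tuple[float, float]],
                 lr: float = 0.01, epochs: int = 20) -> list[float]:
    """One client fits y = w0 + w1 * x on its own data; raw data never leaves the client."""
    w0, w1 = weights
    for _ in range(epochs):
        errors = [(w0 + w1 * x) - y for x, y in data]
        g0 = sum(errors) / len(data)
        g1 = sum(e * x for e, (x, _) in zip(errors, data)) / len(data)
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]


def fed_avg(client_weights: list[list[float]], sizes: list[int]) -> list[float]:
    """Server step of Federated Averaging: average client models weighted by data size."""
    total = sum(sizes)
    return [sum(w[i] * n for w, n in zip(client_weights, sizes)) / total
            for i in range(len(client_weights[0]))]


# Three hypothetical hospitals whose private data all follow y = 2x + 1
clients = [
    [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0)],
    [(x, 2 * x + 1) for x in (3.0, 4.0)],
    [(x, 2 * x + 1) for x in (5.0, 6.0, 7.0, 8.0)],
]
global_w = [0.0, 0.0]
for _ in range(300):  # communication rounds: only weights travel, never data
    updates = [local_update(global_w, data) for data in clients]
    global_w = fed_avg(updates, [len(d) for d in clients])
print([round(w, 2) for w in global_w])  # approaches [1.0, 2.0]
```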

5. 🧩 Hybrid AI Architectures

- Sparse-Modular Combinations: Hybrid systems integrate sparse and modular designs for efficiency and scalability.

- Task-Specific Hybrid Models: Specialized hybrids combine sparse computations and modularity for targeted applications like real-time language translation.

- Hybrid AIaaS (AI-as-a-Service): Cloud providers will offer hybrid AI models as services for diverse industries.

6. 💾 AI Co-Design with Hardware

- Customized AI Chips: Hardware optimized for sparse computations and modular workflows will improve performance.

- Neuromorphic Computing: Brain-inspired designs will improve scalability and efficiency in AI operations.

- Memory-Optimized Designs: Innovative memory hierarchies will address bottlenecks in large-scale training.

7. 🧠 Multi-Modal and Multi-Task AI

- Unified AI Models: Handle multiple data types (text, vision, audio) within a single architecture.

- Interoperable Modules: Modular AI systems with universal interfaces for seamless integration.

- Adaptive Multi-Task Learning: Dynamically allocate resources to prioritize tasks based on real-time requirements.

8. 🔧 Self-Healing AI Systems

- Autonomous Error Detection: AI systems identify and isolate faulty modules to prevent cascading failures.

- Real-Time Module Replacement: Faulty components are swapped with backups or updated versions without downtime.

- Anomaly Detection in Scaling: Systems monitor scaling processes to identify inefficiencies.
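A minimal sketch of failure isolation and replacement in the circuit-breaker style: after a configurable number of consecutive failures, the slot permanently swaps the faulty module for a backup, and it answers from the backup during the failure window so requests keep being served. Class and function names are hypothetical, and the sketch only reacts to modules that raise errors, not to slow or inaccurate ones.

```python
from typing import Callable


class SelfHealingSlot:
    """Route requests to a primary module and fail over to a backup after repeated errors."""

    def __init__(self, primary: Callable[[str], str], backup: Callable[[str], str],
                 max_failures: int = 3) -> None:
        self.active = primary
        self.backup = backup
        self.max_failures = max_failures  # consecutive errors tolerated (assumed policy)
        self.failures = 0
        self.swapped = False

    def __call__(self, request: str) -> str:
        try:
            result = self.active(request)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures and not self.swapped:
                # Isolate the faulty module by promoting the backup permanently.
                self.active, self.swapped = self.backup, True
                self.failures = 0
            return self.backup(request)  # keep serving during the failure window
        self.failures = 0  # a healthy response resets the counter
        return result


def flaky(request: str) -> str:
    raise RuntimeError("model server unavailable")


def stable(request: str) -> str:
    return f"ok:{request}"


slot = SelfHealingSlot(primary=flaky, backup=stable, max_failures=2)
print([slot(r) for r in ("a", "b", "c")], slot.swapped)  # ['ok:a', 'ok:b', 'ok:c'] True
```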

9. 🧐 Explainable and Ethical Scaling

- Transparent AI Scaling: Tools provide insights into resource allocation during scaling.

- Bias Mitigation at Scale: Modular and sparse architectures integrate fairness checks to ensure equitable outcomes.

- Regulatory Compliance: Systems are designed to meet ethical and legal standards.

10. 🤖 AI-Driven Orchestration

- Automated Scaling Decisions: AI systems manage their own scaling by analyzing workload patterns.

- Predictive Resource Allocation: AI predicts upcoming demands, pre-allocating resources accordingly.

- Cross-Cloud Scaling: Orchestrators manage scaling across multiple cloud providers for cost-effectiveness.

11. 🤝 Human-AI Collaboration in Scaling

- Augmented Intelligence: AI systems scale based on user feedback, improving usability.

- Collaborative Decision-Making: Modules prioritize scaling efforts aligned with human-defined goals.

- Customizable User Interfaces: Dashboards provide intuitive control over scaled AI operations.

12. 🧪 Quantum AI Integration

- Quantum-Assisted Scaling: Quantum computing could eventually accelerate sparse model optimizations and modular workflows.

- Quantum-Classical Hybrids: Hybrid architectures may leverage quantum processing for specific scaling challenges.

- Scalable Quantum Algorithms: Quantum methods may eventually enhance performance for tasks like sparse attention in transformers.

13. 🔬 AI-Driven Discovery of Scaling Methods

- Meta-Learning for Scaling: AI systems optimize their scaling strategies through experimentation.

- Automated Scaling Frameworks: AI discovers and implements new scaling paradigms autonomously.

- Scalable Neural Architecture Search (NAS): AI systems autonomously identify optimal architectures.

14. 👤 Personalized AI Scaling

- User-Centric Models: AI systems prioritize scaling to meet individual user preferences.

- Context-Aware Personalization: Modules adapt to fit real-time user contexts (e.g., switching between professional and personal tasks).

- On-the-Fly Customization: Users can configure module behavior and performance instantly.

15. 🌐 Sustainable Data-Centric AI

- Efficient Data Usage: AI systems focus on high-quality datasets for scaling to minimize waste.

- Adaptive Data Augmentation: Training pipelines dynamically augment data so that models, including sparse ones, can scale without proportionally more raw data.

- Data Privacy at Scale: Federated systems ensure privacy-preserving scaling for sensitive data.

16. 🏭 Cross-Industry Scaling Frameworks

- Standardized Scaling Practices: Shared frameworks ensure consistent scaling across industries like healthcare and finance.

- Sector-Specific Modules: Industry-focused modules tailored for unique requirements will proliferate.

- Collaborative Scaling Networks: Organizations pool resources to scale AI collectively, reducing costs.

🎯 Key Takeaway

AI scaling is evolving toward dynamic, sustainable, and user-driven architectures. Future systems will prioritize efficiency, privacy, and scalability, reshaping industries with smarter solutions. The future lies not in brute-force scaling but in innovating smarter. Sparse AI optimizes computation, while Modular AI enhances flexibility and scalability. Together, they provide a powerful framework for sustainable, efficient, and adaptable AI systems.

💬 What combination of Sparse and Modular AI do you think would benefit your industry most? Share your insights below!